The evolution of AI Acceleration GPU Architectures is reshaping the artificial intelligence landscape. This shift stems from sustained innovation in graphics processing unit (GPU) design. Originally built for high-fidelity graphics, these specialized processors now power advanced AI models, from deep learning training to real-time inference. New architectures push the boundaries of computational power, memory efficiency, and software integration, redefining what AI hardware can do. This article explores the critical innovations, key players, and overarching trends in this rapidly evolving domain.

Why AI Acceleration GPU Architectures Are Indispensable

Artificial intelligence (AI), and deep learning in particular, depends on parallel computation. Training a neural network involves enormous numbers of matrix multiplications and convolutions, and many of these operations can run simultaneously across huge datasets. This is where GPUs excel. Traditional Central Processing Units (CPUs) are optimized for sequential tasks and rely on a few powerful cores, whereas GPUs contain thousands of smaller, specialized cores that handle many computations concurrently. That design makes GPUs well suited to data-intensive, parallel AI workloads, which is why advanced AI Acceleration GPU Architectures matter.
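To make the parallelism concrete, here is a minimal Python sketch (the function names are illustrative, not from any library). Every element of a matrix product is an independent dot product, so all of them can be computed at the same time. A GPU maps this work onto thousands of hardware threads; the sketch only hands each dot product to a thread pool to show the shape of the work, not to measure speed.

```python
from concurrent.futures import ThreadPoolExecutor

def dot(row, col):
    """One output element of C = A @ B: an independent multiply-accumulate loop."""
    return sum(a * b for a, b in zip(row, col))

def parallel_matmul(A, B, workers=4):
    """Compute C = A @ B by submitting every (i, j) dot product as its own task.

    Python threads will not actually speed this up (the GIL serializes the
    arithmetic); the point is that no task depends on any other task.
    """
    cols = list(zip(*B))  # columns of B
    n = len(cols)
    tasks = [(i, j) for i in range(len(A)) for j in range(n)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        flat = list(pool.map(lambda ij: dot(A[ij[0]], cols[ij[1]]), tasks))
    # pool.map preserves task order, so the flat list reshapes row by row
    return [flat[i * n:(i + 1) * n] for i in range(len(A))]

print(parallel_matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]]))  # [[19, 22], [43, 50]]
```

A 2x2 product already yields four independent tasks; a 4096x4096 product yields about 16.8 million, which is the scale at which thousands of simple cores beat a few fast ones.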

Early AI models ran on CPUs. As neural networks grew in size and complexity, however, their computational demands outpaced what CPUs could deliver. GPUs provided a dramatic performance leap and quickly became the standard for AI development. The shift was not accidental: it reflected the GPU’s natural ability to scale parallel operations. Modern AI Acceleration GPU Architectures refine that inherent capability and augment it with specialized, AI-specific hardware.

NVIDIA’s Enduring Dominance in AI Acceleration GPU Architectures

NVIDIA is synonymous with high-performance computing and, increasingly, with AI. Its strategy combines cutting-edge hardware with a comprehensive software ecosystem, creating an integrated platform designed specifically for AI development. That combination underpins its leadership in next-gen AI processing.

The Evolution of Tensor Cores for Deep Learning Hardware

The Tensor Core is a cornerstone of NVIDIA’s AI acceleration strategy. These specialized hardware units debuted in the Volta architecture and were designed to speed up the matrix operations at the heart of deep learning. Tensor Cores have since evolved through the Turing, Ampere, and Hopper architectures, becoming significantly more powerful and versatile with each generation.

Tensor Cores also enable mixed-precision computing, a key technique for boosting AI performance. They perform multiplications on lower-precision data types, such as FP16 (16-bit floating point) or BF16 (bfloat16), in place of FP32, which sharply raises throughput and speeds up both training and inference. Accuracy is preserved largely by accumulating the products at higher precision (typically FP32) and, during training, keeping a higher-precision copy of the weights. Later generations add TF32 (TensorFloat-32) and integer formats such as INT8 and INT4, giving more flexibility to trade precision for speed across varied AI workloads.
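Why does the accumulator precision matter? The following minimal sketch emulates number formats with Python’s `struct` module (format `e` is IEEE half precision); the helper names are illustrative, and the BF16 helper truncates rather than rounds, for simplicity. It sums 10,000 FP16 inputs of about 1e-4 twice: once with an FP16 running total and once with an FP32 running total, mimicking the FP16-in, FP32-accumulate pattern.

```python
import struct

def to_fp16(x: float) -> float:
    """Round to the nearest IEEE 754 half-precision value (OverflowError past +/-65504)."""
    return struct.unpack("<e", struct.pack("<e", x))[0]

def to_fp32(x: float) -> float:
    """Round to the nearest IEEE 754 single-precision value."""
    return struct.unpack("<f", struct.pack("<f", x))[0]

def to_bf16(x: float) -> float:
    """bfloat16 sketch: keep the top 16 bits of the FP32 pattern (truncation, not rounding).
    BF16 keeps FP32's 8 exponent bits, so its range matches FP32 with far less precision."""
    bits = struct.unpack("<I", struct.pack("<f", x))[0] & 0xFFFF0000
    return struct.unpack("<f", struct.pack("<I", bits))[0]

def accumulate(values, round_acc):
    """Sum values, rounding the running total to the accumulator's format after every add."""
    acc = 0.0
    for v in values:
        acc = round_acc(acc + v)
    return acc

# 10,000 FP16 inputs of ~1e-4: the exact sum is ~1.0.
inputs = [to_fp16(1e-4)] * 10_000
fp16_sum = accumulate(inputs, to_fp16)  # stalls once the addend is under half an ulp of the total
fp32_sum = accumulate(inputs, to_fp32)  # FP16 inputs, FP32 accumulator
print(fp16_sum, fp32_sum)
```

The FP16 total stops growing far short of 1.0, because each tiny addend rounds away, while the FP32 accumulator lands close to the true sum.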

Hopper Architecture: A Leap for Neural Network Acceleration

The Hopper architecture, exemplified by the NVIDIA H100 GPU, represents a significant leap forward in AI Acceleration GPU Architectures. Its fourth-generation Tensor Cores are enhanced for the huge demands of Large Language Models (LLMs) and generative AI. The H100 also introduces a “Transformer Engine,” which dynamically chooses between FP8 and 16-bit precision for each layer of a transformer model. NVIDIA cites up to 6x higher performance than the A100 for training trillion-parameter models with FP8, a critical advance for the rapid expansion of generative AI.
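NVIDIA does not spell out the full selection logic here, but its open-source Transformer Engine library scales each tensor into the FP8 range using a history of recent absolute maxima (“delayed scaling”). The sketch below shows that scaling step under simplifying assumptions. The 448 limit is the largest finite FP8 E4M3 value. The `pick_precision` fallback rule is entirely hypothetical, added only to illustrate what a per-layer precision decision could look like; it is not NVIDIA’s heuristic.

```python
E4M3_MAX = 448.0               # largest finite FP8 E4M3 value
E4M3_MIN_NORMAL = 2.0 ** -6    # smallest normal E4M3 value

def delayed_scale(amax_history, fmt_max=E4M3_MAX, margin=0):
    """Scale factor that maps the recent max |x| onto the top of the FP8 range."""
    amax = max(amax_history)
    return 1.0 if amax == 0 else fmt_max / (amax * 2.0 ** margin)

def pick_precision(tensor, amax_history, max_low_fraction=0.01):
    """HYPOTHETICAL per-layer policy: use FP8 unless too many scaled values
    would fall below FP8's normal range (losing precision or flushing to zero)."""
    scale = delayed_scale(amax_history + [max(abs(v) for v in tensor)])
    scaled = [abs(v) * scale for v in tensor if v != 0]
    low = sum(1 for v in scaled if v < E4M3_MIN_NORMAL) / max(len(scaled), 1)
    return ("fp8_e4m3", scale) if low < max_low_fraction else ("bf16", 1.0)

print(pick_precision([0.5, -1.0, 2.0], [1.5]))   # ('fp8_e4m3', 224.0)
print(pick_precision([1e6, 1e-6], [])[0])        # bf16
```

A tensor with a modest dynamic range fits FP8 once scaled; one spanning twelve orders of magnitude does not, so the sketch falls back to a 16-bit format.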

The A100 GPU, built on the earlier Ampere architecture, remains a workhorse, especially in cloud data centers. Its robust third-generation Tensor Cores and powerful memory subsystem deliver exceptional performance across AI and High-Performance Computing (HPC) applications.

Memory, Interconnects, and Resource Management in AI Acceleration GPU Architectures

NVIDIA’s data center GPUs integrate High Bandwidth Memory (HBM), such as HBM2 and HBM3. Because HBM stacks sit next to the GPU on the same package and expose a very wide interface, they deliver far higher data transfer rates than traditional GDDR memory, which greatly reduces bottlenecks in data-intensive AI workloads. Fast access to large datasets keeps the Tensor Cores fed instead of waiting on memory, which is vital when training very large AI models.
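The bandwidth gap follows from simple arithmetic: peak bandwidth equals interface width times per-pin data rate. The sketch below compares one HBM3 stack (1024-bit interface at the 6.4 Gb/s JEDEC rate) with one GDDR6 device (32-bit interface at 16 Gb/s, a common speed). These are illustrative per-device figures, not the configuration of any particular NVIDIA product, which combines several stacks or devices and may run them at other speeds.

```python
def peak_bandwidth_gbs(bus_width_bits: int, gbps_per_pin: float) -> float:
    """Peak bandwidth in GB/s = interface width (bits) x per-pin rate (Gb/s) / 8 bits per byte."""
    return bus_width_bits * gbps_per_pin / 8

hbm3_stack = peak_bandwidth_gbs(1024, 6.4)   # one HBM3 stack
gddr6_chip = peak_bandwidth_gbs(32, 16.0)    # one GDDR6 device
print(hbm3_stack, gddr6_chip)                # 819.2 64.0
```

The per-pin rates differ by only a few times; the 32x wider interface is what gives HBM its edge, and a GPU multiplies the per-stack figure by the number of stacks it carries.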

To scale AI computations across GPUs, NVIDIA developed NVLink, a high-speed interconnect that lets GPUs communicate directly at much higher bandwidth than standard PCIe connections. NVLink enables seamless scaling for large AI clusters, in which hundreds or even thousands of GPUs work together on one massive model. For the opposite problem, sharing one GPU, NVIDIA offers Multi-Instance GPU (MIG). Supported on GPUs such as the A100 and H100, MIG partitions a single GPU into up to seven isolated instances, each with its own memory and compute resources. This is especially useful in multi-tenant cloud environments, where different users or tasks efficiently share one physical GPU.
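MIG carves a GPU into fixed slices of compute and memory. The sketch below uses the A100 40GB profile names (for example `3g.20gb` = 3 of 7 compute slices and 4 of 8 memory slices) to check whether a requested mix of instances fits. It is a deliberate simplification: it only sums slices and ignores NVIDIA’s placement rules, so it can accept layouts the driver would reject.

```python
# A100 40GB MIG profiles: name -> (compute slices, memory slices).
A100_40GB_PROFILES = {
    "1g.5gb": (1, 1),
    "2g.10gb": (2, 2),
    "3g.20gb": (3, 4),
    "4g.20gb": (4, 4),
    "7g.40gb": (7, 8),
}
TOTAL_COMPUTE_SLICES, TOTAL_MEMORY_SLICES = 7, 8

def fits(requested_profiles):
    """Simplified check: do the requested instances fit within the slice budget?
    Real MIG placement has additional constraints that this sketch ignores."""
    compute = sum(A100_40GB_PROFILES[p][0] for p in requested_profiles)
    memory = sum(A100_40GB_PROFILES[p][1] for p in requested_profiles)
    return compute <= TOTAL_COMPUTE_SLICES and memory <= TOTAL_MEMORY_SLICES

print(fits(["3g.20gb", "3g.20gb"]))   # True: two mid-sized tenants
print(fits(["1g.5gb"] * 7))           # True: seven small tenants
print(fits(["4g.20gb", "4g.20gb"]))   # False: 8 compute slices > 7
```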

The Unifying Software Ecosystem for AI Hardware Platforms: CUDA and Beyond

NVIDIA’s software ecosystem is arguably as critical as its hardware. CUDA, a parallel computing platform and programming model, gives developers direct access to the GPU’s virtual instruction set and parallel compute elements. Built on CUDA, libraries such as cuDNN accelerate deep learning primitives, while TensorRT optimizes AI models for inference to maximize throughput and minimize latency. NVIDIA has also introduced NIM (NVIDIA Inference Microservices), which provides pre-built, production-ready microservices for deploying generative AI models. Together, this software stack helps developers exploit the hardware efficiently and makes NVIDIA’s platform both accessible and powerful.
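The core idea of the CUDA programming model is that a kernel is written for a single thread, and each thread finds its element from its block and thread indices (`blockIdx.x * blockDim.x + threadIdx.x` in CUDA C++). The sketch below emulates a 1-D launch of a SAXPY kernel (y = a·x + y) in plain Python, running the threads one after another. It is a teaching model of the indexing only, not CUDA code, and the `launch` helper is hypothetical.

```python
def launch(kernel, grid_dim, block_dim, *args):
    """Emulate a 1-D CUDA launch sequentially: one kernel call per (block, thread)."""
    for block_idx in range(grid_dim):
        for thread_idx in range(block_dim):
            kernel(block_idx, block_dim, thread_idx, *args)

def saxpy_kernel(block_idx, block_dim, thread_idx, n, a, x, y):
    """Per-thread body: compute one element of y = a * x + y."""
    i = block_idx * block_dim + thread_idx   # global thread index
    if i < n:  # guard: the last block may have more threads than remaining elements
        y[i] = a * x[i] + y[i]

n = 10
x = [float(i) for i in range(n)]
y = [1.0] * n
block = 4
grid = (n + block - 1) // block  # ceiling division so every element gets a thread
launch(saxpy_kernel, grid, block, n, 2.0, x, y)
print(y)  # [1.0, 3.0, 5.0, 7.0, 9.0, 11.0, 13.0, 15.0, 17.0, 19.0]
```

On a real GPU the threads run concurrently, which is why the kernel body must not depend on execution order; libraries such as cuDNN package thousands of tuned kernels written in this style.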

Performance figures highlight NVIDIA’s impact on AI Acceleration GPU Architectures. NVIDIA reports that the H100 GPU delivers up to 6x faster AI training than its predecessor, the A100. In the cloud, Azure ND H100 v5 VMs built on H100 GPUs show up to a 2x speedup in large language model inference, demonstrating substantial real-world efficiency gains.

AMD’s Rising Star in AI Acceleration GPU Architectures

AMD is actively expanding its presence in AI acceleration and challenging established players. It pairs innovative GPU architectures with an open software philosophy, and its strategy spans a broad spectrum, from consumer-grade AI applications to high-end data center solutions, making its AI hardware platforms increasingly competitive.

RDNA and CDNA: Two AI GPU Innovation Architectures for Diverse Needs

AMD employs two distinct GPU architectures. RDNA serves consumer graphics cards and client AI workloads, while CDNA targets data center AI and HPC. The Radeon RX 9000 series, built on RDNA 4, incorporates second-generation AI Accelerators that deliver over 4x more AI compute than the prior RDNA 3 generation. They also support new data types and sparsity, reaching up to 1557 TOPS, which greatly improves AI inference on desktops and enables more advanced on-device AI. AMD cites up to 8x higher integer and 4x higher FP16 throughput per cycle with sparsity, underscoring the architecture’s focus on efficiency.
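“With sparsity” figures usually assume structured sparsity: a fixed fraction of the weights is pruned to zero in a regular pattern, so the hardware can skip those multiplications and roughly double throughput. The best-known pattern is 2:4 (two nonzeros in every group of four, popularized by NVIDIA’s Ampere Tensor Cores). The sketch below assumes that pattern purely for illustration; it does not claim RDNA 4 uses exactly this scheme. It prunes a weight vector, stores only the survivors plus their positions, and computes a dot product with half the multiplies.

```python
def prune_2_of_4(weights):
    """Keep the two largest-magnitude values in each group of four.
    Returns (values, indices): the survivors and each one's position (0-3) in its group."""
    if len(weights) % 4:
        raise ValueError("length must be a multiple of 4")
    values, indices = [], []
    for g in range(0, len(weights), 4):
        group = weights[g:g + 4]
        keep = sorted(sorted(range(4), key=lambda k: abs(group[k]), reverse=True)[:2])
        for k in keep:
            values.append(group[k])
            indices.append(k)
    return values, indices

def sparse_dot(values, indices, x):
    """Dot product over stored values only: 2 multiplies per group of 4 instead of 4."""
    total = 0.0
    for j, (v, k) in enumerate(zip(values, indices)):
        group_start = (j // 2) * 4   # two survivors per group
        total += v * x[group_start + k]
    return total

w = [0.9, -0.1, 0.05, -1.2, 0.3, 0.0, -0.7, 0.2]
vals, idx = prune_2_of_4(w)
print(vals, idx)  # [0.9, -1.2, 0.3, -0.7] [0, 3, 0, 2]
print(sparse_dot(vals, idx, [1, 2, 3, 4, 5, 6, 7, 8]))
```

Pruning changes the model, so in practice networks are fine-tuned after pruning to recover accuracy; the speedup comes from the fixed pattern, which lets hardware locate nonzeros from small per-group indices.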

In the data center, AMD’s CDNA architecture powers the Instinct MI series. The Instinct MI350 series uses AMD Matrix Core technology which, like NVIDIA’s Tensor Cores, accelerates the matrix operations essential to deep learning. Matrix Cores support a wide range of precisions, from low-precision FP4, FP6, and FP8 up to FP64. This versatility lets the MI350 handle both intensive AI training and demanding HPC applications, offering remarkable flexibility across diverse computational tasks.
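Low-precision formats like FP4 and FP8 are ordinary sign/exponent/mantissa encodings with very few bits. The decoder below assumes the OCP-style layouts (FP4 E2M1 with bias 1; FP8 E4M3 with bias 7, no infinities, and the all-ones pattern reserved for NaN). Whether every accelerator uses exactly these encodings is an assumption here. Enumerating the codes shows how coarse FP4 is: it can represent only eight non-negative values.

```python
def decode_minifloat(code: int, exp_bits: int, man_bits: int, bias: int) -> float:
    """Decode a sign | exponent | mantissa bit pattern. Assumes no infinities
    (true of OCP FP4 E2M1 and FP8 E4M3); NaN codes must be skipped by the caller."""
    sign = -1.0 if (code >> (exp_bits + man_bits)) & 1 else 1.0
    exp = (code >> man_bits) & ((1 << exp_bits) - 1)
    man = code & ((1 << man_bits) - 1)
    if exp == 0:  # subnormal: no implicit leading 1
        return sign * (man / (1 << man_bits)) * 2.0 ** (1 - bias)
    return sign * (1 + man / (1 << man_bits)) * 2.0 ** (exp - bias)

# FP4 E2M1: every non-negative value the format can hold.
fp4_values = sorted({decode_minifloat(c, 2, 1, 1) for c in range(8)})
print(fp4_values)  # [0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0]

# FP8 E4M3: code 0x7F (exponent and mantissa all ones) is NaN, so skip it.
fp8_max = max(decode_minifloat(c, 4, 3, 7) for c in range(128) if c != 0x7F)
print(fp8_max)  # 448.0
```

Because so few values exist, low-precision formats are paired with scale factors (per tensor or per small block) so that real data lands in the representable range.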

High-Density Memory and Scalable Solutions for AI Acceleration GPU Architectures

The MI350 series also features impressive memory: up to 288GB of HBM3E per module and up to 8TB/s of memory bandwidth. This capacity and bandwidth are engineered for huge generative AI models and for complex scientific workloads where memory access speed and volume are decisive.
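A quick way to read a capacity figure is to ask how many model parameters fit. The sketch below is weights-only arithmetic (1 GB = 10^9 bytes); the optional `reserve_fraction` is an illustrative knob for memory held back for the KV cache, activations, and runtime overhead, which real deployments always need.

```python
BYTES_PER_PARAM = {"fp16": 2.0, "bf16": 2.0, "fp8": 1.0, "fp6": 0.75, "fp4": 0.5}

def max_params_billions(memory_gb: float, dtype: str, reserve_fraction: float = 0.0) -> float:
    """Upper bound on parameters (in billions) whose weights fit in memory_gb."""
    usable_bytes = memory_gb * 1e9 * (1 - reserve_fraction)
    return usable_bytes / BYTES_PER_PARAM[dtype] / 1e9

for dtype in ("fp16", "fp8", "fp4"):
    print(dtype, max_params_billions(288, dtype))
```

At 288GB, halving the bytes per parameter doubles the model size that fits (144 billion parameters at FP16, 288 billion at FP8, 576 billion at FP4, before any reserve), which is one reason low-precision formats and large HBM capacities evolve together.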

Looking ahead, AMD has announced Helios, a rack-scale AI solution built around MI450 GPUs based on its next-generation CDNA architecture. Helios aims for leadership in large-scale training and distributed inference. Each MI450 is slated to offer up to 432GB of HBM4 memory and nearly 20TB/s of memory bandwidth. That level of memory and bandwidth is crucial for scaling next-generation AI models, which continue to grow in complexity and parameter count.

ROCm: An Open Ecosystem for AI GPU Innovation

AMD is strongly committed to an open software ecosystem, embodied by its ROCm open software platform. ROCm is compatible with major machine learning frameworks such as PyTorch and TensorFlow, so developers can build and migrate AI applications freely without being locked into a proprietary stack. This openness sets AMD apart and attracts developers and researchers who prioritize flexibility and community collaboration.

Performance figures highlight AMD’s rapid progress in deep learning hardware. AMD says the MI350 series provides a 4x generational increase in AI compute over its predecessor, the MI300 series, along with a 35x leap in inferencing performance. These numbers signal AMD’s serious intent to compete for leadership in the AI acceleration market.

Intel’s Comprehensive Portfolio of AI Acceleration GPU Architectures

Intel, traditionally known for CPUs, has diversified its AI acceleration offerings. Its portfolio ranges from integrated accelerators to purpose-built AI chips and serves varied market segments, from edge devices to enterprise data centers.

Xe Architecture and XMX AI Engines for Next-Gen AI Processing

Intel’s Arc Pro GPUs based on the second-generation Xe architecture (Xe2) integrate XMX (Xe Matrix eXtensions) AI engines. These engines are optimized for AI inference and creative workloads, giving professionals AI acceleration on their own workstations. By bringing AI closer to users, the Xe architecture aims to boost productivity and enable new AI-powered applications, including tools for design and content creation.

Gaudi: A Dedicated AI Accelerator for Deep Learning Hardware

Intel’s Gaudi AI accelerators are purpose-built to compete directly with high-end data center GPUs from NVIDIA and AMD. The third-generation Gaudi 3, for instance, targets NVIDIA’s H100. Gaudi 3 is engineered for large-model training and high-efficiency inference, with significant improvements in compute, memory, and networking. Unlike general-purpose GPUs, Gaudi accelerators focus exclusively on AI workloads, combining matrix multiplication engines, dedicated tensor processors, and integrated Ethernet networking.

Intel claims that Gaudi 3 trains certain AI models 1.7x faster than the H100 and is 1.5x more efficient than the H100 at running certain workloads. If borne out, these figures show that a specialized design can reach high throughput and efficiency on the AI tasks it targets.

Integrated AI Accelerators and Flexible Solutions for AI Hardware Platforms

Beyond dedicated GPUs and Gaudi accelerators, Intel builds AI accelerators into select Xeon processors, enabling AI inference directly on the CPU. This suits lighter workloads or scenarios where a dedicated GPU would be excessive. Intel also offers FPGAs (Field-Programmable Gate Arrays) for flexible, efficient AI at the edge. Because FPGAs are reconfigurable, developers can customize the hardware logic for specific AI models, a strong advantage for specialized, low-latency edge inference.

The Unified Software Approach for AI Hardware Platforms: OpenVINO and OneAPI

Intel backs its hardware with a comprehensive software stack. The widely used OpenVINO toolkit optimizes and deploys AI inference models across Intel CPUs, GPUs, FPGAs, and VPUs. oneAPI, Intel’s broader initiative, provides a unified, open, standards-based programming model that spans different architectures, so developers can write code once and deploy it across Intel’s heterogeneous hardware. This simplifies development and maximizes hardware utilization for varied AI workloads.

Overarching Trends Shaping AI Acceleration GPU Architectures

Beyond individual manufacturers’ innovations, several broad trends are collectively pushing the boundaries of AI acceleration. These trends will shape future AI Acceleration GPU Architectures and the wider AI hardware ecosystem.

Enhanced Parallel Processing Capabilities in AI Acceleration GPU Architectures

The GPU’s inherent strength lies in massive parallelism, and new architectures keep refining and expanding it. Modern GPUs pack more processing cores, organized into multiprocessors that keep many threads in flight at once, which lets them handle ever-larger AI models more efficiently. Designs increasingly add specialized instructions and execution units for AI-specific operations, so that matrix multiplication, for instance, runs at unprecedented speed. Keeping thousands of cores busy on these operations is what makes GPUs such effective parallel processors for neural networks.
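Keeping those cores busy on matrix multiplication usually means tiling: the output is split into tiles that can be computed independently (on a GPU, roughly one tile per thread block), and each tile is built by streaming matching tiles of the inputs through fast on-chip memory. The Python sketch below shows only the loop structure; it has no real on-chip memory, and the tile size is an arbitrary illustrative choice.

```python
def tiled_matmul(A, B, tile=2):
    """C = A @ B computed tile by tile.

    Each (ti, tj) output tile is independent work, analogous to one GPU thread
    block; the tk loop walks matching tiles of A and B, which a real kernel
    would stage in shared memory or registers before multiplying.
    """
    m, k_dim, n = len(A), len(B), len(B[0])
    C = [[0] * n for _ in range(m)]
    for ti in range(0, m, tile):
        for tj in range(0, n, tile):
            for tk in range(0, k_dim, tile):
                for i in range(ti, min(ti + tile, m)):
                    for j in range(tj, min(tj + tile, n)):
                        C[i][j] += sum(A[i][k] * B[k][j] for k in range(tk, min(tk + tile, k_dim)))
    return C

A = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
B = [[9, 8, 7], [6, 5, 4], [3, 2, 1]]
print(tiled_matmul(A, B))  # [[30, 24, 18], [84, 69, 54], [138, 114, 90]]
```

The `min(...)` bounds handle edges when the matrix size is not a multiple of the tile size, the same boundary check a GPU kernel needs.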

Memory Innovations: Breaking the “Memory Wall”

The “memory wall” is a persistent challenge: processors can compute faster than memory can supply data, so data transfer between them becomes the bottleneck. It hits high-performance computing and AI especially hard. New AI Acceleration GPU Architectures address it directly through continuous memory innovation, led by High Bandwidth Memory (HBM). Each step from HBM2 to HBM3 and HBM4 substantially increases capacity and bandwidth, which is crucial for handling immense datasets and large model parameters. Unified memory architectures are also gaining traction: CPUs and GPUs share a single address space, which simplifies data management and reduces data-movement overhead. The goal is to keep processing units fed with data rather than sitting idle.
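The roofline model is a standard way to quantify the memory wall: attainable performance is the lower of peak compute and (memory bandwidth × arithmetic intensity), where intensity is FLOPs performed per byte moved. The sketch below applies it to a hypothetical accelerator (1000 TFLOP/s peak, 3 TB/s bandwidth; not any specific product) and assumes each matrix crosses memory exactly once.

```python
def attainable_tflops(peak_tflops: float, bandwidth_tb_s: float, flops_per_byte: float) -> float:
    """Roofline: performance is capped by compute or by memory traffic, whichever binds.
    (TB/s x FLOP/byte = TFLOP/s, so the units line up.)"""
    return min(peak_tflops, bandwidth_tb_s * flops_per_byte)

def matmul_intensity(m: int, n: int, k: int, bytes_per_elem: int = 2) -> float:
    """FLOPs per byte for C[m,n] = A[m,k] @ B[k,n] if each matrix moves through memory once."""
    flops = 2 * m * n * k  # one multiply and one add per term
    bytes_moved = bytes_per_elem * (m * k + k * n + m * n)
    return flops / bytes_moved

gemv = matmul_intensity(1, 8192, 8192)     # matrix-vector: about 1 FLOP/byte
gemm = matmul_intensity(8192, 8192, 8192)  # large square matmul: about 2731 FLOP/byte
print(attainable_tflops(1000, 3, gemv))    # memory-bound: about 3 TFLOP/s
print(attainable_tflops(1000, 3, gemm))    # compute-bound: 1000
```

The matrix-vector product, typical of token-by-token LLM inference, reaches well under 1% of peak on this hypothetical chip, which is why more bandwidth, not more compute, is often the lever that matters.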

The Rise of Heterogeneous Computing

The future of AI computing is heterogeneous. Systems integrate a variety of processing units, including CPUs, GPUs, TPUs, FPGAs, and NPUs, to leverage each one’s strengths. CPUs manage control flow and general-purpose tasks, GPUs handle parallel matrix operations, and TPUs and FPGAs offer highly optimized or customizable acceleration for specific AI tasks. This integrated approach improves both performance and efficiency across diverse AI workloads, from complex model training to efficient edge inference.

Focus on Energy Efficiency and Sustainability

As AI models grow in complexity and scale, their energy consumption rises, so energy efficiency is now a major focus for future AI Acceleration GPU Architectures. Manufacturers are adopting more efficient power management systems, finer manufacturing processes that reduce power leakage, and novel cooling solutions. The push for “green AI” both reduces operational costs and addresses the sustainability concerns of large-scale AI. Innovations include dynamic voltage and frequency scaling (DVFS), specialized low-power compute modes, and architecture-level optimizations that minimize energy per computation. Energy efficiency is therefore a critical factor in both the economic viability and the environmental responsibility of AI.
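DVFS works because switching power scales with the square of voltage. The sketch below uses the classic CMOS estimate P = activity × C_eff × V² × f with normalized, hypothetical numbers, and ignores static (leakage) power. Lowering both voltage and frequency by 10% cuts dynamic power by about 27% and energy per clock cycle by about 19%.

```python
def dynamic_power(c_eff: float, voltage: float, freq: float, activity: float = 1.0) -> float:
    """Classic CMOS switching-power estimate: P = activity * C_eff * V^2 * f."""
    return activity * c_eff * voltage ** 2 * freq

def energy_per_cycle(c_eff: float, voltage: float, activity: float = 1.0) -> float:
    """Energy per clock cycle, P / f = activity * C_eff * V^2 (frequency cancels out)."""
    return activity * c_eff * voltage ** 2

# Normalized, hypothetical chip: 1.0 V at 2.0 GHz versus a DVFS point at 0.9 V and 1.8 GHz.
power_ratio = dynamic_power(1.0, 0.9, 1.8) / dynamic_power(1.0, 1.0, 2.0)   # about 0.729
energy_ratio = energy_per_cycle(1.0, 0.9) / energy_per_cycle(1.0, 1.0)      # about 0.81
print(power_ratio, energy_ratio)
```

The energy saving is smaller than the power saving because the work also takes longer at the lower clock; the V² term is what makes the trade worthwhile.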

Evolving Software Ecosystems and Abstraction Layers

Robust software platforms are indispensable for realizing the full potential of advanced GPU hardware. Ecosystems such as NVIDIA CUDA, AMD ROCm, and Intel oneAPI provide the essential tools, libraries, and frameworks that let developers use the hardware efficiently. Ongoing software development efforts include:

- Optimization: Continuously fine-tuning libraries and compilers to extract maximum performance from new hardware.

- Cross-Platform Support: Creating more open, interoperable software stacks to reduce vendor lock-in.

- AI-Based Automation: Using AI to optimize resource allocation, scheduling, and model deployment on complex heterogeneous systems.
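Much of the “optimization” work above is automated search: libraries and compilers try several configurations of a kernel (tile sizes, block sizes, and so on) on the actual hardware and keep the fastest. The sketch below shows that autotuning loop on a toy CPU workload; `blocked_sum` is a hypothetical stand-in for a GPU kernel, and the winning block size will vary by machine.

```python
import time

def blocked_sum(data, block):
    """Toy 'kernel': sum data in chunks of `block` elements."""
    return sum(sum(data[i:i + block]) for i in range(0, len(data), block))

def autotune(kernel, data, candidates, repeats=3):
    """Time each candidate configuration and return (fastest config, seconds per run)."""
    best, best_time = None, float("inf")
    for cfg in candidates:
        start = time.perf_counter()
        for _ in range(repeats):
            kernel(data, cfg)
        elapsed = (time.perf_counter() - start) / repeats
        if elapsed < best_time:
            best, best_time = cfg, elapsed
    return best, best_time

data = list(range(100_000))
best_block, seconds = autotune(blocked_sum, data, candidates=[64, 256, 1024, 4096])
print(f"fastest block size: {best_block} ({seconds * 1e3:.2f} ms per run)")
```

Every candidate must compute the same result; only speed differs, which is what makes this kind of search safe to automate.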

Ultimately, these software layers abstract away hardware complexity, letting AI researchers and developers focus on model innovation instead of low-level programming. This significantly accelerates AI development.

Addressing the Challenges in Advanced AI Acceleration

Despite rapid advancements, AI acceleration still faces significant hurdles on the path to seamless, ubiquitous AI. Overcoming them requires hardware manufacturers, software developers, and cloud providers to collaborate effectively, especially as AI Acceleration GPU Architectures keep evolving.

Managing Heterogeneous GPU Architectures

GPU fleets are diverse, spanning different generations and vendors (e.g., NVIDIA H100s, A100s, and RTX 4090s, AMD MI350s, and Intel Gaudi 3s). This creates significant management complexity for developers and administrators, who must contend with varied programming models, memory configurations, and performance characteristics across AI hardware platforms. The complexity leads to challenges in:

- Compilation: Optimizing AI models for specific architectures, which often requires specialized compilers and libraries.

- Scheduling: Efficiently allocating diverse GPU resources within a cluster.

- Resource Maximization: Fully utilizing each GPU and preventing idling due to compatibility or inefficient task distribution.
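The scheduling challenge can be sketched as a greedy placement problem. In the example below, the devices, their memory sizes, and their “relative speed” scores are all hypothetical. Jobs are placed largest first, each on the device that has enough memory and would finish it earliest. Real cluster schedulers weigh many more factors (interconnect topology, preemption, fairness), so treat this as an illustration of the trade-off, not a production policy.

```python
from dataclasses import dataclass

@dataclass
class Device:
    name: str
    memory_gb: float
    relative_speed: float   # hypothetical throughput score; higher is faster
    busy_until: float = 0.0

@dataclass
class Job:
    name: str
    memory_gb: float
    work: float             # abstract units; runtime = work / relative_speed

def schedule(jobs, devices):
    """Greedy: largest jobs first; each goes to the device that fits it and finishes it earliest."""
    plan = {}
    for job in sorted(jobs, key=lambda j: j.work, reverse=True):
        candidates = [d for d in devices if d.memory_gb >= job.memory_gb]
        if not candidates:
            raise ValueError(f"{job.name} does not fit on any device")
        best = min(candidates, key=lambda d: d.busy_until + job.work / d.relative_speed)
        best.busy_until += job.work / best.relative_speed
        plan[job.name] = best.name
    return plan

devices = [Device("big-gpu", 80, 3.0), Device("small-gpu", 24, 1.0)]
jobs = [Job("train", 60, 30), Job("tune", 20, 6), Job("infer", 10, 3)]
print(schedule(jobs, devices))  # {'train': 'big-gpu', 'tune': 'small-gpu', 'infer': 'small-gpu'}
```

The slower card still earns its keep: once the fast device is committed to the large training job, the smaller jobs finish sooner on the idle device.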

These factors increase deployment costs and prolong development cycles, creating demand for more flexible, unified software layers.

Network Bandwidth and Latency for Scalable AI Clusters

AI models are increasingly massive, requiring hundreds or even thousands of GPUs working together. The network connecting those GPUs therefore becomes a critical bottleneck: its bandwidth and latency limit how far an AI cluster can scale. If data reaches the GPUs inefficiently, compute units sit idle and valuable processing power is wasted. This is especially true for distributed training of large models, where frequent parameter synchronization demands extremely low-latency, high-bandwidth interconnects. Technologies like NVLink and InfiniBand are essential, yet even they are strained by the scale of modern AI workloads, so continued innovation in network fabrics and protocols is crucial.
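The synchronization cost can be estimated with the classic ring all-reduce model, a common way to average gradients across GPUs. Each of the 2(N−1) steps sends a 1/N slice of the data over every link, so total time is 2(N−1) × (per-step latency + slice size / link bandwidth). The gradient size, link speed, and latency below are hypothetical.

```python
def ring_allreduce_seconds(message_bytes: float, n_gpus: int, link_gb_per_s: float,
                           step_latency_s: float = 0.0) -> float:
    """Ring all-reduce cost model (reduce-scatter then all-gather): 2(N-1) steps,
    each moving message_bytes / N over every link."""
    if n_gpus < 2:
        return 0.0
    steps = 2 * (n_gpus - 1)
    chunk_bytes = message_bytes / n_gpus
    return steps * (step_latency_s + chunk_bytes / (link_gb_per_s * 1e9))

# Hypothetical: 1 GB of gradients, 100 GB/s per link, 5 microseconds of latency per step.
for n in (8, 64, 1024):
    print(n, ring_allreduce_seconds(1e9, n, 100, step_latency_s=5e-6))
```

The bandwidth term levels off near 2 × size / bandwidth (20 ms here) no matter how many GPUs join, but the latency term grows linearly with N. At 1,024 GPUs, latency alone adds about 10 ms per synchronization, which is why low latency matters as much as raw bandwidth at scale.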

The Sheer Demand for Memory and Bandwidth in Generative AI

Generative AI models, and especially Large Language Models (LLMs) with billions or even trillions of parameters, demand immense memory and bandwidth. They need vast memory to store their parameters and extremely high bandwidth to load and process those parameters during training and inference, which intensifies the “memory wall.” This underscores the need for continued memory innovation, including higher HBM densities and faster memory interfaces. Novel in-memory computing paradigms, where processing occurs directly within or near the memory modules, could also be explored to significantly reduce data movement.
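For LLM inference, bandwidth sets a hard ceiling. When generating one token at a time (batch size 1), every model weight must typically be read from memory once per token, so tokens per second cannot exceed bandwidth divided by the size of the weights. The sketch below applies that bound to a hypothetical 70-billion-parameter model on a GPU with 8 TB/s of bandwidth. It ignores KV-cache reads, caching, and compute limits, so real throughput is lower.

```python
def decode_tokens_per_s_bound(params_billions: float, bytes_per_param: float,
                              bandwidth_tb_per_s: float) -> float:
    """Upper bound on batch-1 decoding speed if each token streams all weights from memory once."""
    weight_bytes = params_billions * 1e9 * bytes_per_param
    return bandwidth_tb_per_s * 1e12 / weight_bytes

# Hypothetical 70B-parameter model, 8 TB/s of memory bandwidth.
for name, nbytes in (("fp16", 2), ("fp8", 1), ("fp4", 0.5)):
    print(name, round(decode_tokens_per_s_bound(70, nbytes, 8), 1))
```

Halving the bytes per parameter doubles the ceiling, which is why higher bandwidth and lower-precision weights both translate directly into faster generation.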

Performance Metrics and Market Outlook

Architectural advances dramatically affect performance metrics and shape market projections for AI Acceleration GPU Architectures.

NVIDIA’s H100 GPU, for example, delivers up to 6x faster AI training than its A100 predecessor, and cloud offerings built on it, such as Azure ND H100 v5 VMs, show up to a 2x speedup in LLM inference.

Meanwhile, AMD’s MI350 series claims a 4x generational increase in AI compute over its MI300-series predecessor, alongside a 35x leap in inferencing performance. On the client side, AMD’s RDNA 4 architecture delivers up to 8x higher integer and 4x higher FP16 throughput per cycle with sparsity. Intel’s Gaudi 3 is a formidable contender: Intel says it trains certain AI models 1.7x faster than the H100 and is 1.5x more efficient at running certain workloads. These figures underscore fierce competition and rapid innovation in deep learning hardware.

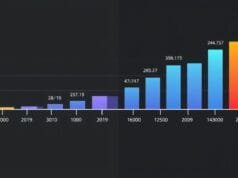

General industry projections are overwhelmingly positive. Some forecasts suggested that AI accelerators could deliver a 300% performance improvement over traditional GPUs by 2025. This rapid escalation fuels strong market growth: one estimate valued the global GPU industry at $37.02 billion in 2022 and projected a 12% Compound Annual Growth Rate (CAGR) from 2023 to 2030, driven by pervasive AI adoption across industries.

Conclusion

The AI acceleration revolution is tightly linked to innovation in AI Acceleration GPU Architectures. NVIDIA, AMD, and Intel are pushing boundaries by integrating specialized processing units such as Tensor Cores, Matrix Cores, and XMX engines, together with advanced memory subsystems like HBM and high-speed interconnects like NVLink. Their software ecosystems (CUDA, ROCm, and oneAPI) act as force multipliers, enabling developers to harness this immense computational power.

NVIDIA leads with its established platforms, but AMD is rapidly gaining ground with powerful new architectures and an open ecosystem, and Intel is making significant strides with a portfolio of dedicated AI accelerators and integrated solutions. The collective trend is toward increasingly powerful, energy-efficient, and versatile GPUs supported by sophisticated software layers. Addressing challenges like heterogeneity, network scalability, and memory demands remains vital. As AI models grow in complexity and scale, new AI Acceleration GPU Architectures will remain at the forefront, fueling the next generation of AI innovation and transforming virtually every sector of the global economy.