Real-time ray tracing is transforming computer graphics. The technique simulates the physical behavior of light to produce strikingly realistic images. Once a niche tool for high-budget films, it has entered the mainstream and is reshaping interactive 3D graphics, particularly in video games, while finding applications across other industries. This article traces its journey from historical roots to recent AI and hardware innovations, then examines its impact and likely future.

The quest to render light and shadow realistically in art and design dates back centuries. Albrecht Dürer’s 16th-century work on perspective, for instance, explored how light rays interact with a viewing plane to form an image, providing a conceptual blueprint for later developments. The ambition of simulating light computationally, however, only took shape with the advent of computers.

The computational application of ray tracing began in 1968, when Arthur Appel published a seminal paper on shading machine renderings. It introduced the core concept of tracing lines from a viewpoint through pixels to determine surface properties. This was a crucial first step, but the method lacked the sophistication needed for genuine realism until later advances emerged.

A significant breakthrough came in 1980, when Turner Whitted introduced recursive ray tracing. His algorithm enabled the realistic simulation of complex optical phenomena, including accurate reflections, refractions, and shadows, which had previously been difficult or impossible to achieve computationally. Whitted’s method kept tracing beyond the first object hit, generating new rays towards light sources for shadows and new rays towards other objects for reflections and refractions. This recursive approach delivered an unprecedented level of visual fidelity and foreshadowed modern photorealistic rendering.
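
The recursive idea can be sketched in a few lines. The following is a minimal illustration rather than Whitted’s original formulation: it assumes a scene made only of spheres, a single point light, grayscale brightness, and reflection without refraction. The dictionary keys (`center`, `radius`, `albedo`, `reflectivity`) and all function names are hypothetical choices made for this example.

```python
import math

def add(a, b):
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])

def sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])

def scale(a, s):
    return (a[0] * s, a[1] * s, a[2] * s)

def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def hit_sphere(origin, direction, center, radius):
    """Nearest hit distance along a unit-length ray, or None on a miss."""
    oc = sub(origin, center)
    b = dot(oc, direction)
    disc = b * b - (dot(oc, oc) - radius * radius)
    if disc < 0.0:
        return None
    root = math.sqrt(disc)
    for t in (-b - root, -b + root):
        if t > 1e-4:  # small offset avoids re-hitting the starting surface
            return t
    return None

def nearest_hit(origin, direction, spheres):
    """Return (distance, sphere) for the closest sphere hit, or None."""
    best = None
    for sphere in spheres:
        t = hit_sphere(origin, direction, sphere["center"], sphere["radius"])
        if t is not None and (best is None or t < best[0]):
            best = (t, sphere)
    return best

def trace(origin, direction, spheres, light_pos, depth=0, max_depth=3):
    """Brightness seen along a ray: direct light plus recursive reflection."""
    hit = nearest_hit(origin, direction, spheres)
    if hit is None:
        return 0.0  # black background
    t, sphere = hit
    point = add(origin, scale(direction, t))
    normal = scale(sub(point, sphere["center"]), 1.0 / sphere["radius"])

    # Shadow ray: is anything between the hit point and the light?
    to_light = sub(light_pos, point)
    distance = math.sqrt(dot(to_light, to_light))
    light_dir = scale(to_light, 1.0 / distance)
    blocker = nearest_hit(point, light_dir, spheres)
    lit = blocker is None or blocker[0] > distance
    brightness = sphere["albedo"] * max(0.0, dot(normal, light_dir)) if lit else 0.0

    # Reflection ray: recurse to find what the surface mirrors.
    k = sphere["reflectivity"]
    if k > 0.0 and depth < max_depth:
        mirrored = sub(direction, scale(normal, 2.0 * dot(direction, normal)))
        reflected = trace(point, mirrored, spheres, light_pos, depth + 1, max_depth)
        brightness = (1.0 - k) * brightness + k * reflected
    return brightness
```

The `max_depth` limit is what keeps the recursion finite when two mirrors face each other; production renderers use similar cutoffs.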

Early Challenges and the Foundation for Real-time Ray Tracing Breakthroughs

For decades after Whitted’s innovations, ray tracing remained largely confined to offline rendering. The immense computational power required to simulate millions, or even billions, of light rays for a single image meant rendering times could stretch to days or even weeks. This processing bottleneck kept the technique out of real-time applications.

Film and television visual effects consequently became its primary domain. With generous budgets and longer timelines, these sectors could accommodate extensive processing. Companies such as Pixar and Industrial Light & Magic used ray tracing to create realistic scenes for blockbuster movies, prioritizing visual quality over rendering speed long before GPU advances made interactivity possible.

Hardware Acceleration Makes Real-time Ray Tracing Possible

The dream of interactive, real-time ray tracing became a reality around 2018. The shift was driven by dedicated hardware acceleration in commercial graphics cards, which fundamentally changed the landscape of computer graphics.

NVIDIA’s Turing architecture marked a pivotal moment. Introduced with the GeForce RTX series GPUs, it added specialized “RT Cores.” These dedicated processing units handle the most intensive ray tracing calculations, specifically bounding volume hierarchy (BVH) traversal and ray-object intersection tests.

Bounding Volume Hierarchies (BVHs) are the crucial data structure here. They organize the objects in a 3D scene like nested boxes, ranging from large containers down to individual items. When a ray enters the scene, RT Cores traverse the hierarchy and skip every box the ray misses, along with everything inside it. Only objects within the boxes the ray actually intersects are checked, which greatly reduces the number of intersection tests.
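
To make the nested-box idea concrete, here is a small software sketch of BVH traversal. It is an illustration only: RT Cores implement this in fixed-function hardware, and the `Node` layout, the function names, and the tiny scene in the usage note are assumptions made for the example.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

Vec3 = Tuple[float, float, float]

@dataclass
class Node:
    lo: Vec3                      # minimum corner of the bounding box
    hi: Vec3                      # maximum corner of the bounding box
    children: List["Node"] = field(default_factory=list)
    item: Optional[str] = None    # set on leaves only

def ray_hits_box(origin, direction, lo, hi):
    """Slab test: does the ray (t >= 0) pass through the axis-aligned box?"""
    t_near, t_far = 0.0, float("inf")
    for o, d, a, b in zip(origin, direction, lo, hi):
        if d == 0.0:
            # Ray is parallel to this pair of planes: it must start between them.
            if o < a or o > b:
                return False
            continue
        t0, t1 = (a - o) / d, (b - o) / d
        if t0 > t1:
            t0, t1 = t1, t0
        t_near, t_far = max(t_near, t0), min(t_far, t1)
        if t_near > t_far:
            return False
    return True

def candidates(node, origin, direction, stats):
    """Collect leaf items whose boxes the ray enters, skipping missed subtrees."""
    stats["boxes_tested"] += 1
    if not ray_hits_box(origin, direction, node.lo, node.hi):
        return []
    if node.item is not None:
        return [node.item]
    found = []
    for child in node.children:
        found.extend(candidates(child, origin, direction, stats))
    return found
```

In a hypothetical scene with two groups of two objects each, a ray that misses one group's box never touches the objects inside it. The saving grows with scene size, because each skipped box can hide an entire subtree of objects.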

RT Cores also perform the precise ray-object intersection tests that pinpoint where a light ray strikes a surface. Offloading these complex, repetitive computations to dedicated hardware frees the main GPU shaders for other rendering tasks. This division of labor is what finally enabled interactive ray tracing on consumer-grade hardware.
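
For triangle meshes, a widely used software formulation of this test is the Möller–Trumbore algorithm. The sketch below is a plain-Python illustration of that algorithm, not a description of how any vendor's hardware implements it; the function and helper names are chosen for this example.

```python
def sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])

def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def cross(a, b):
    return (
        a[1] * b[2] - a[2] * b[1],
        a[2] * b[0] - a[0] * b[2],
        a[0] * b[1] - a[1] * b[0],
    )

def ray_triangle(origin, direction, v0, v1, v2, eps=1e-9):
    """Return the distance t along the ray to the hit point, or None on a miss."""
    edge1 = sub(v1, v0)
    edge2 = sub(v2, v0)
    p = cross(direction, edge2)
    det = dot(edge1, p)
    if abs(det) < eps:
        return None  # ray is parallel to the triangle's plane
    inv_det = 1.0 / det
    s = sub(origin, v0)
    u = dot(s, p) * inv_det          # first barycentric coordinate
    if u < 0.0 or u > 1.0:
        return None
    q = cross(s, edge1)
    v = dot(direction, q) * inv_det  # second barycentric coordinate
    if v < 0.0 or u + v > 1.0:
        return None
    t = dot(edge2, q) * inv_det
    return t if t > eps else None    # ignore hits behind the ray origin
```

The barycentric coordinates `u` and `v` computed along the way are also what a renderer would use to interpolate normals or texture coordinates at the hit point.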

Adoption Across GPU Vendors and Platforms

Following NVIDIA’s lead, other major semiconductor players quickly integrated hardware-accelerated ray tracing. AMD incorporated the technology into its RDNA 2 and RDNA 3 architectures, which power its Radeon RX series GPUs, and RDNA 2 also brought it to consoles such as the PlayStation 5 and Xbox Series X/S. Intel likewise equipped its Arc series graphics cards with hardware ray tracing support.

Beyond desktop and console, Arm and Qualcomm have been developing ray tracing solutions for their mobile GPU architectures. This reflects an industry-wide push to make the technology available across a much broader range of devices, from high-end PCs to smartphones.

While hardware acceleration provides the raw power for real-time ray tracing, software advances and smart algorithms are equally vital. They work alongside the hardware to boost efficiency and improve visual quality, keeping the computational demands manageable so that ray tracing can be integrated without sacrificing performance.

Acceleration Structures, Denoising, and Upscaling

A cornerstone of software optimization is the refinement of spatial data structures. BVHs remain essential because they organize complex 3D scenes so that a ray tracer can quickly narrow down which objects a ray might hit, significantly reducing calculations. BVHs and alternative structures such as k-d trees both serve this purpose: accelerating the ray-object intersection phase.

More recently, Artificial Intelligence (AI) and Machine Learning (ML) have delivered impactful advances. Even with dedicated hardware, ray tracing demands immense computation to produce clean images. Tracing too few rays yields noisy visuals, while tracing enough rays for a clean image is too slow for real time. Two families of techniques close this gap. Denoisers, increasingly AI-driven, reconstruct a clean image from a sparse, noisy set of samples. Upscalers, such as NVIDIA’s AI-based Deep Learning Super Sampling (DLSS) and AMD’s FidelityFX Super Resolution (FSR), render at a lower resolution and reconstruct a higher-resolution frame, which cuts the number of rays traced per frame. Together they make real-time ray tracing far more practical and performant.
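
The trade-off between ray count and noise can be demonstrated with a toy experiment. The sketch below does not implement a denoiser; it only shows the problem denoisers address. It assumes a hypothetical pixel where each random ray reaches the light with a fixed probability, and measures how much the estimated brightness varies from pixel to pixel at different sample counts.

```python
import random
import statistics

def render_pixel(samples, rng, visibility=0.3):
    """Average `samples` random rays; each reaches the light with probability `visibility`."""
    hits = sum(1 for _ in range(samples) if rng.random() < visibility)
    return hits / samples

def noise_level(samples, pixels=2000, seed=0):
    """Standard deviation of brightness across pixels that should all look identical."""
    rng = random.Random(seed)
    values = [render_pixel(samples, rng) for _ in range(pixels)]
    return statistics.pstdev(values)

for spp in (1, 4, 16, 64):
    print(f"{spp:>3} samples per pixel -> noise {noise_level(spp):.3f}")
```

Monte Carlo noise falls roughly with the square root of the sample count, so halving the noise costs about four times as many rays. That steep cost is why reconstructing a clean image from few samples is so attractive for real-time use.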

Graphics APIs and Game Engine Support

The standardization of graphics APIs has also made ray tracing more accessible to developers. Microsoft’s DirectX Raytracing (DXR), for example, offers a framework for integrating ray tracing into Windows applications and games. Similarly, the built-in ray tracing capabilities of major game engines such as Unreal Engine and Unity provide high-level tools and abstractions, so developers can harness ray tracing without writing complex low-level code. This accessibility has significantly accelerated adoption and innovation throughout the industry.

The Distinctive Power of Interactive Light Rendering

Ray tracing improves visual realism by simulating how light physically behaves. Traditional rasterization, by contrast, relies on approximations such as shadow maps and screen-space reflections. For decades, rasterization has efficiently projected 3D models onto a 2D screen, but its tricks often lack physical accuracy, which can lead to visual artifacts, unrealistic lighting, and environments that respond poorly to change. Ray tracing overcomes many of these limitations, which explains the excitement around it across industries.

At its core, ray tracing employs a fundamentally different principle: it simulates how individual light rays interact within a scene. Imagine rays cast from a virtual camera, one or more through each pixel, traveling into the 3D environment. At each surface they strike, light may be absorbed, reflected, or refracted. This physically based simulation unlocks a range of dynamic and accurate optical effects.
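
The first step, casting a ray from the camera through a pixel, can be sketched as follows. This is a minimal example under stated assumptions: a pinhole camera at the origin looking down the negative Z axis with Y pointing up, and a hypothetical `fov_deg` parameter for the vertical field of view.

```python
import math

def primary_ray_direction(px, py, width, height, fov_deg=60.0):
    """Unit direction of the ray through the centre of pixel (px, py).

    Pixel (0, 0) is the top-left corner of the image.
    """
    aspect = width / height
    half_height = math.tan(math.radians(fov_deg) / 2.0)
    # Map the pixel centre to the range [-1, 1] on both axes.
    ndc_x = (px + 0.5) / width * 2.0 - 1.0
    ndc_y = 1.0 - (py + 0.5) / height * 2.0
    x = ndc_x * aspect * half_height
    y = ndc_y * half_height
    z = -1.0
    length = math.sqrt(x * x + y * y + z * z)
    return (x / length, y / length, z / length)
```

A renderer would call this once (or several times with jittered offsets) per pixel and pass the resulting direction to its intersection and shading routines.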

Reflections, Shadows, and Global Illumination

Ray tracing delivers lifelike reflections and refractions that mirror their surroundings precisely. Polished floors, metallic surfaces, and even puddles reflect the environment dynamically and in detail, while transparent materials such as glass or ice refract and distort light, producing convincing optical effects and caustics. Ray tracing also generates soft, accurate shadows naturally. Their softness depends on the size of the light source and on the distances between light, occluder, and receiving surface, and it updates as the scene changes, unlike older precomputed methods.
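
Soft shadows fall out of the method almost for free: instead of one shadow ray to a point light, several rays are sent to different positions on an area light, and the fraction that arrive determines how bright the point is. The sketch below uses a deliberately simplified, hypothetical 2D scene: a floor along y = 0, a linear light centred above x = 0, and an opaque blocker covering everything at x <= 0 at a fixed height.

```python
def light_visibility(px, light_half_width, samples=64,
                     light_height=4.0, blocker_height=2.0):
    """Fraction of a linear light visible from the floor point (px, 0)."""
    visible = 0
    for i in range(samples):
        # Evenly spaced sample positions across the width of the light.
        lx = -light_half_width + (i + 0.5) / samples * 2.0 * light_half_width
        # x position where the shadow ray passes the blocker's height.
        t = blocker_height / light_height
        crossing_x = px + (lx - px) * t
        if crossing_x > 0.0:  # the blocker only covers x <= 0
            visible += 1
    return visible / samples

for x in (-2.0, -0.5, 0.0, 0.5, 2.0):
    print(f"x = {x:+.1f}: wide light {light_visibility(x, 1.0):.2f}, "
          f"tiny light {light_visibility(x, 0.0):.2f}")
```

With the wide light, brightness ramps gradually from 0 to 1 across the penumbra; with a light of zero width, it jumps straight from 0 to 1, giving a hard edge. Changing `blocker_height` shifts the width of that ramp, which is the distance dependence described above.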

Global Illumination (GI), the simulation of indirect lighting, stands out as one of the most impactful capabilities. It captures how light bounces off one surface to illuminate others; for example, a vibrant red wall casts a subtle red tint onto an adjacent white wall. This indirect lighting dramatically enhances a scene’s ambiance and realism. By replacing simplistic approximations with direct simulation of light transport, ray tracing creates environments where light behaves authentically, contributing to a richer, more believable visual experience.
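
The red-wall example can be worked through numerically. The sketch below is a one-bounce estimate with made-up values: the albedos and the `coupling` factor (the hypothetical fraction of light leaving the red wall that reaches the white wall) are assumptions chosen only to show the effect.

```python
def shade_white_wall(direct, red_wall_albedo, white_wall_albedo, coupling):
    """One-bounce RGB estimate of the light leaving the white wall."""
    # Light that hits the red wall, is tinted by it, and travels to the white wall.
    bounced = tuple(d * a * coupling for d, a in zip(direct, red_wall_albedo))
    # The white wall reflects both its direct light and the bounced light.
    return tuple((d + b) * w for d, b, w in zip(direct, bounced, white_wall_albedo))

white_light = (1.0, 1.0, 1.0)
red_wall = (0.9, 0.1, 0.1)
white_wall = (0.8, 0.8, 0.8)

print(shade_white_wall(white_light, red_wall, white_wall, coupling=0.0))
print(shade_white_wall(white_light, red_wall, white_wall, coupling=0.2))
```

With no coupling, the white wall comes out a neutral gray. With coupling, its red channel rises above green and blue, which is the color bleeding described above. A full GI solution repeats this kind of bounce many times over many ray directions.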

Applications Across Industries

The visual fidelity offered by real-time ray tracing is not merely an aesthetic upgrade; it is a capability with consequences across multiple sectors. Its ability to render light accurately is redefining expectations and unlocking new possibilities in both professional and entertainment domains.

Gaming: Leading the Adoption

Arguably no industry has embraced and showcased real-time ray tracing quite like gaming. The technology has raised the bar for realism, offering more immersive experiences with dynamic and accurate lighting, reflections, and shadows. Games such as Cyberpunk 2077, Control, Metro Exodus Enhanced Edition, and Spider-Man: Miles Morales use ray tracing to create worlds that feel more alive and responsive to light. From wet city streets reflecting neon signs to subtle indoor lighting, it adds depth and atmosphere that were previously hard to achieve.

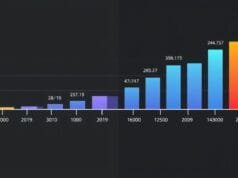

Adoption figures illustrate the shift. NVIDIA has reported a substantial increase in ray tracing enablement among its users: 83% of RTX 40 series users enabled ray tracing, up from 37% of RTX 20 series users in 2018. The trend points to ray tracing becoming a standard feature in mainstream gaming.

Film and Visual Effects (VFX): Accelerating Creativity

While the film and VFX industries have long relied on ray tracing for offline rendering, real-time capabilities are now streamlining production workflows. Filmmakers and VFX artists can use real-time ray tracing for on-set visualization and pre-visualization, seeing high-fidelity renders of digital assets integrated into live-action footage almost immediately. Instead of waiting hours or days for test renders, they can iterate quickly, make better-informed creative decisions, and shorten production cycles. This agility lets artists experiment more freely with lighting and scene composition.

Architecture and Product Design: Real-time Photorealism

For architects and product designers, ray tracing is a game-changer. They can now create photorealistic renders of their projects quickly and accurately, visualizing buildings, interiors, and products in detail and showing how light interacts with materials, textures, and spatial arrangements. More importantly, real-time ray tracing enables interactive walkthroughs. Clients can virtually explore a proposed building or examine a product from all angles, with a level of realism that static images or traditional renders cannot provide. This improves client communication and decision-making and reduces the need for costly physical prototypes or redesigns.

Other Applications: Beyond the Visual Spectrum

The principles of ray tracing extend beyond visual rendering, and its simulation capabilities are being explored for other applications. One example is immersive sound design. By simulating the paths of sound waves within a virtual environment, ray tracing can model how sound bounces, attenuates, and reverberates. This can create more realistic and spatially accurate audio, enhancing immersion in virtual reality, games, and architectural acoustics. The method also holds potential in fields from scientific visualization to advanced simulation, wherever paths through a complex environment must be tracked accurately.

Challenges and The Road Ahead: Pushing the Boundaries of Light

For all its visual fidelity, real-time ray tracing still faces significant challenges, which researchers and engineers are actively working to overcome. Foremost is its computational cost. Even with specialized hardware such as RT Cores and advanced software optimizations, real-time implementation remains exceptionally demanding, and enabling comprehensive ray tracing can roughly halve performance compared to traditional rasterization. This overhead calls for expensive, powerful hardware, which restricts access. Power consumption is another major concern, as high-performance GPUs require substantial energy.

That energy demand affects large-scale render farms and reduces battery life on mobile devices. The industry also faces several computational bottlenecks; shader complexity and memory bandwidth, for example, often limit the rendering pipeline even when BVH traversal is accelerated. Recurring supply constraints for high-end GPUs further hinder broader adoption. And while DXR and Vulkan Ray Tracing help mitigate API fragmentation, developers must still optimize for diverse hardware, which adds development effort.

Advancing Ray Tracing Capabilities

Despite current hurdles, ray tracing’s future looks promising. Anticipated hardware advances, including more powerful GPUs and specialized chips, should improve efficiency and performance. AI integration is likely to deepen, with advanced denoising and neural rendering techniques reducing the number of rays needed while maintaining visual fidelity. Software standardization should also continue to evolve, simplifying implementation and increasing accessibility. The most immediate and significant trend, however, is the widespread adoption of hybrid rendering models. These combine rasterization’s efficiency with ray tracing’s accuracy for specific effects; for example, rasterization handles primary visibility of the geometry, while ray tracing handles global illumination or reflections. This pragmatic approach balances visual fidelity against performance.
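
One way a hybrid renderer might decide where to spend rays is sketched below. This is an illustrative assumption, not a description of any particular engine: the raster pass is imagined to produce a per-pixel record (a simplified "G-buffer") with hypothetical `covered` and `roughness` fields, and reflection rays are traced only for pixels whose surface is smooth enough to show a clear reflection.

```python
def plan_reflection_rays(gbuffer, max_roughness=0.3):
    """Return indices of rasterized pixels that warrant a traced reflection ray."""
    return [
        index
        for index, pixel in enumerate(gbuffer)
        if pixel["covered"] and pixel["roughness"] <= max_roughness
    ]

# Hypothetical output of a raster pass for four pixels.
gbuffer = [
    {"covered": True, "roughness": 0.05},   # polished floor
    {"covered": True, "roughness": 0.80},   # rough concrete
    {"covered": False, "roughness": 0.0},   # sky, no geometry
    {"covered": True, "roughness": 0.20},   # wet asphalt
]
traced = plan_reflection_rays(gbuffer)
print(f"tracing reflections for {len(traced)} of {len(gbuffer)} pixels: {traced}")
```

Here only two of the four pixels receive reflection rays; the rest keep their rasterized shading, which is how the hybrid approach keeps the ray budget small.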