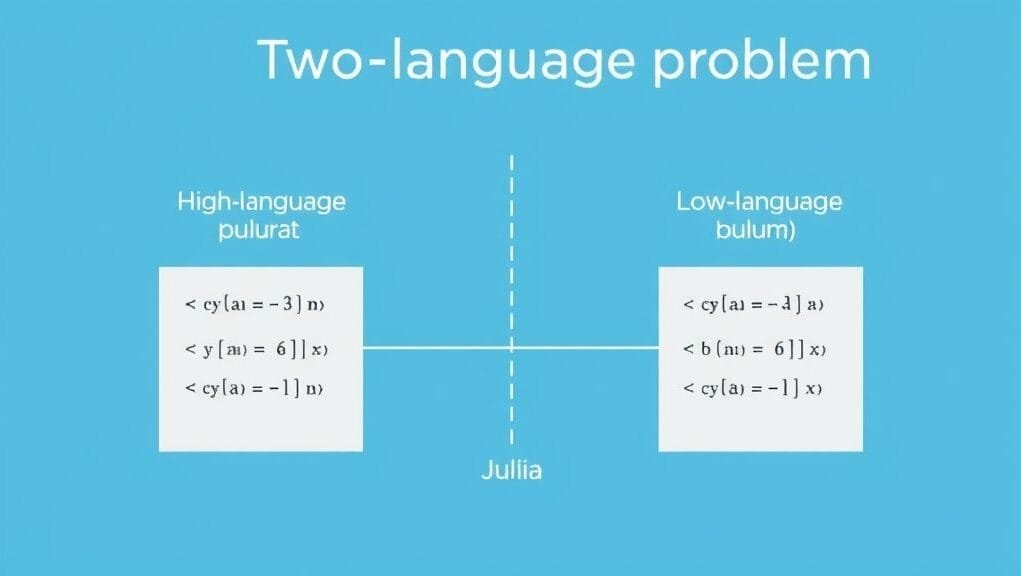

Have you ever felt caught between two worlds? On one side, you have easy-to-use languages like Python and R, which are excellent for quickly trying out ideas and analyzing data. On the other side are very fast languages like C and Fortran, essential for large-scale calculations in complex simulations. For years, scientists and engineers faced a tough dilemma: either sacrificing speed for ease of use, or spending many hours rewriting performance-critical code in a harder language. This fundamental challenge, known as the “two-language problem,” has long hindered scientific computing.

But what if there were a better way? Imagine a programming language that offers the best of both worlds: combining the blazing speed of compiled code with the intuitive design and rapid development of a high-level language. That’s precisely the promise of Julia. It’s not just any programming language; it’s a carefully crafted tool, designed from scratch to change how we tackle complex scientific problems, machine learning, and data analysis. If you’re facing slow computations or simply seeking a more efficient approach, understanding how Julia approaches scientific computing could significantly benefit your work.

Bridging the Divide: Why Julia Matters for Scientific Computing

For decades, the conventional approach in scientific research involved initially developing algorithms in a flexible, high-level language. Languages such as Python, R, or MATLAB are highly expressive, enabling researchers to prototype and test ideas rapidly. They also offer robust libraries and straightforward syntax, facilitating discovery. However, when these initial implementations had to handle vast amounts of data or run large-scale simulations, their performance often became a significant bottleneck.

This is precisely where the “two-language problem” emerged. To achieve the necessary speed, scientists would then meticulously translate their performance-critical code into a compiled language like C or Fortran. This process not only consumed considerable time and introduced errors; it also created a disconnect between code development and deployment. Moreover, it often required a distinct set of skills, adding significant overhead. This consequently slowed down research and increased costs.

The Promise of One Language, Two Worlds for Julia Scientific Computing

Julia was created specifically to eliminate this difficult trade-off. Its creators envisioned a language that could serve as both a rapid development tool and a high-performance execution engine. They aimed to allow researchers to write their entire program, from initial conception to final deployment, in a single, consistent language. This vision directly addresses the shortcomings of the traditional two-language workflow.

By offering speed comparable to C and Fortran, while retaining the ease of use found in languages like Python, Julia allows you to focus on the science. You don’t need to switch between languages or coding styles, which simplifies your workflow. It also enables quicker development, as you can optimize your code for speed without a complete rewrite.

Unpacking Julia’s Speed Secret: Just-In-Time Compilation

At the heart of Julia’s impressive speed lies its approach to code execution: Just-In-Time (JIT) compilation. Interpreted languages translate code line by line as it runs, while ahead-of-time compiled languages build everything before running. Julia employs a hybrid. When you run a Julia program, the code isn’t transformed into a ready-to-run executable up front. Instead, each function is compiled into fast machine code at runtime, the first time it is invoked.

This dynamic compilation process is exceptionally powerful. In essence, it allows Julia to analyze the exact types of data your functions are handling. It then generates highly optimized machine code specifically for those data types. As a result, Julia programs can run almost at the speed of the underlying hardware, matching languages traditionally considered much quicker. This is particularly vital for Julia scientific computing, where complex simulations, numerical tasks, and large-scale data operations demand maximum speed.

The LLVM Advantage

Julia achieves its JIT compilation prowess through its reliance on LLVM. Essentially, LLVM is a collection of flexible and reusable compiler components and tools, serving as the core infrastructure that provides a robust system for generating fast machine code. When you run a Julia function for the first time with specific argument types, Julia’s LLVM-based compiler springs into action, transforming that exact function into highly optimized machine code.

This optimized code is then cached. Consequently, the next time you call the same function with identical argument types, Julia doesn’t need to recompile it; it simply executes the already optimized code. This caching mechanism is a primary reason Julia delivers consistent high performance after the initial compilation.
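The compile-then-cache behavior described above can be observed with a small timing experiment. This is a minimal sketch, not a rigorous benchmark: `sumsq` is a hypothetical example function, and the measured times will vary by machine and Julia version.

```julia
# Hypothetical example function: sum of squares over any collection of numbers.
function sumsq(xs)
    total = zero(eltype(xs))
    for x in xs
        total += x * x
    end
    return total
end

ints   = collect(1:1_000)   # Vector{Int}
floats = rand(1_000)        # Vector{Float64}

# First call with a Vector{Int}: the timing includes compilation for this type.
t_int_first  = @elapsed sumsq(ints)
# Second call with the same argument type: the cached machine code is reused.
t_int_second = @elapsed sumsq(ints)
# A different argument type triggers a separate specialization.
t_float_first = @elapsed sumsq(floats)

println("Vector{Int},     first call:  ", t_int_first, " s")
println("Vector{Int},     second call: ", t_int_second, " s")
println("Vector{Float64}, first call:  ", t_float_first, " s")
```

On a typical run the second call should be far quicker than the first, because only the first one pays for compilation.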

Beyond Initial Latency: Sustained Performance

One common concern raised about JIT-compiled languages, including Julia, is the phenomenon often referred to as “time to first plot” (TTFP) or “start-up delay.” This refers to the brief delay you might observe the very first time a new function or script is executed. During this initial run, the JIT compiler is actively working: it analyzes your code, then generates and caches the optimized machine code. As a result, the first call can feel slower than in an interpreted language, which starts instantly but executes more slowly.

However, it’s crucial to understand that this delay is a one-time cost for each function and combination of argument types within a given session. Once a function has been compiled and cached, subsequent calls to it are exceptionally fast. Therefore, for long-running simulations, repeated analyses, or programs where functions are invoked frequently, this initial latency effectively becomes negligible. The sustained, high-speed performance across numerous calls more than compensates for the initial compilation time, making Julia an excellent choice for computationally intensive tasks.

Crafted for Scientists: An Intuitive Ecosystem for Numerical Work

Julia was not an accidental creation; it was intentionally designed with a clear purpose and specific users in mind: numerical and scientific computing. This foundational design philosophy is evident in every aspect of the language, from its syntax to its expanding set of packages. If you’re a data scientist, physicist, financial analyst, or anyone working with mathematical models and data, you’ll find Julia’s approach remarkably intuitive. This makes Julia an exceptionally user-friendly environment for scientific computing.

The language’s syntax itself is clean, concise, and often closely mirrors mathematical notation. This makes it significantly easier for those with a strong mathematical background to learn and code rapidly. For example, operations like matrix multiplication look remarkably similar to how you’d write them on paper. This, in turn, reduces cognitive load and potential for errors. Ultimately, this focus on clarity and direct expression of mathematical concepts accelerates development for Julia scientific computing applications.

A Syntax That Speaks Your Language

Imagine writing an equation and having your code replicate it almost perfectly. Julia’s design facilitates this by allowing Unicode characters in code; specifically, you can use symbols like $\alpha$, $\beta$, and even $\pi$ directly in your code. This might seem like a minor detail, but for researchers who work with complex mathematical formulas daily, it greatly enhances readability. Furthermore, it minimizes errors when translating equations into plain text code.

Furthermore, Julia treats functions as first-class citizens and supports strong functional programming paradigms alongside imperative ones. This flexibility allows for writing highly clear and concise code for complex algorithms, which, in turn, further reduces boilerplate and accelerates development. Its array-centric design means that working with entire arrays is natural and fast, much like in MATLAB or NumPy.
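As a small illustration of the points above (Unicode identifiers, paper-like matrix notation, array-centric operations, and first-class functions), here is a minimal sketch. The variable names and values are arbitrary choices for the example.

```julia
# Unicode identifiers: in the Julia REPL, type \alpha<TAB> and \beta<TAB>.
α = 0.5
β = 2.0

# π is a built-in constant, and 2π means 2 * π.
circumference(r) = 2π * r

A = [1.0 2.0; 3.0 4.0]   # 2x2 matrix
x = [1.0, 1.0]           # vector

y = A * x                # matrix-vector product, written as on paper: [3.0, 7.0]
z = α .* y .+ β          # dotted operators work element-wise: [3.5, 5.5]

# Functions are first-class values: pass an anonymous function to map.
squares = map(v -> v^2, y)   # [9.0, 49.0]

println(y, " ", z, " ", squares, " ", circumference(1.0))
```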

The Robust Package Ecosystem for Julia Scientific Computing

Beyond its core language features, Julia boasts a rich and rapidly growing package ecosystem. These libraries are essentially designed to address common problems in scientific computing, data analysis, machine learning, and high-performance computing. This means you don’t have to start from scratch for standard tasks; chances are, a highly optimized and well-maintained package is already available for your use in Julia scientific computing.

Here are a few prominent examples:

- DifferentialEquations.jl: A comprehensive suite for solving ordinary differential equations (ODEs), stochastic differential equations (SDEs), and differential algebraic equations (DAEs). It offers high speed and flexibility, which is crucial for many areas of Julia scientific computing.

- JuMP.jl and Optimization.jl: Powerful tools for mathematical optimization, enabling users to define and solve various types of optimization problems efficiently.

- DataFrames.jl: Provides a robust and intuitive way to manipulate and analyze tabular data, similar to pandas in Python or data.frames in R.

- Flux.jl: A flexible and high-performance machine learning library, enabling users to build and train complex neural networks within Julia.

- LinearAlgebra and FFTW.jl: Core libraries for essential numerical routines. LinearAlgebra ships with Julia as a standard library, while FFTW.jl is an installable package. Both rely on highly optimized underlying C or Fortran libraries for peak performance.

These are just a few examples illustrating the breadth and depth of Julia’s package ecosystem. As the community grows, so does the availability of specialized tools, making Julia an increasingly appealing choice across many scientific fields.
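Most of the packages listed above must be installed through Julia’s package manager before use. To keep the example self-contained, the following sketch uses only the LinearAlgebra standard library, which ships with Julia. The matrix and right-hand side are arbitrary values chosen for illustration.

```julia
using LinearAlgebra   # standard library; no installation needed

A = [4.0 1.0;
     1.0 3.0]
b = [1.0, 2.0]

x = A \ b                    # solve the linear system A * x = b
residual = norm(A * x - b)   # should be close to zero

F = lu(A)                    # LU factorization, reusable for several right-hand sides
x2 = F \ b                   # same solution, computed from the stored factorization

println("x = ", x, ", residual = ", residual)
```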

[Figure: a network of interconnected packages, with arrows showing dependencies and collaborations among diverse scientific tools.]

Scaling New Heights: Parallelism and GPU Computing in Julia

Modern scientific problems often demand computational power far exceeding what a single processor can provide. Consider, for instance, running climate models or training massive machine learning models. For these tasks, distributing computations across multiple cores, machines, or specialized hardware like GPUs is paramount. Fortunately, Julia was designed with this need in mind, offering built-in tools for parallelism and GPU utilization that are both powerful and remarkably easy to use. This makes Julia scientific computing ideal for large-scale tasks.

This inherent capability for parallel execution, moreover, sets Julia apart from many other languages. You don’t need to learn entirely new paradigms or libraries just to leverage multiple cores or GPUs. Instead, Julia allows you to utilize these features directly within the language. This enables you to scale your computations with relatively little effort. Overall, this represents a significant advancement for large-scale data science and high-performance computing (HPC) tasks in Julia scientific computing.

Harnessing Multi-Core Power in Julia Scientific Computing

Julia provides straightforward tools for multi-threading and distributed computing. For example, you can execute loops concurrently, run tasks in parallel, and distribute computations over multiple processes or even different computers in a cluster. This allows you to fully utilize available CPU power, greatly accelerating tasks that can be broken down into smaller, independent parts. The language, furthermore, handles much of the underlying complexity, allowing you to focus on the logic of your parallel algorithm.

For example, performing a parallel map over a large dataset or running numerous independent simulations concurrently becomes significantly easier in Julia. Its design reduces the overhead often associated with managing parallel tasks, so that the extra effort yields genuine speedup rather than just more complex code.
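A multi-threaded loop over independent tasks might look like the following minimal sketch. `simulate` is a hypothetical stand-in for an expensive, independent computation. Julia has to be started with more than one thread (for example `julia --threads=4`) for the loop to actually run in parallel; with a single thread the code still runs, just serially.

```julia
using Base.Threads   # part of Julia itself; provides @threads and nthreads

# Hypothetical stand-in for an expensive, independent simulation run.
simulate(seed) = sum(sin(seed * k) for k in 1:10_000)

function run_all(n)
    results = Vector{Float64}(undef, n)
    # Each iteration writes only to its own slot, so threads never share an element.
    @threads for i in 1:n
        results[i] = simulate(i)
    end
    return results
end

results = run_all(100)
println("threads available: ", nthreads())
println("first result: ", results[1])
```

Writing each result to its own array slot is a deliberate choice here: it avoids data races without needing locks or atomics.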

Embracing the GPU Revolution

The advent of Graphics Processing Units (GPUs) has profoundly transformed scientific computing. They offer unrivaled processing power for highly parallelizable tasks. Julia, moreover, embraces this paradigm shift with first-class support for GPU computing. Libraries like CUDA.jl and AMDGPU.jl, for instance, allow you to write Julia code that runs directly on NVIDIA and AMD GPUs, respectively. This means you can leverage the immense number of cores on a GPU to significantly accelerate tasks like matrix operations, machine learning, and complex simulations. Ultimately, this makes Julia scientific computing exceptionally powerful.

The ability to move between CPU and GPU execution within the same language is powerful. For example, you can prototype on a CPU and then deploy on a GPU, without rewriting your entire codebase in a separate language like CUDA C++. This flexibility accelerates development and lets researchers push the boundaries of what their hardware can accomplish. On the large-scale side, the Celeste.jl project is a prime example: it achieved 1.5 PetaFLOP/s on a supercomputer by using Julia to process sky images, which shows that Julia can scale to very large HPC workloads.
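One way to prototype on the CPU with a GPU in mind is to write functions purely in terms of array broadcasting. The sketch below runs on ordinary CPU arrays. The commented-out lines show how the same function could be called on GPU arrays, under the assumption that CUDA.jl is installed and an NVIDIA GPU is available. `scale_add!` is a hypothetical helper name.

```julia
# Hypothetical helper: y <- a * x + y, expressed only through broadcasting,
# so it does not depend on where the arrays live.
function scale_add!(y, a, x)
    y .= a .* x .+ y
    return y
end

x = ones(Float32, 1_000)
y = fill(2.0f0, 1_000)

scale_add!(y, 3.0f0, x)   # runs on the CPU with ordinary Arrays
println(y[1])             # 3 * 1 + 2 = 5.0

# Assumption: with CUDA.jl installed and an NVIDIA GPU present, the same
# function could be applied to GPU arrays, for example:
#   using CUDA
#   xd = CuArray(x); yd = CuArray(y)
#   scale_add!(yd, 3.0f0, xd)
```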

Flexibility Through Design: Understanding Multiple Dispatch

One of Julia’s most elegant and powerful features is its multiple dispatch paradigm. If you’re accustomed to object-oriented programming with single dispatch (where the method called depends only on the type of the first argument, `self` or `this`), multiple dispatch might seem somewhat unfamiliar at first. Nevertheless, it offers significant benefits for writing flexible, fast, and reusable code, especially in scientific and mathematical contexts.

In Julia, when you call a function, the specific method that gets executed is determined by the types of all of its arguments, rather than just one. This selection, furthermore, occurs at runtime. Think of it, for example, like a versatile tool that adapts its operation based on all the materials you provide, not just the first one. For instance, a `multiply` function might behave differently if you provide it with two integers, two arrays, or an array and a single scalar.

How Multiple Dispatch Works

Let’s illustrate with a simple example. Imagine you have a function called `addnumbers`. If you call `addnumbers(2, 3)` (both integers), Julia might have a method tailored specifically for adding two integers. Conversely, if you call `addnumbers(2.0, 3.5)` (both floating-point numbers), another method for adding two decimal numbers would be used. Furthermore, if you call `addnumbers([1, 2], [3, 4])` (two arrays), yet another method for adding arrays would be executed, which would likely add each element separately.

The key advantage of multiple dispatch is that you, as the programmer, define these distinct behaviors for the same function name based on the types of the inputs. Julia then automatically selects the most appropriate and optimized method at runtime. Consequently, this leads to highly general and reusable code, a cornerstone of effective Julia scientific computing.
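The `addnumbers` example above can be sketched as follows. The method signatures are one possible choice; a fourth method mixing a number and a vector is added to show that dispatch considers every argument, not only the first.

```julia
# One function name, several methods selected by the types of ALL arguments.
addnumbers(a::Integer, b::Integer)             = a + b
addnumbers(a::AbstractFloat, b::AbstractFloat) = a + b
addnumbers(a::AbstractVector, b::AbstractVector) = a .+ b   # element-wise
addnumbers(a::Number, b::AbstractVector)       = a .+ b     # scalar plus vector

println(addnumbers(2, 3))             # 5
println(addnumbers(2.0, 3.5))         # 5.5
println(addnumbers([1, 2], [3, 4]))   # [4, 6]
println(addnumbers(10, [1, 2]))       # [11, 12]

# List every method currently defined for this function.
println(methods(addnumbers))
```

With exactly these definitions, a call such as `addnumbers(2, 3.5)` has no matching method and raises a `MethodError`. A more general method such as `addnumbers(a::Number, b::Number)` would cover mixed numeric types.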

Building Adaptable and Efficient Code for Julia Scientific Computing

The benefits of multiple dispatch for scientific computing are, therefore, substantial:

- Code Composability: Firstly, it facilitates the creation of small, clear functions that can be combined in powerful ways. When you define a new type (e.g., a specialized scientific data type), you simply define how existing functions (like `+`, `-`, `*`) should operate on it. All other code that uses these functions will then automatically work with your new type without requiring modifications.

- Performance Optimization: Secondly, because Julia’s JIT compiler is aware of all argument types at runtime, it can generate highly specialized and fast machine code for each set of input types. This is a primary driver of Julia’s speed.

- Extensibility: Thirdly, it’s remarkably easy to extend existing libraries or functionalities. You don’t need to modify their original code; you simply add new methods for existing functions that operate on your new types, and the system automatically discovers them. This, in turn, fosters a vibrant and adaptable ecosystem for Julia scientific computing.

This approach makes Julia an exceptionally powerful tool for developing numerical algorithms, where operations often need to be tailored for many data types, ranging from simple numbers to complex arrays or specialized scientific objects. Overall, multiple dispatch facilitates a flexible and high-performance coding style that is well suited to the evolving and diverse landscape of scientific research.
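The composability and extensibility points above can be made concrete with a toy type. The sketch below defines a hypothetical `Dual` type (a value paired with a derivative), teaches the existing `+` and `*` functions about it, and then passes it through generic code that was written without any knowledge of the new type. It is an illustration only, not a complete automatic differentiation implementation.

```julia
# Hypothetical minimal "dual number": a value together with its derivative.
struct Dual
    val::Float64
    der::Float64
end

# Add methods to the existing + and * functions for the new type.
Base.:+(a::Dual, b::Dual) = Dual(a.val + b.val, a.der + b.der)
Base.:*(a::Dual, b::Dual) = Dual(a.val * b.val, a.val * b.der + a.der * b.val)  # product rule

# Generic code written with no knowledge of Dual: x^3 + x^2.
poly(x) = (x * x) * x + x * x

println(poly(2.0))          # 12.0

d = poly(Dual(2.0, 1.0))    # seed the derivative with 1.0
println(d.val)              # 12.0, the value of x^3 + x^2 at x = 2
println(d.der)              # 16.0, the derivative 3x^2 + 2x at x = 2
```

`poly` itself was never modified: because it only calls `+` and `*`, it works for any type that provides those methods.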

Julia’s Performance Edge: Benchmarks and Real-World Impact

While technical features like JIT compilation and multiple dispatch explain how Julia achieves its speed, the true validation lies in its benchmarks and real-world applications. When performance is paramount, Julia consistently excels, often delivering speed increases that directly lead to accelerated research and the ability to solve previously intractable problems. Ultimately, this underscores the power of Julia scientific computing.

Studies frequently highlight Julia’s substantial speed advantages over popular languages like Python (even with optimized libraries like NumPy) and R. For example, one study reported an initial Julia model running about 13 times faster than its Python counterpart, with speedups in the range of 10-20 times after further, standard optimizations. These are not incremental gains; they signify a substantial shift in computational efficiency.

Outpacing the Competition in Julia Scientific Computing

Let’s delve into some direct comparisons:

- Python and R: While Python and R excel at rapid prototyping and boast vast tool ecosystems, their interpreted nature often impedes raw computational speed for large numerical tasks. Julia’s JIT compilation, however, circumvents this limitation, offering faster results for numerical-heavy code written purely in Julia.

- MATLAB: MATLAB is another widely used language in science and engineering, capable of fast execution for vectorized array operations. However, for more complex, loop-intensive calculations, Julia often outperforms it. For instance, in a benchmark involving a simple `exp()` function inside a loop, Julia was observed to be significantly faster than MATLAB.

- C and Fortran: C and Fortran are traditionally the go-to choices for raw speed in scientific computing. Julia’s design goal, however, was to approach their performance. In many numerical benchmarks, it often achieves comparable or even faster speeds, particularly when its compiler generates highly optimized code for specific types.
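Since results like these depend heavily on the task, it is worth timing your own workload. The sketch below times a loop-heavy kernel with `@elapsed` from Base; `exp_loop` is a hypothetical kernel in the spirit of the `exp()` loop mentioned above. For more careful measurements, the BenchmarkTools.jl package is commonly used instead, but it requires installation.

```julia
# Hypothetical loop-heavy kernel: accumulate exp over a range of inputs.
function exp_loop(n)
    acc = 0.0
    for i in 1:n
        acc += exp(-i / n)
    end
    return acc
end

exp_loop(10)   # warm-up call, so compilation time is not included in the timing

elapsed = @elapsed exp_loop(10_000_000)
println("10^7 exp evaluations took ", elapsed, " seconds")
```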

Case Studies in Speed

Beyond general benchmarks, real-world applications further demonstrate Julia’s speed. For example, in a compelling case study, a shallow water model with an irregular mesh was compared. The Julia code consistently ran faster than its MATLAB counterpart. Such models are critical in fields like ocean science and weather, where computational speed directly impacts the accuracy and timeliness of forecasts.

Perhaps the most compelling evidence of Julia’s capability, however, was its entry into the “petaflop club” in 2017. This exclusive group comprises systems capable of performing 10^15 floating-point operations per second. The Celeste.jl project, which processes sky images to detect and characterize celestial objects, achieved this on a supercomputer. Clearly, this remarkable feat demonstrates Julia’s scalability on the most powerful supercomputers, proving its readiness for the most challenging scientific tasks in Julia scientific computing.

To put these comparisons into perspective, consider the following simplified performance overview (actual performance varies greatly by task and implementation):

| Language | Typical Performance Profile | Notes |
|---|---|---|
| C/Fortran | Extremely Fast | Manual memory management, steep learning curve. |
| Julia | Near C/Fortran Speed | High-level syntax, JIT optimization, excellent for numerical tasks. |
| MATLAB | Fast for Vectorized Ops | Slower for loops, proprietary. |
| Python (NumPy) | Moderate Speed | Fast for NumPy operations, slower for pure Python loops. |
| R | Moderate Speed | Strong for statistics, slower for generic computation. |

Table 1: Comparative Performance Overview for Numerical Computing

Navigating the Landscape: Challenges and a Look Ahead for Julia Scientific Computing

Despite its clear technical advantages and impressive speed, Julia remains a relatively nascent language. While its growth has been remarkable, it nevertheless faces typical challenges. These challenges arise, for example, in comparison to the decades-old entrenched positions of Python and R in scientific computing. Understanding these challenges is, therefore, crucial for anyone considering Julia, as it provides a realistic view of its current standing and future trajectory.

One of the most common complaints, for instance, is the often-discussed “time to first plot” (TTFP) or start-up delay. While Julia’s JIT compilation delivers impressive speed for subsequent runs, the very first time you execute a new function or script can sometimes feel sluggish. This is essentially because the compiler is actively generating optimized machine code. For quick data explorations or very short programs, this initial delay can be noticeable. However, for long-running simulations or programs that invoke functions repeatedly, the overall benefits of sustained high performance far outweigh this initial wait. This, therefore, is a key consideration for Julia scientific computing.

Overcoming the Initial Hurdles

The Julia community, however, is actively working to mitigate TTFP. The compiler is continuously being improved. Additionally, tools like PackageCompiler.jl allow users to precompile their projects into a system image, which significantly reduces program startup times. While it may not completely disappear, the impact of TTFP is diminishing as the language and its tooling mature. Clearly, this progress is vital for broader adoption of Julia scientific computing.

Another evolving aspect of Julia, moreover, is its package ecosystem and the maturity of its community. While Julia has an active and highly engaged community, it is still smaller and less established than the vast user bases of Python and R. Consequently, this can sometimes lead to:

- Smaller Library Ecosystem: While core Julia scientific computing libraries are robust, some niche areas might have fewer packages or less comprehensive documentation compared to Python’s extensive PyPI repository.

- Varying Package Maturity: Furthermore, as with any evolving open-source ecosystem, the quality, robustness, and documentation for packages outside of Julia’s core scientific computing offerings can vary.

- Slower Adoption Rate: Finally, despite its technological superiority in many respects, Julia’s adoption rate, especially in enterprise environments, has been slower than the overall growth of scientific machine learning. Python, with its versatility and immense existing user base, remains a dominant force.

Growing Pains of a Young Language in Scientific Computing

These challenges are typical “growing pains” for any powerful new language. However, the community is vibrant, welcoming, and actively striving to enhance documentation, tooling, and package robustness. The steady influx of new users and contributors means, consequently, that the ecosystem is rapidly expanding, filling gaps and improving existing libraries for Julia scientific computing.

Interoperability with other languages is another practical consideration. Julia offers robust mechanisms for calling out to them. For instance, you can invoke Python code via PyCall.jl or R code via RCall.jl. This allows you to leverage existing libraries in other languages if a native Julia equivalent isn’t yet available. However, invoking Julia functions from other languages can be more complex, requiring specific setup and knowledge of foreign function interfaces. Simpler solutions are expected to emerge here as the tooling matures.

The Road to Widespread Adoption for Julia Scientific Computing

Finally, debugging and software development tools are good but still evolving compared to those of very mature languages. Users sometimes report that testing frameworks are less developed than Python’s and that error messages can be cryptic. This is an area under active development, with new tools and methodologies continuing to improve the development experience.

In conclusion, Julia presents a compelling and evolving solution for high-performance scientific computing. It brings together the speed of compiled languages and the clarity of dynamic languages, addressing a long-standing challenge for scientists and developers. While it still faces challenges related to its young tooling, startup delays, and adoption rate compared to more established languages, its foundational design philosophy and impressive technical capabilities make it a powerful tool. For demanding scientific computing tasks, advanced machine learning, and complex data analysis, Julia is not merely another option; it is a leading choice, poised to accelerate new discoveries and innovations.

What are the most challenging computational problems in your field, and how do you envision a language like Julia helping you overcome them?