Imagine building a complex LEGO castle with thousands of pieces, each serving a specific purpose. Traditionally, you would gather the pieces, follow instructions, and build the castle on a table meant only for it. Moving it later would mean carefully taking it apart and then reassembling it in the new location. However, this traditional method often led to problems; for instance, a piece that worked on one table might fail on another. This analogy, therefore, helps to understand the challenges that Docker and Kubernetes were created to solve.

Modern application development faced similar problems: developers built software that ran well on their machines, but it often broke when deployed to another server. This common issue, known as the “works on my machine” problem, wasted countless hours. Eventually, a new approach arrived: containerization. This method specifically packages your app and all its necessities—including code, runtime, system tools, and libraries—into a single, separate unit called a container. In essence, it’s like putting your LEGO castle into a standard, sealed box that ensures your castle will look and work the same way, regardless of where you open it.

At the forefront of this revolution are two powerful technologies: Docker and Kubernetes. Working together, they fundamentally change how we build, deploy, and manage applications today. Docker helps you create these standard boxes, called containers. Kubernetes then acts as a smart manager, ensuring your boxes are delivered, opened, scaled, and maintained effectively across many locations. Together, they form a robust solution that brings significant consistency, scalability, and efficiency to software development. This powerful combination of Docker and Kubernetes has become indispensable.

Containerization: A Foundation for Docker and Kubernetes

Containerization is more than a buzzword; it’s a pivotal shift in how we build software, solving many problems that developers and operations teams have faced for decades. By isolating applications, containers eliminate problems caused by disparate environments and dependencies, thereby making the entire development and deployment process much smoother.

Understanding Containerization for Docker and Kubernetes

Think of a container as a lightweight, isolated software package that can run independently. This package includes everything an app needs to run: its code, runtime, system tools, libraries, and settings. Each container shares the host’s OS kernel with other containers, yet it maintains its own filesystem, processes, and network interfaces. This isolation prevents conflicts between applications and helps an app behave the same way wherever it runs.

Virtual machines (VMs) virtualize an entire hardware stack, running a full operating system for each application. In contrast, containers are significantly more efficient than VMs; they are smaller, start up faster, and consume fewer resources. This efficiency makes them ideal for modern applications, particularly distributed ones often called microservices. In a microservices architecture, an application is split into many smaller, independent services, each running in its own container. This setup works exceptionally well for deployments using Docker and Kubernetes.

Why Modern Apps Need Containerization and Orchestration with Docker and Kubernetes

Today’s applications are rarely simple programs. They do not run in isolation; instead, they are complex systems that need to handle millions of users, process vast amounts of data, and scale up or down instantly. However, traditional deployment methods often struggle with these needs. For example, managing dependencies becomes a significant hurdle, ensuring compatibility across servers poses another common challenge, and rapidly deploying updates also presents difficulties.

Containerization directly solves these problems by providing a consistent environment. This means what works on your laptop also works in testing and production. This consistency thus greatly reduces bugs, particularly those often due to environmental differences. Furthermore, containers offer isolation, which improves security by limiting the impact in case of a breach. Consequently, if one container is attacked, it’s harder for a hacker to reach other parts of your system. Such reliability and agility are vital, especially when combined with Docker and Kubernetes. Ultimately, this helps businesses innovate rapidly and stay competitive.

Docker: Crafting the Perfect Package

Docker popularized containerization, offering a robust platform for building, packaging, and running individual containers. It simplifies the entire process of creating these isolated application environments, turning a complex idea into a simple, user-friendly tool that is accessible to most developers. When used with Kubernetes, Docker’s role becomes even more important.

Docker’s Building Blocks for Containerized Applications

At its heart, Docker provides tools that help define how your application should be packaged. It starts with a simple yet powerful text file called a Dockerfile. This file contains instructions that tell Docker how to build an image of your application. An image is a read-only template that holds the application’s files together with the metadata needed to create a container. Think of a Dockerfile as a recipe, and the image as the prepared meal, ready to be served as a running container.

For example, a Dockerfile might specify:

- Which base operating system image to use (e.g., Ubuntu, Alpine Linux).

- Which software dependencies to install (e.g., Python, Node.js, Java).

- Your application code.

- How to configure the environment.

- Which command to run when the container starts.

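This declarative approach makes builds repeatable: the same Dockerfile with the same inputs produces an equivalent image each time, especially when base image and dependency versions are pinned. It also removes most manual setup steps, and the human errors that come with them, from the deployment process.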
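To make the list above concrete, here is a minimal Python sketch that assembles Dockerfile text from those five ingredients. The helper `render_dockerfile`, the base image tag `python:3.12-slim`, the file names, and the environment variable are illustrative assumptions rather than anything this article prescribes; only the instruction keywords (`FROM`, `WORKDIR`, `COPY`, `RUN`, `ENV`, `CMD`) are standard Dockerfile syntax.

```python
import json


def render_dockerfile(base_image, install_cmd, app_dir, env, start_cmd):
    """Return Dockerfile text covering the five items listed above.

    All argument values are hypothetical placeholders chosen by the caller.
    """
    lines = [
        f"FROM {base_image}",   # base operating system / runtime image
        f"WORKDIR {app_dir}",   # working directory inside the image
        "COPY . .",             # application code
        f"RUN {install_cmd}",   # software dependencies
    ]
    # Environment configuration, one ENV instruction per variable.
    lines += [f"ENV {key}={value}" for key, value in sorted(env.items())]
    # Command to run when the container starts, in exec (JSON array) form.
    lines.append(f"CMD {json.dumps(start_cmd)}")
    return "\n".join(lines) + "\n"


dockerfile = render_dockerfile(
    base_image="python:3.12-slim",
    install_cmd="pip install --no-cache-dir -r requirements.txt",
    app_dir="/app",
    env={"APP_ENV": "production"},
    start_cmd=["python", "main.py"],
)
print(dockerfile)
```

In practice you would usually write the Dockerfile by hand; generating it here simply keeps each list item visible as one line of output.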

Mastering Dockerfiles and Container Images

Dockerfiles are powerful, capable of creating immutable container images. This means an image cannot be changed once built. Consequently, if you need to update your application, you build a new image with the necessary changes, rather than altering the old one. This immutable approach is crucial for consistency and reliability, ensuring you know exactly what’s inside your package.

These images are built in layers, and unchanged layers are reused from cache. When you make a change, only the affected layer and the layers built after it need to be rebuilt and transferred, which makes image distribution efficient. Once a Docker image is created, you can push it to a registry. Docker Hub, for example, is a public registry that lets you share your application images with others and pull them onto different servers for deployment. This easy distribution is a key reason why Docker is so widely used.
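The layering idea can be illustrated with a toy model in Python. This is a sketch under simplifying assumptions, not Docker’s actual cache implementation: each layer ID here is derived only from the instruction text and the previous layer’s ID, whereas real builders also take file contents into account. The function names and the sample instructions are hypothetical.

```python
import hashlib


def layer_ids(instructions):
    """Return one ID per instruction; each ID depends on all earlier layers."""
    ids = []
    parent = ""
    for instruction in instructions:
        digest = hashlib.sha256((parent + "\n" + instruction).encode()).hexdigest()
        ids.append(digest[:12])
        parent = digest
    return ids


def reusable_layers(old, new):
    """Count the leading layers whose IDs are unchanged and can be reused."""
    count = 0
    for old_id, new_id in zip(layer_ids(old), layer_ids(new)):
        if old_id != new_id:
            break
        count += 1
    return count


v1 = ["FROM python:3.12-slim", "RUN pip install flask", "COPY app.py /app/app.py"]
v2 = ["FROM python:3.12-slim", "RUN pip install flask", "COPY app_v2.py /app/app.py"]
print(reusable_layers(v1, v2))  # 2: only the last layer has to be rebuilt
```

Because each ID chains on its parent, a change early in the file invalidates everything after it, which is why frequently changing steps are usually placed near the end of a Dockerfile.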

Docker Solves the “Works on My Machine” Problem

Docker effectively eliminates the frustrating “works on my machine” problem. By including all dependencies in the container image, Docker ensures your application runs the same way, irrespective of the underlying environment. Specifically, the Docker container provides a consistent environment, whether it runs on a laptop, a testing server, or a production cloud.

This consistency saves considerable time and reduces debugging efforts. Developers can be confident their code will work as planned as it moves through development stages. Similarly, operations teams benefit from predictable deployments, making releases smoother and less error-prone. Indeed, Docker’s straightforward approach to packaging applications has made it a pivotal tool for many companies, while also preparing the way for advanced orchestration with Kubernetes.

Kubernetes: Orchestrating Docker Containers at Scale

Docker excels at creating and managing single containers. However, one container is often insufficient for modern applications, as most require many—sometimes hundreds or thousands—to work together. Inevitably, managing these containers manually across many servers quickly becomes exceedingly complex. This is where Kubernetes steps in, providing robust orchestration for Docker containers.

Why Kubernetes Goes Beyond Individual Docker Containers

Imagine having many Docker containers that you need to deploy. They must be able to communicate with each other. You must restart them if they crash, scale them up for more traffic, and upgrade them without stopping service. Doing all this manually is tedious and error-prone. Kubernetes (K8s) is an open-source platform designed to automate these tasks.

It takes over the arduous work of deploying, scaling, and managing containerized applications across many machines in a cluster. To achieve this, Kubernetes provides a robust framework that helps define how your containers should run and interact. Indeed, it continuously strives to maintain your desired setup. This allows developers and operations teams to focus on building and improving applications, rather than spending time on managing the underlying infrastructure. The synergy between Docker and Kubernetes makes this possible.
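The idea of continuously maintaining a desired setup can be sketched as a small reconciliation function in Python. This is a conceptual illustration, not Kubernetes code: the function `reconcile`, the container names, and the action tuples are all hypothetical.

```python
def reconcile(desired_replicas, running):
    """Compare desired state with observed state and return corrective actions.

    `running` maps a hypothetical container name to True (healthy) or
    False (crashed).
    """
    actions = []
    healthy = [name for name, ok in sorted(running.items()) if ok]
    # Crashed containers are removed so they can be replaced.
    for name, ok in sorted(running.items()):
        if not ok:
            actions.append(("remove", name))
    missing = desired_replicas - len(healthy)
    if missing > 0:
        # Too few healthy replicas: start replacements.
        actions += [("start", f"replica-{i}") for i in range(missing)]
    elif missing < 0:
        # Too many replicas: stop the surplus.
        actions += [("stop", name) for name in healthy[missing:]]
    return actions


print(reconcile(3, {"web-a": True, "web-b": False}))
```

A real controller runs this kind of comparison repeatedly, so the system drifts back toward the declared state after every failure or configuration change.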

Kubernetes as Your Cluster’s Operating System

Think of Kubernetes as an “operating system” for your entire cluster of machines, allowing you to manage the cluster as a single computing resource instead of managing each server individually. Furthermore, Kubernetes abstracts away the complex infrastructure, letting you deploy applications without needing to worry about which specific server they run on. This integration helps you leverage the full power of Docker and Kubernetes.

Key components of Kubernetes include:

- Nodes: The actual machines (physical or virtual) that run your containers.

- Pods: These represent the smallest deployable units in Kubernetes. Typically, a Pod holds one or more containers (usually one) and manages their shared resources.

- Services: They define a group of Pods and set rules for how to access them, ultimately providing stable network access to your applications.

- Controllers: These ensure your applications stay in their desired state, handling scaling, self-healing, and updates automatically.

Indeed, these abstractions make it easier for Kubernetes to deploy and manage distributed applications effectively.
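As a rough illustration of how a Service groups Pods, the Python sketch below matches Pods by label, which is the mechanism Kubernetes Services use to find their backends. The data layout, the function `select_pods`, and all names and labels are assumptions made for this example.

```python
def select_pods(pods, selector):
    """Return names of pods whose labels contain every key/value in the selector."""
    return [
        pod["name"]
        for pod in pods
        if all(pod["labels"].get(key) == value for key, value in selector.items())
    ]


# Hypothetical cluster state: three pods spread over two nodes.
pods = [
    {"name": "web-1", "node": "node-a", "labels": {"app": "web", "tier": "frontend"}},
    {"name": "web-2", "node": "node-b", "labels": {"app": "web", "tier": "frontend"}},
    {"name": "db-1", "node": "node-a", "labels": {"app": "db"}},
]

web_backends = select_pods(pods, {"app": "web"})
print(web_backends)  # ['web-1', 'web-2']
```

Because the Service refers to labels rather than to individual Pods, Pods can be replaced or moved between nodes without clients needing to know.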

Kubernetes: Managing Container Orchestration Complexity

Kubernetes automatically handles many complex tasks, for example:

- Scheduling: It intelligently decides which node in the cluster is best for a new container, basing this on available resources and other rules.

- Load Balancing: It distributes incoming network traffic across multiple instances of your application, ensuring high performance and continuous availability.

- Self-Healing: If a container or even an entire node fails, Kubernetes automatically detects the issue and then remediates it. For example, it restarts the container or moves it to a healthy node. This proactive approach ensures continuous operation.

- Automated Rollouts and Rollbacks: It lets you roll out new application versions gradually, typically with little or no downtime, and you can revert to a previous version if problems occur.

- Resource Management: It ensures containers receive necessary resources, while also preventing any single container from monopolizing them.

This advanced management thus helps operate even very large and complex applications with greater reliability and less manual effort. Indeed, it is a game-changer for companies that need to scale rapidly and maintain high uptime, especially when combined with Docker’s robust packaging.
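The scheduling step from the list above can be sketched as a filter-then-score decision. This Python example is a simplified model, not the Kubernetes scheduler itself: the function `schedule`, the scoring rule (prefer the node with the most free CPU), and the units (CPU in millicores, memory in MiB) are assumptions for illustration.

```python
def schedule(pod_request, nodes):
    """Pick a node for a pod: keep nodes that fit, then prefer the most free CPU.

    Returns the chosen node name, or None if no node has enough room.
    """
    feasible = [
        node for node in nodes
        if node["free"]["cpu"] >= pod_request["cpu"]
        and node["free"]["mem"] >= pod_request["mem"]
    ]
    if not feasible:
        return None  # in a real cluster the pod would stay pending
    best = max(feasible, key=lambda node: (node["free"]["cpu"], node["free"]["mem"]))
    # Reserve the requested resources on the chosen node.
    best["free"]["cpu"] -= pod_request["cpu"]
    best["free"]["mem"] -= pod_request["mem"]
    return best["name"]


nodes = [
    {"name": "node-a", "free": {"cpu": 500, "mem": 1024}},
    {"name": "node-b", "free": {"cpu": 2000, "mem": 4096}},
]
request = {"cpu": 1000, "mem": 512}
placements = [schedule(request, nodes) for _ in range(3)]
print(placements)  # ['node-b', 'node-b', None]
```

The third request finds no node with enough free CPU, which mirrors how a cluster signals that it needs more capacity.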

The Powerful Synergy: Docker and Kubernetes Working Together

Newcomers often wonder whether Docker and Kubernetes are rival technologies. In fact, they complement each other, handling different yet equally vital stages of an application’s lifecycle. Docker sets the foundation, and Kubernetes builds the main structure on top of it. The combined strength of Docker and Kubernetes creates a powerful platform.

The Harmonious Partnership of Docker and Kubernetes

Think of Docker as a skilled builder, creating perfect, standard shipping containers for your goods. These containers are well-sealed and ready for transport. Kubernetes, on the other hand, is like a smart port manager. It moves, organizes, and loads these containers onto ships, ensuring they reach their designated location efficiently. It also manages the entire port, including upkeep, scaling resources, and resolving problems.

Docker creates container images, which are the blueprints for your packaged applications. It also provides the `docker run` command to start single containers. Kubernetes then takes these images (or any other OCI-compatible images built with different tools) and runs them at scale through a container runtime such as containerd or CRI-O, deploying, managing, and scaling your applications across a cluster. Without container images and a runtime to execute them, Kubernetes would have no containers to manage. Conversely, without Kubernetes or a similar orchestrator, managing numerous containers in production would be an arduous manual task. This partnership defines much of modern cloud-native development with Docker and Kubernetes.

Seamless Development to Production with Docker and Kubernetes

Docker and Kubernetes work together seamlessly, creating a highly smooth workflow that extends from a developer’s machine all the way to large production deployments. Developers, for instance, use Docker to build and test their applications in containers locally. This local container environment thus closely mimics the production environment.

When the application is ready, the Docker image is pushed to a central registry. Kubernetes then takes over, pulling that image from the registry and deploying it onto the cluster, thereby leveraging all orchestration benefits. This seamless transition reduces integration problems, speeds up the entire development and deployment process, and creates a consistent flow. Consequently, this greatly reduces friction and ensures predictability across the entire software lifecycle. This is a core benefit of adopting Docker and Kubernetes.
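The build, push, and deploy flow described here can be sketched as a short list of CLI invocations. The Python example below only assembles and prints the commands rather than running them. The image name, registry host, deployment name, and container name are hypothetical; the subcommands themselves (`docker build`, `docker push`, `kubectl set image`, `kubectl rollout status`) are standard.

```python
import shlex


def release_commands(image, deployment, container):
    """Return the CLI steps for one release as lists of arguments."""
    return [
        ["docker", "build", "-t", image, "."],        # build the image locally
        ["docker", "push", image],                    # publish it to the registry
        ["kubectl", "set", "image",                   # point the deployment at it
         f"deployment/{deployment}", f"{container}={image}"],
        ["kubectl", "rollout", "status",              # wait for the rollout to finish
         f"deployment/{deployment}"],
    ]


steps = release_commands(
    image="registry.example.com/shop/web:1.4.0",
    deployment="web",
    container="web",
)
for step in steps:
    print(shlex.join(step))
```

A CI system would typically run these steps with something like `subprocess.run(step, check=True)`, stopping at the first failure.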

Local Development with Docker Desktop

Docker Desktop makes this synergy more accessible. It is a popular application for running Docker on Windows and macOS, and it includes an optional single-node Kubernetes cluster that you can enable in its settings. This feature allows developers to test their applications in a Kubernetes environment right on their local machines, which helps catch configuration problems before the application is deployed to a larger Kubernetes cluster.

A local Kubernetes instance allows developers to practice deploying services, setting up networking, and understanding application performance, all within a Kubernetes environment. Essentially, this local setup acts like a small production environment, offering a valuable sandbox for development and early testing. It helps close the gap between single-container development and large cluster deployments.

Key Benefits of Docker and Kubernetes for Business

The combined power of Docker and Kubernetes is not just a technological marvel; it brings tangible business benefits. Companies adopting this approach experience enhanced agility, reliability, and cost-efficiency—factors crucial in today’s rapidly evolving digital world.

Resilience and Scalability with Docker and Kubernetes

One key benefit is the ability to easily scale applications up or down based on demand. For example, Kubernetes continuously monitors the load on your applications. If traffic spikes, it can automatically start more containers to handle the extra demand. Conversely, when traffic slows, it can scale them back down, thereby conserving computing resources and costs.
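The scaling decision can be made concrete with the core formula that the Kubernetes Horizontal Pod Autoscaler documentation gives: desired replicas = ceil(current replicas × current metric / target metric). The Python sketch below applies that formula with a simple clamp. The function name and the default bounds are assumptions, and real autoscaling adds details omitted here, such as a tolerance band and stabilization windows.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10):
    """Scale the replica count in proportion to metric pressure, within bounds."""
    raw = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, raw))


# Four replicas averaging 90% CPU against a 60% target: scale up.
print(desired_replicas(4, current_metric=90, target_metric=60))  # 6
# The same four replicas at 20% CPU: scale down.
print(desired_replicas(4, current_metric=20, target_metric=60))  # 2
```

The clamp matters in practice: an upper bound protects the budget during a traffic spike, and a lower bound keeps enough replicas running for availability.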

Moreover, this combination greatly boosts application resilience. Kubernetes continuously monitors the health of your containers. If a container crashes or stops responding, Kubernetes automatically restarts it or replaces it with a new, healthy one. This self-healing limits downtime and helps keep your applications available to users even when unexpected failures occur. This is a crucial advantage of Docker and Kubernetes.

Unmatched Portability of Docker and Kubernetes Deployments

Docker containers are inherently portable. Once an application is packaged as a container image, it can run on any host with a compatible container runtime and CPU architecture. Thus, your application can easily move from a laptop to a data center, to a public cloud like AWS, Google Cloud, or Azure, or even to a hybrid cloud setup.

Kubernetes enhances this portability by offering a consistent deployment platform. In other words, Kubernetes provides a single, unified way to deploy and manage your applications, regardless of where your cluster infrastructure resides. This consistent experience reduces the risk of vendor lock-in and gives businesses the freedom to choose the best environment with little or no change to their applications.

Accelerating CI/CD with Docker and Kubernetes

Docker and Kubernetes revolutionize Continuous Integration and Continuous Delivery (CI/CD) pipelines, making them significantly more efficient. With containers, building, testing, and deploying become highly automated and reliable. Developers can rapidly build new Docker images for every code change, and these images are then automatically tested.

Once tests pass, Kubernetes can swiftly deploy these new versions to production. This automation greatly reduces the time it takes to deliver new features and updates to users. Furthermore, manual work is minimized, leading to fewer errors and faster cycles of change. As a result, businesses can respond to market needs very rapidly and deliver value to customers more frequently.

Resource Management and Cost Savings with Kubernetes

Kubernetes intelligently assigns resources to containers within a cluster, ensuring applications receive the CPU, memory, and storage they need without allocating excessive resources. This efficient resource utilization helps reduce waste, because you can size the cluster closer to what your applications actually consume.

By packing numerous containers onto fewer machines, companies can achieve higher density, leading to lower costs. Furthermore, Kubernetes’ automation minimizes the need for extensive manual management, freeing up IT staff to focus on more strategic initiatives rather than routine maintenance. Overall, these efficiencies lead to significant cost savings for the organization.
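The density argument can be illustrated with a classic bin-packing heuristic. The Python sketch below uses first-fit decreasing to place CPU requests onto equal-sized machines. This is an illustration of the general idea only: it is not how the Kubernetes scheduler places Pods, and the request sizes and node capacity (in millicores) are made up.

```python
def pack_first_fit_decreasing(requests, node_capacity):
    """Place CPU requests onto equal-sized nodes, largest request first.

    Returns a list of nodes, each represented by the requests placed on it.
    """
    if any(request > node_capacity for request in requests):
        raise ValueError("a request is larger than a whole node")
    nodes = []
    for request in sorted(requests, reverse=True):
        for placed in nodes:
            if sum(placed) + request <= node_capacity:
                placed.append(request)  # fits on an existing node
                break
        else:
            nodes.append([request])     # no room anywhere: add a node
    return nodes


requests = [500, 1500, 250, 1000, 750]
packed = pack_first_fit_decreasing(requests, node_capacity=2000)
print(len(packed), packed)  # 2 [[1500, 500], [1000, 750, 250]]
```

Five workloads that might each have occupied their own server fit on two fully used machines in this example, which is the kind of consolidation that lowers infrastructure cost.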

Kubernetes’ Automatic Healing for Constant Uptime

One valuable aspect of Kubernetes is its built-in self-healing. Imagine a server suddenly stops working. In a traditional setup, this could halt the application and require manual intervention to restore service. With Kubernetes, the platform detects the failure on its own.

It then reschedules the affected containers onto healthy nodes, often without users noticing any interruption. This rapid, automatic fault tolerance is key; it helps keep business-critical applications highly available and your services working, even if individual components fail. Ultimately, this provides a robust and reliable foundation for your digital services.

Achieving True Consistency with Docker and Kubernetes

Together, Docker and Kubernetes finally deliver truly consistent environments. First, Docker ensures the application package is consistent. Then, Kubernetes offers a consistent platform for deploying and running these packages, irrespective of the underlying infrastructure.

This consistency largely eliminates the “it works on my machine” problem. It means the image you build and test locally is the same image that goes to production. This predictability streamlines troubleshooting, reduces integration problems, and improves the overall reliability of your software delivery, thereby building trust across development, testing, and operations teams. This makes Docker and Kubernetes an exceptionally strong pair.


Navigating Docker and Kubernetes: Challenges and Solutions

Docker and Kubernetes offer immense benefits, but their adoption also presents challenges. Like any powerful technology, they come with a learning curve and require careful consideration regarding their implementation. Therefore, understanding these challenges early helps you plan a smoother journey for adopting Docker and Kubernetes.

Taming the Docker and Kubernetes Learning Curve

Both Docker and, especially, Kubernetes, present a steep learning curve. Kubernetes, in particular, is an extensive system with numerous components, concepts, and configurations. Consequently, developers and operations teams need to embrace new methodologies, including declarative configuration, microservices architecture, and cloud-native patterns, all of which require a significant investment in training and education.

Smart Solution: First, start small. Begin with simpler applications or non-critical services to gain experience. Leverage online courses, certifications, and community support. Consider managed Kubernetes services from cloud providers (like EKS, AKS, GKE), which can initially alleviate some of the management burden. Ultimately, a gradual adoption allows your team to build skills steadily with Docker and Kubernetes.

Keeping Docker Images Lean and Secure

Docker images can easily become bloated if not managed effectively. For example, including unnecessary files or layers can increase image size, leading to longer downloads and higher storage costs. Moreover, images often rely on base images and third-party libraries, which can introduce security vulnerabilities. If not updated or scanned regularly, these vulnerabilities pose a significant risk.

Smart Solution: First, utilize multi-stage Docker builds to create smaller, more efficient images. Next, prefer minimal base images like Alpine Linux. Integrate regular image scanning tools into your CI/CD pipeline to detect and address vulnerabilities early. Additionally, keep your base images and dependencies updated to patch known security flaws. Finally, focus on “least privilege” rules when setting container permissions for Docker.

Solving Persistent Data Challenges in Docker and Kubernetes

Containers are designed to be short-lived, meaning they can be created and destroyed quickly. Any data written to a container’s own filesystem is lost when the container is removed or replaced. This ephemeral nature makes managing persistent data, such as databases or user uploads, particularly challenging: important data should not be stored only inside the container.

Smart Solution: Instead, use Docker volumes for single containers. For cluster-wide storage, leverage Kubernetes Persistent Volumes (PVs) and Persistent Volume Claims (PVCs). These methods allow you to attach external storage to your containers, ensuring data remains safe during container restarts or replacements. Furthermore, cloud-native storage solutions integrate seamlessly with Kubernetes and are excellent choices for managing data at scale.
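How a Persistent Volume Claim gets matched to a Persistent Volume can be sketched as a simple lookup. The Python example below is a simplified model: it considers only capacity and access mode and picks the smallest volume that fits, whereas real binding also involves storage classes and dynamic provisioning. The function `bind_claim` and the volume names are hypothetical; `ReadWriteOnce` is one of the standard Kubernetes access modes.

```python
def bind_claim(claim, volumes):
    """Bind a claim to the smallest unbound volume that satisfies it.

    Returns the volume name, or None if nothing suitable is available.
    """
    candidates = [
        volume for volume in volumes
        if not volume["bound"]
        and volume["capacity_gib"] >= claim["request_gib"]
        and claim["access_mode"] in volume["access_modes"]
    ]
    if not candidates:
        return None  # the claim would stay pending
    chosen = min(candidates, key=lambda volume: volume["capacity_gib"])
    chosen["bound"] = True
    return chosen["name"]


volumes = [
    {"name": "pv-a", "capacity_gib": 5, "access_modes": ["ReadWriteOnce"], "bound": False},
    {"name": "pv-b", "capacity_gib": 20, "access_modes": ["ReadWriteOnce"], "bound": False},
    {"name": "pv-c", "capacity_gib": 100, "access_modes": ["ReadWriteOnce"], "bound": False},
]
claim = {"request_gib": 10, "access_mode": "ReadWriteOnce"}
print(bind_claim(claim, volumes))  # pv-b
```

The application only states what it needs in the claim; which physical storage backs it is decided by the cluster, and the data outlives any individual container.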

Mastering Container Networking for Docker and Kubernetes

Networking between containers can be complex, especially in large, distributed deployments. Containers need to communicate with each other, a process that often occurs across different nodes. Moreover, exposing applications to the outside world requires careful consideration, as does securing these network paths. Ultimately, this necessitates a meticulous setup of network policies, load balancers, and service meshes.

Smart Solution: First, thoroughly understand Kubernetes networking concepts like Services, Ingress, and Network Policies. Then, leverage Service Meshes (e.g., Istio, Linkerd) to assist with advanced traffic management, security, and observability in microservices. Finally, design your network with security as a paramount concern: separate traffic and apply strict firewall rules, especially between different application components managed by Docker and Kubernetes.

Monitoring and Logging Docker and Kubernetes Environments

In a dynamic container environment, traditional monitoring tools may fall short. Containers are constantly being created, destroyed, and moved. Therefore, observing application performance, diagnosing issues, and tracking container actions requires specialized tools and strategies. Moreover, it’s challenging to track logs from ephemeral containers or effectively monitor resource utilization across a constantly changing cluster.

Smart Solution: First, establish a central logging solution (e.g., ELK Stack, Splunk, Loki) that aggregates logs from all containers. Then, implement robust monitoring tools such as Prometheus, Grafana, Datadog, and New Relic. These are designed for container environments and track CPU, memory, network, and application performance metrics. Finally, ensure you configure alerts for critical events. Ultimately, this enables you to respond swiftly to problems in your Docker and Kubernetes setup.

Market Adoption and Future Growth of Docker and Kubernetes

The widespread adoption of Docker and Kubernetes is not merely anecdotal; it is substantiated by robust market data. These technologies have, in fact, profoundly transformed software development and operations and are now integral components for businesses across all industries. The future of cloud-native development is intimately linked with Docker and Kubernetes.

Docker’s Foundation in the Industry

Docker has become the primary standard for containerization. Its intuitive design and powerful features have made it indispensable for developers everywhere. Market share numbers consistently place Docker at the forefront of containerization.

| Metric | Value |
|---|---|
| Market Share (among tools) | 83.18% – 87.67% |
| Companies Using Docker | Over 108,456 worldwide |
| US Customer Percentage | 44.24% |

These numbers underscore Docker’s pivotal role. It is the primary tool for creating portable, consistent application packages that power modern cloud-native systems. Its widespread adoption thus demonstrates its proven value and ease of use in producing container images, which Kubernetes subsequently manages.

Kubernetes’ Dominance in Orchestration

While Docker provides the containers, Kubernetes leads in managing them on a large scale. Its robust features for automation, scaling, and management have made it the undisputed leader in container orchestration.

| Metric | Value |
|---|---|
| Market Share (among orchestration tools) | 92% (Estimated) / 83% (Datadog) |
| Fortune 100 Companies Using Kubernetes | Over 50% |
| Small/Medium Organizations Using Kubernetes | 78% |
| Enterprise Adoption Increase (2019 to 2023) | From 78% to 96% (CNCF Report) |

Large enterprises are rapidly adopting Kubernetes, as reported by the Cloud Native Computing Foundation (CNCF). This reflects Kubernetes’ pivotal role in modern IT strategies, where it manages complex, distributed systems. It has become a key tool for businesses embracing cloud-native principles, especially when combined with Docker.

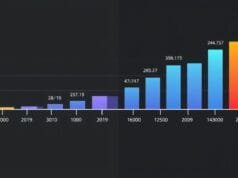

Growth Projections for Docker and Kubernetes Ecosystems

The growth of the Docker and Kubernetes ecosystems shows no signs of slowing. The global Kubernetes market is poised for substantial growth, indicating ongoing investment and expansion in container infrastructure.

- Global Kubernetes Market Value (2022): USD 1.8 billion

- Projected Global Kubernetes Market Value (2030): USD 7.8 billion

- Compound Annual Growth Rate (CAGR): 23.40%
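The figures above can be cross-checked with the standard compound annual growth rate formula, CAGR = (end / start)^(1 / years) − 1. The Python sketch below tries two readings of the period, since the list does not state when the forecast window begins. Both year counts are assumptions made for this check.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate as a fraction (0.20 means 20% per year)."""
    return (end_value / start_value) ** (1 / years) - 1


# Reading 1: growth counted over the 8 years from 2022 to 2030.
rate_8y = cagr(1.8, 7.8, 8)
# Reading 2 (assumption): growth counted over a 7-year forecast window.
rate_7y = cagr(1.8, 7.8, 7)

print(f"8 years: {rate_8y:.1%}, 7 years: {rate_7y:.1%}")
```

The 7-year reading comes out at roughly 23%, close to the quoted 23.40%, while the 8-year reading gives roughly 20%. The quoted rate therefore appears to assume a forecast window that starts after 2022.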

Moreover, securing these environments is a key driver of market growth. The market for container and Kubernetes security was USD 1.195 billion in 2022 and is indeed expected to grow significantly. These numbers, therefore, clearly demonstrate a strong and lasting commitment to containerization as a core part of future digital infrastructure. Furthermore, the robust ecosystem surrounding these technologies assures businesses of ongoing innovation and support for Docker and Kubernetes.

Your Path Forward with Docker and Kubernetes

Adopting Docker and Kubernetes can seem daunting at first, but the rewards are substantial. By leveraging these technologies, you empower your teams to build more resilient, scalable, and portable applications, thereby accelerating development, reducing operational costs, and delivering superior software to users more rapidly.

Starting your journey typically involves:

- Educating Your Team: Invest in comprehensive training to educate both developers and operations staff on core Docker and Kubernetes concepts.

- Containerizing Existing Applications: Begin by packaging simpler applications into Docker containers.

- Experimenting with Kubernetes: Start with a managed Kubernetes service or deploy a small local cluster to understand its orchestration capabilities.

- Implementing CI/CD: Integrate container builds and Kubernetes deployments into your automated pipelines.

- Focusing on Observability: Establish robust monitoring, logging, and alerting systems from the outset for your Docker and Kubernetes environments.

Remember, this is an evolution, not an overnight revolution. Therefore, take small steps, celebrate incremental wins, and learn from each deployment. Ultimately, fully embracing cloud-native containerization with Docker and Kubernetes will transform your software delivery and position your company for future success.

The combined power of Docker and Kubernetes forms the foundation of modern cloud-native application development. They offer a comprehensive solution for packaging, deploying, and managing applications, providing unmatched efficiency and reliability. While challenges certainly exist, the benefits are immense. Indeed, enhanced scalability, portability, and agility significantly outweigh the initial effort required to learn and adopt Docker and Kubernetes.

How do you envision Docker and Kubernetes reshaping your team’s development and deployment workflows in the coming year?