Master Prompt Engineering to unlock AI’s true potential, crafting precise prompts for optimal, accurate results and effective, valuable AI interactions.

Imagine you have a brilliant but sometimes literal assistant. To get the best work from them, you wouldn’t just mumble vague instructions. Instead, you would provide clear goals, context, examples, and specific guidelines. This is precisely what prompt engineering does for artificial intelligence, especially with powerful tools like large language models (LLMs). It’s an important skill that transforms AI’s raw power into useful, accurate, and relevant results.

Prompt engineering is not merely a technical task; it’s a blend of creativity and strategic thinking. It involves carefully designing and refining the inputs, or “prompts,” you provide to an AI. The goal is to guide the model toward the output you want while keeping that output helpful, accurate, and safe. This iterative process bridges your intent and the machine’s output.

Why Prompt Engineering is Your AI Superpower

The quality of an AI’s output is directly linked to the clarity and precision of your input prompt. To illustrate, consider it akin to a recipe. If your recipe is vague, the result might be completely different from what you imagined. Similarly, a poorly constructed prompt can lead to confusing, irrelevant, or even incorrect AI responses.

Well-crafted prompts, however, unlock AI’s true power. They lead to responses that are more accurate, relevant, and easier to understand. This efficiency is crucial. It means you get the targeted information you need faster. Moreover, the AI can handle more complex tasks with greater success. Ultimately, prompt engineering facilitates effective communication with intelligent machines, transforming them into vital tools for various applications.

Bridging Human Intent and Machine Action with Prompt Engineering

A significant challenge with AI is ensuring the machine truly comprehends your underlying goal. AI models, particularly LLMs, work by predicting the next most likely word (more precisely, the next token), based on patterns in the data on which they were trained. They don’t inherently “know” what you mean, so your prompt has to carry your intent.

This is precisely where prompt engineering becomes crucial. By carefully structuring your prompt, you embed your human intent directly into the AI’s process. In other words, you guide its word prediction. This, in turn, steers it away from generic answers and towards the detailed, specific output you desire. This meticulous design is central to prompt engineering. It helps the AI move beyond simple text generation to genuinely effective problem-solving.

How Prompt Engineering Directly Links to Quality and Relevance

Consider the difference between asking, “Tell me about cars,” and “As an automotive journalist, explain the key differences between electric vehicles and traditional gasoline cars, focusing on performance, environmental impact, and refueling time, suitable for a general audience.” Clearly, the second prompt is far more likely to yield a high-quality, relevant, and well-organized answer.

Prompt engineering directly impacts the output’s accuracy and relevance. It specifically reduces the chances of the AI misunderstanding your request. Moreover, it prevents the AI from providing information that is technically correct but not useful for your specific needs. This precision is more than merely convenient. In fact, it is crucial for deploying AI in critical tasks. Furthermore, it saves considerable time and effort in refining the output yourself. Effective prompting significantly improves your overall experience with AI.

The Building Blocks of Effective Prompt Engineering

Every effective prompt is built upon a few core elements. By mastering these elements, you can consistently guide AI models to produce optimal results. Think of them as essential ingredients for a successful AI interaction. Incorporating these elements provides the AI with all the information it needs to perform at its best, truly showcasing the power of prompt engineering.

This structured approach simplifies the complex task of AI communication. Moreover, you gain control over the output, thereby ensuring it matches what you expect.

Laying a Foundation with Clear Goals

Before you even start typing, define your goal. What do you want the AI to achieve? What specific action should it take? Strong action verbs help clarify your intent for the model. For instance, instead of “Information about marketing,” try “Summarize key digital marketing trends from 2023” or “Generate five creative taglines for a new organic coffee brand.”

Clear objectives prevent ambiguous answers. They also guide the AI toward a meaningful response. This is therefore the first and most crucial step in crafting an effective prompt. Without a clear goal, the AI might, for example, stray off-topic or provide unhelpful content. This underscores the importance of focused prompt engineering.

Supplying Crucial Context

AI models require context to fully grasp the background and nuances of your request. Provide relevant facts, data, or even a scenario that frames the task. For example, if you’re asking for advice, explain your current situation. Likewise, if you’re requesting a comparison, provide the necessary items to compare. In essence, context is indispensable.

Context helps the AI generate more informed and detailed responses, thereby avoiding generic statements. It helps the model understand the “why” behind your request. Consequently, this leads to more intelligent, tailored responses through effective prompt engineering.

Precision in Every Word

Ambiguity hinders optimal AI output. Therefore, employ precise language and avoid vague terms. Be specific about what you need. For instance, this might include details like the desired format (e.g., “bullet points,” “a formal report,” “JSON”), length limits (e.g., “under 200 words,” “two paragraphs”), or specific regions or timeframes (e.g., “focus on the European market,” “data from 2020-2022”). Hence, clarity is paramount.


Every detail you add helps narrow the AI’s search for information. In other words, it ensures the output is highly specific and aligns with your exact needs. Precision, therefore, is a core tenet of prompt engineering. It minimizes the AI’s need for inference.

Few-Shot Prompting in Prompt Engineering

Sometimes, the best way to explain what you want is to show it. Few-shot prompting involves including a small number of input-output examples within your prompt. Essentially, this helps the AI grasp the desired style, tone, or pattern. For example, if you desire product descriptions in a specific style, provide one or two existing examples.

This technique is incredibly powerful for guiding the model toward complex or very specific answers. The AI observes the pattern and then endeavors to replicate it for your new request. Thus, it’s akin to giving your assistant a couple of finished reports and saying, “Do more like these,” a direct application of effective prompt engineering.
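As a rough illustration of the idea above, the sketch below assembles a few-shot prompt as plain text. The function name `build_few_shot_prompt`, the `Input:`/`Output:` labels, and the sample product descriptions are assumptions made for this example, not a required format.

```python
from typing import List, Tuple


def build_few_shot_prompt(
    instruction: str,
    examples: List[Tuple[str, str]],
    new_input: str,
) -> str:
    """Assemble an instruction, worked examples, and the new input into one prompt."""
    parts = [instruction.strip(), ""]
    for example_input, example_output in examples:
        parts.append(f"Input: {example_input}")
        parts.append(f"Output: {example_output}")
        parts.append("")
    # End with an open "Output:" so the model continues the pattern.
    parts.append(f"Input: {new_input}")
    parts.append("Output:")
    return "\n".join(parts)


# Illustrative examples only; replace with descriptions in your own style.
examples = [
    ("stainless steel water bottle",
     "Stay cool all day. Double-walled steel keeps drinks icy for 24 hours."),
    ("bamboo cutting board",
     "Chop with confidence. Sustainable bamboo that is gentle on your knives."),
]
prompt = build_few_shot_prompt(
    "Write a two-sentence product description in the style of the examples.",
    examples,
    "organic cotton tote bag",
)
print(prompt)
```

The resulting string can be sent to whichever model you use; the two examples do the work of showing the tone and length you expect.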

Setting the Rules: Instructions and Constraints

Clearly define the task and any limitations or requirements for the output. What should the AI do? What should it not do? For instance, you might instruct, “Do not include personal opinions,” or “Make sure the language is easy for a high school student to understand.” These guidelines are critical.

These instructions act as guardrails, preventing the AI from deviating off course. Moreover, they ensure the output meets your quality standards and specific requirements. Clearly stated constraints, therefore, help the AI comprehend the limits within which it must operate. Consequently, this yields responses that adhere to your rules and prove useful in prompt engineering.

Stepping into Character: Persona Assignment

Asking the AI to assume a particular role or viewpoint can significantly alter the response style and content. This is known as role-based prompting. For example, you could say, “Act as an experienced financial advisor” or “Respond as a questioning journalist.” This technique greatly enhances relevance.

This technique encourages the AI to take on a specific tone, vocabulary, and perspective. As a result, the output becomes more tailored for your audience or purpose. Moreover, it allows the AI to provide insights specifically crafted for a certain expertise or viewpoint, an advanced aspect of prompt engineering.
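The building blocks described so far (goal, context, precise format, constraints, and persona) can be combined in a small template. The following is a minimal sketch; the `PromptSpec` class, the `render_prompt` function, and the section labels are hypothetical names chosen for this example rather than any standard.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class PromptSpec:
    """Hypothetical container for the core elements of a prompt."""
    goal: str
    context: str = ""
    persona: str = ""
    constraints: List[str] = field(default_factory=list)
    output_format: str = ""


def render_prompt(spec: PromptSpec) -> str:
    """Render only the elements that were supplied, in a fixed order."""
    sections = []
    if spec.persona:
        sections.append(f"Act as {spec.persona}.")
    sections.append(f"Task: {spec.goal}")
    if spec.context:
        sections.append(f"Context: {spec.context}")
    if spec.constraints:
        rules = "\n".join(f"- {rule}" for rule in spec.constraints)
        sections.append(f"Constraints:\n{rules}")
    if spec.output_format:
        sections.append(f"Format: {spec.output_format}")
    return "\n\n".join(sections)


spec = PromptSpec(
    goal="Explain the key differences between electric vehicles and gasoline cars.",
    context="The audience is general readers with no engineering background.",
    persona="an automotive journalist",
    constraints=["Do not include personal opinions.", "Stay under 200 words."],
    output_format="Three bullet points: performance, environmental impact, refueling time.",
)
prompt = render_prompt(spec)
print(prompt)
```

Keeping the elements in separate fields makes it easier to change one of them at a time and see how the output responds.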

Unlocking Advanced Prompt Engineering Capabilities

While the core elements form the foundation, advanced prompt engineering techniques enable you to push AI models to new capabilities. These methods foster more sophisticated thinking, improve problem-solving, and enhance the understanding of complex requests. By employing these advanced strategies, you can therefore transform AI from a simple text generator into a powerful, intelligent partner.

Learning these techniques is like upgrading your toolkit; in effect, you gain access to more specialized tools. These can handle complex tasks with greater proficiency. Consequently, they significantly enhance the quality and depth of AI interactions through advanced prompt engineering. This mastery is ultimately invaluable.

Thinking Step-by-Step: Chain-of-Thought (CoT)

Complex questions often require step-by-step reasoning. Chain-of-Thought (CoT) prompting encourages the AI to decompose a problem into smaller, logical components and articulate its reasoning process. For example, you might prompt, “Let’s think step by step,” before asking a multi-stage question. Thus, it elucidates the AI’s reasoning.

This technique aids the AI in solving complex problems. It also provides transparency. You can observe how the AI arrived at its answer. This makes it easier to find mistakes or understand its thinking. It mimics human thought processes, leading to more robust and transparent solutions in this field.
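A small sketch of how this might look in practice: one helper appends the step-by-step cue to a question, and another pulls the final answer out of the reasoning. The `Answer:` marker and both function names are assumptions for this example, and the model response is hard-coded rather than produced by a real API call.

```python
import re
from typing import Optional


def with_chain_of_thought(question: str) -> str:
    """Append a step-by-step cue and ask for a clearly marked final answer."""
    return (
        f"{question}\n\n"
        "Let's think step by step. "
        "After your reasoning, give the result on a final line "
        "starting with 'Answer:'."
    )


def extract_answer(response: str) -> Optional[str]:
    """Return the text after the last 'Answer:' marker, or None if absent."""
    matches = re.findall(r"^Answer:\s*(.+)$", response, flags=re.MULTILINE)
    return matches[-1].strip() if matches else None


prompt = with_chain_of_thought(
    "A train leaves at 9:15 and the trip takes 2 hours 50 minutes. "
    "When does it arrive?"
)

# Hypothetical model output, hard-coded here instead of calling a real model.
sample_response = (
    "9:15 plus 2 hours is 11:15.\n"
    "11:15 plus 50 minutes is 12:05.\n"
    "Answer: 12:05"
)
print(extract_answer(sample_response))
```

Because the reasoning lines stay in the response, you can read them to check where a wrong answer went off track.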

Direct and Concise: Zero-Shot Prompting

For straightforward tasks, you can rely on what the model already knows, without providing any examples. This is called zero-shot prompting. You provide direct instructions, and the AI draws on its broad language knowledge to generate a response. For example, “Translate ‘hello’ to Spanish.”

Zero-shot prompting is effective for simple tasks where the AI doesn’t need to learn a new pattern or style, because it relies on the model’s existing abilities. It is best suited to clear, simple requests, and it represents a foundational approach to prompt construction.

Exploring Multiple Paths: Tree-of-Thought and Maieutic Prompting

Building on Chain-of-Thought, Tree-of-Thought and Maieutic Prompting are broader methods that encourage the AI to explore multiple avenues of thought. Instead of a single linear chain, for instance, the AI might branch out, evaluate different approaches, and then converge on the optimal solution.

These techniques are particularly useful for highly ambiguous problems or creative tasks. Here, diverse perspectives can lead to superior results. Moreover, they allow the AI to fully explore possible solutions, embodying advanced prompting methodologies. Furthermore, this multifaceted approach can uncover novel insights that a linear process might overlook. This ultimately leads to more robust outputs.
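The branching idea can be sketched as a small search loop: propose several next thoughts, rate them, keep the best few, and repeat. This is only an illustration of the structure. In a real setup `propose` and `score` would each be model calls; here they are toy stand-ins (`toy_propose`, `toy_score`), and all names are hypothetical.

```python
from typing import Callable, List


def tree_of_thought(
    root: str,
    propose: Callable[[str], List[str]],
    score: Callable[[str], float],
    depth: int = 3,
    beam_width: int = 2,
) -> str:
    """Expand several lines of reasoning and keep only the most promising ones."""
    frontier = [root]
    for _ in range(depth):
        candidates = [child for state in frontier for child in propose(state)]
        if not candidates:
            break
        # Evaluate every branch, then prune to the best few.
        candidates.sort(key=score, reverse=True)
        frontier = candidates[:beam_width]
    return max(frontier, key=score)


STEPS = ["define the audience", "list key features", "add a joke", "draft the tagline"]


def toy_propose(state: str) -> List[str]:
    # Stand-in for asking a model to suggest possible next steps.
    return [f"{state} -> {step}" for step in STEPS if step not in state]


def toy_score(state: str) -> float:
    # Stand-in for asking a model to rate a partial plan.
    useful = ["define the audience", "list key features", "draft the tagline"]
    return sum(step in state for step in useful) - 0.5 * ("add a joke" in state)


best = tree_of_thought("plan", toy_propose, toy_score, depth=3, beam_width=2)
print(best)
```

A single linear chain would commit to the first step it generated; keeping a small beam of alternatives lets weaker branches be dropped before they shape the final answer.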

Building a Narrative: Contextual Prompting

For long-form generation or detailed tasks, contextual prompting involves incrementally adding background details. You might start with a general overview, and then add specific details in subsequent conversational turns, or within a single, extensive prompt. This approach fosters deeper understanding.

This method helps the AI maintain clarity and depth in its longer responses. By consistently providing pertinent information, you ensure the AI remains focused. Moreover, it integrates all necessary details into its output. In essence, it mimics a deep conversation where new information refines the discourse, a key aspect of advanced prompting.

Meta Prompting: AI Aiding Prompt Engineering

Meta prompting elevates prompt design. Here, you instruct the AI to design or refine its own prompts. For example, you might ask, “Suggest better ways to phrase this question to get a more detailed answer about quantum physics.”

Importantly, this establishes a powerful feedback loop for refinement. The AI can assist you in improving your communication with it, leveraging its knowledge of prompt types and desired outcomes. This is, therefore, an advanced method. It can significantly accelerate your learning curve in prompt creation.

Iterative Prompt Engineering: The Refinement Loop

Prompt engineering is rarely a one-shot process. Iterative prompting involves continually refining your prompts based on the initial outputs you receive. First, you generate a response. Then, you evaluate it. Subsequently, you adjust your prompt to guide the AI closer to the desired response or style.

This iterative process is pivotal for achieving optimal results, especially for complex or detailed tasks. It acknowledges that AI does not always behave predictably. Moreover, it views improvement as a core strategy. Ultimately, each iteration brings you closer to perfection in prompt refinement.

Iteration and Experimentation in Prompt Engineering

Think of prompt engineering as a scientific experiment. You hypothesize a prompt, then test it, observe the results, and refine your hypothesis. This constant cycle of testing and refinement is not merely helpful; it’s vital for optimizing AI outputs. Don’t expect perfection on the first attempt.

The nature of AI models means that small changes in words, keyword choice, or even the order of instructions can profoundly alter the output. An experimental mindset is thus key to success in prompt design.

Why Your First Prompt is Rarely Your Best

It’s tempting to think that you can craft the perfect prompt right away. However, AI models are complex systems. Their responses can be significantly influenced by subtle details. For instance, your initial prompt might be too vague, too limiting, or simply not matching how the model best handles information. Hence, initial attempts are rarely perfect.

This is precisely why your first attempt is almost always a starting point, not the definitive solution in prompt development. Rather, it offers a foundation for learning and improvement. Embrace the idea that refinement is a natural and necessary part of the process.

The Scientific Method for Prompt Engineering

To excel at prompt engineering, therefore, employ a systematic method:

- Formulate a Hypothesis: First, based on your understanding of prompt elements and techniques, craft a prompt you hypothesize will work.

- Test: Next, submit the prompt to the AI model.

- Observe: Then, carefully analyze the AI’s output. Does it meet your criteria? Where does it fall short?

- Analyze: Next, identify the specific areas where the output needs improvement. Is it missing context, demonstrating an incorrect tone, or containing factual inaccuracies?

- Refine: Subsequently, modify your prompt based on your observations and analysis. Experiment with different phrasing, add or remove components, or apply a new technique.

- Repeat: Finally, return to the first step with your refined prompt.

This continuous cycle of testing and refinement helps achieve optimal AI interactions. Ultimately, you discern the nuances of a model and harness its full potential. The more you experiment with prompting, the more proficient you become.
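The steps above can be sketched as a loop. Everything here is a stand-in: `fake_model` imitates a model call, `evaluate` returns a list of problems found in the output, and `revise` adjusts the prompt. In practice the evaluation and revision are usually done by a person, so treat this as an outline of the cycle rather than an automated solution.

```python
from typing import Callable, List, Tuple

History = List[Tuple[str, str, List[str]]]


def refine_prompt(
    prompt: str,
    model: Callable[[str], str],
    evaluate: Callable[[str], List[str]],
    revise: Callable[[str, List[str]], str],
    max_rounds: int = 5,
) -> History:
    """Run test, observe, analyze, refine until no problems remain or rounds run out."""
    history: History = []
    for _ in range(max_rounds):
        output = model(prompt)                      # Test
        problems = evaluate(output)                 # Observe and analyze
        history.append((prompt, output, problems))
        if not problems:
            break
        prompt = revise(prompt, problems)           # Refine, then repeat
    return history


def fake_model(prompt: str) -> str:
    # Hypothetical stand-in: answers in bullets only when asked to.
    if "bullet" in prompt:
        return "- Trend one\n- Trend two"
    return "Trend one and trend two are important."


def evaluate(output: str) -> List[str]:
    return [] if output.startswith("- ") else ["not formatted as bullet points"]


def revise(prompt: str, problems: List[str]) -> str:
    return prompt + " Respond in bullet points."


history = refine_prompt(
    "Summarize key digital marketing trends from 2023.",
    fake_model,
    evaluate,
    revise,
)
final_prompt, final_output, remaining_problems = history[-1]
print(len(history), final_prompt)
```

Keeping the full history, not just the final prompt, shows which change fixed which problem.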

Measuring Success in Prompt Engineering: Metrics and Insights

While prompt engineering often feels like an art, its effectiveness can and should be measured. Measuring prompt efficacy reveals its true worth. It also facilitates further improvement. Moreover, this scientific approach elevates prompt engineering, moving it from an intuition-based skill to a data-driven discipline.

Understanding these metrics allows you to set clear targets and fairly judge your prompting methods. It is therefore crucial for demonstrating the tangible benefits of your prompting efforts. In other words, it validates your work.

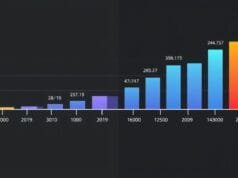

A dashboard displaying various performance metrics like accuracy, relevance, and user satisfaction, with graphs showing upward trends.

Measuring Prompt Engineering Effectiveness

Several key metrics help us assess how well a prompt performs in prompt engineering. For instance, consider these:

- Output Accuracy: How factually correct is the AI’s response? Aim for over 90%.

- Relevance: Does the response clearly address the prompt’s core question or task? Aim for over 80%.

- Efficiency: How many prompt iterations were required to reach the desired response?

- Coherence: Is the response well-organized and easy to comprehend?

- Concision: Is the response succinct and to the point, yet complete?

- User Satisfaction: Do users find the response helpful and appropriate for their needs? This is a key measure of quality in this domain.

- Response Latency: How quickly does the AI produce a reply?

By tracking these metrics, you gain a comprehensive understanding of your prompting strategy’s success. This allows you to make informed decisions about which prompting methods work best for different situations.
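As a minimal sketch of tracking such metrics, the code below averages a handful of reviewer judgments. The `EvalRecord` fields and the sample values are invented for illustration; real evaluations would need far more records and clear rules for what counts as correct or relevant.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class EvalRecord:
    correct: bool      # reviewer judged the response factually correct
    relevant: bool     # reviewer judged the response on-task
    iterations: int    # prompt revisions needed to get there
    latency_s: float   # seconds until the reply arrived


def summarize(records: List[EvalRecord]) -> Dict[str, float]:
    """Average each metric over all evaluated responses."""
    n = len(records)
    if n == 0:
        raise ValueError("need at least one record")
    return {
        "accuracy": sum(r.correct for r in records) / n,
        "relevance": sum(r.relevant for r in records) / n,
        "mean_iterations": sum(r.iterations for r in records) / n,
        "mean_latency_s": sum(r.latency_s for r in records) / n,
    }


# Made-up records for illustration only.
records = [
    EvalRecord(correct=True, relevant=True, iterations=1, latency_s=0.8),
    EvalRecord(correct=True, relevant=False, iterations=3, latency_s=1.2),
    EvalRecord(correct=False, relevant=True, iterations=2, latency_s=1.0),
    EvalRecord(correct=True, relevant=True, iterations=1, latency_s=1.0),
]
report = summarize(records)
print(report)
```

Comparing these summaries across two versions of a prompt gives a more reliable signal than judging a single response by eye.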

The Impact of Smart Prompting

Reported results suggest that effective prompt engineering can yield substantial improvements, which makes the time and effort spent on this skill a worthwhile investment.

Here’s a look at some key findings regarding the impact of effective prompting. Specifically, we observe the following:

| Metric | Improvement with Effective Prompt Engineering | Notes |
|---|---|---|
| Bias Reduction | Up to 25% | Achieved through neutral design and fairness assessments. |
| Precision, Recall, User Satisfaction | Quantitatively higher | Indicates enhanced overall output quality and user contentment. |
| Fewer Follow-Up Queries | Approximately 20% fewer | Signifies improved initial responses, reducing user effort. |
| Task Efficiency & Enhanced Outcomes | Users report greater success | Reflects improved workflow velocity and goal attainment. |
| Output Accuracy | Targets often above 90% | Strives for factual correctness. |
| Relevancy Score | Targets often exceeding 80% | Ensures the output directly addresses the prompt. |

These figures are not merely statistics; rather, they represent tangible benefits for individuals and organizations leveraging AI. Ultimately, they validate the “art and science” approach to prompting.

Navigating Variability and Inconsistency

Despite advances in the models, AI output can vary significantly from run to run, even with identical prompts. What works perfectly once might yield a slightly different result the next time. Aggregate performance metrics are useful, but they can obscure differences at the level of individual questions, which complicates prompt construction.

For example, some research suggests that how strictly you define “correct” changes the picture considerably. When GPT-4o models were required to answer correctly on every repeated attempt (a 100% correctness standard), they sometimes performed barely better than random guessing. Under a more lenient standard (e.g., correct on 51% of attempts), they performed significantly better. Aim for high accuracy, but account for the inherent limits and variability of current AI models.
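One way to see how the correctness standard changes the result is to repeat each question several times and count how many questions pass at different thresholds. The sketch below does this with invented trial outcomes; the `pass_rate` function and the data are assumptions for illustration, not results from any study.

```python
from typing import Dict, List


def pass_rate(trials: Dict[str, List[bool]], threshold: float) -> float:
    """Share of questions whose fraction of correct trials meets the threshold."""
    passed = 0
    for results in trials.values():
        if sum(results) / len(results) >= threshold:
            passed += 1
    return passed / len(trials)


# Made-up outcomes of asking each question five times with an identical prompt.
trials = {
    "q1": [True, True, True, True, True],
    "q2": [True, True, False, True, True],
    "q3": [True, False, True, False, True],
    "q4": [False, False, True, False, False],
}
strict = pass_rate(trials, 1.0)     # correct on every attempt
lenient = pass_rate(trials, 0.51)   # correct on a majority of attempts
print(strict, lenient)
```

With this sample data, only one question in four passes the strict standard while three in four pass the lenient one, even though the underlying responses are the same.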

The Dual Nature of Prompt Engineering: Art and Science

Prompt engineering is often described as both an art and a science, and for good reason. It demands creative prowess in language and expression. Additionally, it necessitates a systematic, planned approach to problem-solving. This blend ultimately renders it a fascinating yet challenging field within AI.

Understanding this duality is key to mastering this skill. It allows you to leverage both intuition and analytical skills when interacting with AI through this discipline. Hence, it’s a balanced approach.

The Creative Spark and Strategic Approach

The “art” of prompt engineering lies in the creative phrasing, the choice of analogies, and how language can influence AI comprehension. It involves exploring different tones, styles, and narrative structures to elicit the desired response. Sometimes, for example, a subtle alteration in phrasing can yield a significantly improved response. This creative aspect consequently draws on human linguistic intelligence and empathy.

The “science” aspect, however, requires a systematic, strategic approach. This encompasses understanding the inner workings of LLMs. Moreover, it entails meticulously analyzing responses to continually refine prompts. In other words, it involves hypothesis testing, data scrutiny (even informal), and incremental enhancements. This blend of intuition and rigor defines an effective prompt designer.

Your Role in Prompt Engineering as an AI Interpreter

Prompt engineers serve as a key link between end-users and LLMs. End-users have needs or questions, while LLMs possess vast knowledge but require guidance. In this role, you act as an interpreter, translating complex human needs into machine-understandable instructions. Specifically, you devise effective scripts, templates, and strategies for diverse situations. This ensures the AI’s capabilities are optimally utilized.

This bridging role is becoming increasingly vital as AI integrates into daily tasks. Prompt engineers ensure that AI’s power is utilized effectively and judiciously, transforming complex AI capabilities into tangible real-world solutions. You essentially become the AI’s guide, helping it navigate the complexities of human requests through intelligent prompt design.

Common Hurdles in Prompt Engineering and How to Overcome Them

Even with the best intentions, prompt engineering comes with its share of challenges. Recognizing these hurdles is the first step toward devising strategies to mitigate them. This consequently leads to more reliable and ethical AI interactions. Ultimately, understanding these common pitfalls can save significant frustration and yield superior results from AI.

Every prompt engineer will face these problems at some point. Therefore, preparing for them equips you to address them effectively. Foresight, indeed, is key.

Conquering Ambiguity

AI models struggle significantly with ambiguous or incomplete requests. If your prompt is open to multiple interpretations, the AI might select an unintended one. This, in turn, leads to suboptimal or unhelpful responses. For example, asking “Write about history” is excessively broad.

Strategy: Always strive for utmost specificity. Break down complex requests into smaller, clear parts. Define terms, provide context, and articulate your desired outcomes. Think about how a human might misunderstand your request. Then, explicitly clarify any potential ambiguities for the AI.

Averting Bias in AI Responses

AI models are trained on vast datasets, which frequently reflect existing societal biases. Without careful prompting, these biases can manifest in the AI’s responses. This can result in unfair, biased, or inaccurate responses. Consequently, prompt engineers play a pivotal role in mitigating this.

Strategy: Design prompts with neutrality in mind. Explicitly instruct the AI to avoid biased language or concepts. Conduct fairness assessments by providing prompts that explore diverse demographics or sensitive topics. Subsequently, scrutinize the responses for any indications of bias. Finally, refine prompts to actively promote fairness and inclusion in responses.
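A simple way to set up such a fairness check is to generate prompts that differ in exactly one detail, so the responses can be compared side by side. The sketch below only builds the prompts; judging the responses for bias still requires human review. The function name, the template, and the sample names are all hypothetical.

```python
from typing import Dict, List


def counterfactual_prompts(template: str, slot: str, values: List[str]) -> Dict[str, str]:
    """Fill one slot of a template with each value, leaving everything else identical."""
    placeholder = "{" + slot + "}"
    return {value: template.replace(placeholder, value) for value in values}


template = (
    "Write a short reference letter for {name}, a software engineer "
    "with five years of experience. Avoid assumptions about gender, age, "
    "or background."
)
prompts = counterfactual_prompts(template, "name", ["Maria", "James", "Aisha"])
for name, prompt in prompts.items():
    print(name, "->", prompt)
```

If the responses differ in tone or content when only the name changes, that difference is a candidate sign of bias worth examining and addressing in the prompt.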

Navigating Token Limits

Every LLM has a “token limit”: the maximum amount of text (input plus output) it can process at once. The limit differs from model to model. Exceeding it can truncate responses or cause errors, and long, detailed prompts or requests for extensive responses can reach it quickly, posing a significant challenge in prompt creation.

Strategy: Strive for conciseness and clarity. If necessary, decompose extensive tasks into multiple, sequential prompts. Additionally, condense extensive background details to fit within the token limit. Moreover, experiment with different models, as their token limits can vary considerably. Ultimately, ensure every word is purposeful to convey maximum meaning within the available space.
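Decomposing a long input into sequential prompts might look like the sketch below. It counts whitespace-separated words as a rough stand-in for tokens, which is an assumption: real tokenizers count differently, so leave a safety margin or use the tokenizer provided for your model. The function name `split_by_budget` is hypothetical.

```python
from typing import List


def split_by_budget(text: str, max_tokens: int) -> List[str]:
    """Split text into chunks of at most max_tokens whitespace-separated words."""
    if max_tokens < 1:
        raise ValueError("max_tokens must be positive")
    words = text.split()
    return [
        " ".join(words[start:start + max_tokens])
        for start in range(0, len(words), max_tokens)
    ]


# Placeholder background text of 25 words.
background = "word " * 25
chunks = split_by_budget(background, max_tokens=10)

# One prompt per chunk, sent in sequence.
prompts = [
    f"Part {i} of {len(chunks)}. Summarize this section:\n{chunk}"
    for i, chunk in enumerate(chunks, start=1)
]
print(len(prompts))
```

The per-chunk summaries can then be combined in a final prompt that fits comfortably within the limit.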

Taming AI Inconsistency

AI output can fluctuate even when the prompt is identical, and subtle prompt modifications can have inconsistent effects. A change might improve performance in one instance yet degrade it across a broader set of similar requests. This inconsistency can be frustrating, and it makes it hard to tell whether a refinement actually helped. Consistency, therefore, remains a paramount goal.

Strategy: Acknowledge that some variability is inherent. Focus on robust prompting methods that demonstrate efficacy most of the time. Avoid striving for 100% perfection on every occasion. Commence with comprehensive testing using diverse examples. This helps identify prompts that consistently yield robust results. Moreover, recognize that aggregate results might obscure granular issues. Ultimately, iterative testing remains your indispensable ally in this ongoing process.

The Future Landscape of Prompt Engineering

Prompt engineering is not a static field. It is rapidly evolving alongside AI technology. As AI models advance in intelligence, our methods of interaction will also transform. This constant evolution therefore promises exciting new possibilities. Furthermore, it positions prompt engineering as a pivotal skill for the future.

Keeping up with these changes will be essential for anyone seeking to leverage AI effectively. The future, indeed, holds even more intelligent and intuitive ways to guide AI, thanks to emerging innovations in this field.

Prompt Engineering: A Foundational Skill for AI Literacy

AI is integrating into almost every industry and facet of daily life. Prompt engineering is therefore becoming a foundational skill for “AI literacy.” Just as basic computer literacy is expected today, understanding how to effectively communicate with and guide AI will soon be a fundamental competency. Ultimately, it empowers individuals to harness AI’s capabilities rather than merely receiving its outputs.

This skill goes beyond tech jobs, becoming indispensable for content creators, marketers, strategists, educators, and even everyday users. It thus democratizes access to advanced AI capabilities, rendering this skill universally valuable.

Anticipating Evolving Prompt Engineering Techniques

The field of prompt engineering will continue to evolve with progress in natural language processing (NLP) and enhanced AI reasoning capabilities. New, more intuitive prompting methodologies will likely emerge. Consequently, these may require less manual refinement as models become more adept at understanding complex, nuanced requests inherently. Furthermore, the tools for automating and optimizing prompt creation will also become more sophisticated.

This means that while the core principles remain, the specific methods and optimal approaches to prompt design will change. Continuous learning and adaptation will therefore be paramount for prompt engineers.

The Rise of Multimodal and Adaptive Prompting

Emerging trends include enhanced contextual understanding in LLMs, which allows them to draw deeper insights from long texts. We are also witnessing the development of adaptive prompting methods, which dynamically adjust responses based on user input patterns, conversational history, or even real-time feedback. Imagine, for example, an AI that learns your preferred prompting style.

Furthermore, multimodal prompt engineering is becoming popular. This integrates inputs from different data modalities, such as vision and natural language. For instance, you might prompt an AI with an image and text together to create a visual description or a creative story based on the picture. This opens up new ways of interacting with AI.

Growing Demand for Prompt Engineering Expertise

As AI integrates into more industries and becomes a primary component of business operations, the demand for skilled prompt engineering experts will undeniably surge. These professionals will serve as a crucial interface between intelligent AI models and real-world applications. They will therefore translate business requirements into effective AI instructions.

Their expertise will be invaluable in maximizing the return on AI investments. They will ensure ethical deployment. They will also continually identify novel applications for AI. Ultimately, prompt engineers are emerging as indispensable architects of intelligent solutions.

Your Journey to Prompt Engineering Mastery

You now understand that prompt engineering is far more than merely typing a question into a chatbot. It is a meticulous, iterative, and profoundly powerful skill that determines the true value derived from AI. By leveraging its art and science, you therefore transform AI from an enigma into a helpful, capable partner.

The future of work and innovation will be closely linked with our proficiency in communicating with artificial intelligence. Mastering prompt engineering therefore positions you at the forefront of this revolution. Thus, embracing it is key.

Practical Tips for Getting Started with Prompt Engineering

If you’re ready to make your AI interactions better, here are some practical tips to start your path in this skill:

- Start Simple: Don’t endeavor to master every advanced technique simultaneously. Begin by focusing on clear goals, context, and specificity.

- Experiment Continually: Treat every prompt as a hypothesis. Alter one variable at a time (e.g., change a single word, add an example) and observe the outcomes.

- Maintain a Prompt Log: Document your successful prompts and the elements that contributed to their efficacy. This builds your personal “prompt library.”

- Consult AI Documentation: Understand the specific strengths and limitations of the AI models you are utilizing.

- Learn from the Community: Engage with prompting groups, forums, and experts. Observe best practices and emerging trends.
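The prompt log suggested above can be as simple as a list of structured entries saved one per line. The sketch below uses JSON Lines text; the `PromptLogEntry` fields and the sample entries are assumptions for illustration, and any note-taking format you will actually maintain works just as well.

```python
import json
from dataclasses import asdict, dataclass
from typing import List


@dataclass
class PromptLogEntry:
    prompt: str
    technique: str     # e.g. "few-shot", "chain-of-thought"
    worked: bool       # did the output meet your criteria?
    notes: str = ""    # what made it succeed or fail


def to_jsonl(entries: List[PromptLogEntry]) -> str:
    """Serialize entries as JSON Lines, one entry per line."""
    return "\n".join(json.dumps(asdict(entry)) for entry in entries)


def successful(entries: List[PromptLogEntry]) -> List[PromptLogEntry]:
    """Return only the prompts that worked: your personal prompt library."""
    return [entry for entry in entries if entry.worked]


# Illustrative entries only.
log = [
    PromptLogEntry(
        prompt="Write a product description in the style of the examples.",
        technique="few-shot",
        worked=True,
        notes="Two examples were enough to fix the tone.",
    ),
    PromptLogEntry(
        prompt="Write about history.",
        technique="zero-shot",
        worked=False,
        notes="Too broad; no audience or format given.",
    ),
]
print(to_jsonl(log))
```

Recording failures alongside successes makes it easier to see which single change turned a weak prompt into a useful one.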

Continuing Your Exploration

The landscape of AI is perpetually evolving, and so too will optimal prompt engineering methodologies. Therefore, commit to continuous learning. Engage with new models, explore new techniques as they emerge, and stay curious. Your journey to prompt mastery is ultimately an ongoing adventure.

How do you envision prompting changing the way we interact with technology in our daily lives, and what ethical considerations do you think will become most prominent?