Artificial intelligence (AI) is rapidly transforming our world. From powering self-driving cars to instantly translating languages, AI applications demand significant computing power. However, developers often face a tough choice: embrace Python for its simplicity, or opt for a faster language like C++ or CUDA for peak performance? This challenge, often called the “two-language problem,” has long troubled AI innovators.

Imagine a world where such a choice isn’t necessary, where you can write code with Python-like simplicity yet achieve speeds typically reserved for low-level languages. That is the goal of Mojo, referred to throughout this article as Mojo AI: a new programming language designed with artificial intelligence development in mind. Developed by Modular Inc., a company co-founded by Chris Lattner (the creator of Swift and LLVM), Mojo AI aims to combine developer productivity with raw performance, offering a unified approach to AI development.

Why This Language Addresses the “Two-Language Problem”

For years, AI developers have grappled with a persistent challenge. Python, with its straightforward syntax and vast ecosystem of libraries like TensorFlow and PyTorch, remains the primary choice for AI prototyping and research. It allows developers to rapidly iterate on ideas and build models. However, when these models transition from prototype to production, Python’s interpreted nature often introduces significant performance bottlenecks.

To achieve the necessary speed and efficiency for real-world AI applications, developers often rewrite performance-critical parts of their Python code using languages like C++ or CUDA. This process is time-consuming, error-prone, and requires specialized skills. It is also the heart of the “two-language problem”: teams end up managing two disparate codebases and toolchains, which slows development and complicates maintenance. Mojo AI presents a compelling solution to this challenge.

The Performance Gap: How Mojo Outperforms Python

Python’s strengths lie in its adaptability and readability. However, these advantages come with a performance cost. Python is usually run by an interpreter: the standard implementation (CPython) compiles source code to bytecode and then executes that bytecode one instruction at a time, rather than compiling it to machine code before execution. This interpretive overhead, coupled with dynamic typing, limits performance, especially in computationally intensive AI tasks.
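To make the overhead concrete, here is a minimal sketch using Python's standard `dis` module. It lists the bytecode instructions CPython produces for a one-line function and shows that the meaning of `+` is only decided at run time. The function name `add` is purely illustrative, and the exact instruction names vary between Python versions.

```python
import dis


def add(a, b):
    # No types are declared: the same bytecode must handle ints, floats,
    # strings, or any other object that defines "+".
    return a + b


# CPython compiles the function to bytecode once; its interpreter loop
# then dispatches these instructions one at a time on every call.
opnames = [instr.opname for instr in dis.get_instructions(add)]
print(opnames)

# Dynamic typing in action: "+" is resolved when the call happens.
print(add(2, 3))        # integer addition
print(add("mo", "jo"))  # string concatenation
```

Every call pays for instruction dispatch and run-time type lookups, which is the cost a compiled, statically typed code path can avoid.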

Consider this analogy: Python is like a comfortable city car, easy to drive for short trips. But for high-speed racing or heavy-duty hauling, you need something far more powerful and specialized. AI applications present similar demands. Whether for large language models or real-time image processing, they require your hardware to operate at peak efficiency. Mojo directly addresses this need, imbuing Python-like code with the speed of systems-level programming.

Core Strength: Blending Python’s Ease with Unrivaled Speed

Mojo AI is not just another new language; it is designed to become a superset of Python over time. It adopts Python’s syntax and interoperates with existing Python code, so if you know Python, you already have the foundations of Mojo AI. This offers a significant advantage: developers can build on their existing Python expertise and code, including their preferred AI frameworks, rather than learning an entirely new paradigm.

The true power of Mojo AI comes from its ability to deliver dramatic leaps in execution speed. Modular reports that Mojo AI can be significantly faster than plain Python even without low-level tuning. On some kernel-heavy workloads (kernels being small, performance-critical code segments), the company has published speedups of up to 35,000 times over standard Python, and in some cases 68,000 times. Figures of this size come from specific, highly optimized kernels rather than typical application code, but they indicate how much headroom a compiled, hardware-aware language can unlock.
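To put such multipliers in perspective, a speedup factor is simply the baseline time divided by the optimized time: at 35,000x, a job that takes 35 seconds in the baseline would finish in about 1 millisecond. The sketch below shows how such a ratio is typically measured. It compares two pure-Python approaches only (no Mojo is involved), and the helper names `speedup` and `measure` are illustrative.

```python
import timeit


def speedup(baseline_seconds, optimized_seconds):
    """Return how many times faster the optimized run is."""
    if baseline_seconds <= 0 or optimized_seconds <= 0:
        raise ValueError("timings must be positive")
    return baseline_seconds / optimized_seconds


def python_loop_sum(n):
    # Baseline: every iteration runs through the interpreter loop.
    total = 0
    for i in range(n):
        total += i
    return total


def builtin_sum(n):
    # Same result, but the loop runs inside CPython's C implementation.
    return sum(range(n))


def measure(fn, n=100_000, repeats=5):
    # Best-of-N wall-clock time for a single call, to reduce noise.
    return min(timeit.repeat(lambda: fn(n), number=1, repeat=repeats))


baseline = measure(python_loop_sum)
optimized = measure(builtin_sum)
print(f"measured speedup: {speedup(baseline, optimized):.1f}x")
```

The measured ratio depends on the machine, the input size, and how the baseline was written, which is why headline speedups are best read alongside the benchmark they come from.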

How This Language Achieves Such Dramatic Performance

The secret to Mojo AI’s speed lies in several key design choices:

- Compiled Nature: Unlike Python, Mojo AI is a compiled language. This means your Mojo AI code is transformed directly into highly optimized machine code before it runs, eliminating the interpretive overhead that plagues Python.

- Direct Hardware Access: Mojo AI is designed for intimate hardware integration. It allows developers to write code that interacts directly with AI accelerators, CPUs, and GPUs, enabling precise control for maximum performance.

- MLIR Compiler Framework: At its heart, Mojo AI leverages the Multi-Level Intermediate Representation (MLIR) compiler framework. MLIR serves as a universal translator, allowing Mojo AI to generate highly efficient code for a wide array of AI hardware. This powerful feature will be explored in more detail shortly.

Ultimately, this combination allows Mojo AI to harness the full power of your hardware, delivering the speed required for the most demanding AI tasks. Moreover, it significantly enhances developer productivity, enabling you to focus on the AI problem itself, rather than on performance optimization bottlenecks.

A Unified Stack for Diverse AI Hardware

One of Mojo AI’s most innovative aspects is its approach to hardware optimization. The landscape of AI hardware is incredibly diverse: CPUs for general tasks, GPUs for parallel processing, Tensor Processing Units (TPUs) for specialized deep learning, and various Application-Specific Integrated Circuits (ASICs). Each of these hardware types demands distinct programming approaches and toolchains. This introduces considerable complexity, often leading to vendor lock-in and fragmented development efforts.

Mojo AI directly addresses this problem. It offers a “unified programming model,” meaning you can write both high-level AI application logic and low-level, hardware-specific kernels within the same language. Consequently, you no longer need to switch between Python for your app’s logic and CUDA for your GPU-accelerated tasks. Indeed, Mojo AI abstracts away the underlying complexities, allowing you to ‘write once, run efficiently anywhere’ across diverse hardware.

The Power of MLIR: A Universal Translator for AI Hardware

At the core of this unified approach lies MLIR. Think of MLIR as a universal translator that sits between your code and the hardware: it represents a program at several levels of abstraction and progressively lowers it toward machine-specific instructions. The idea is that you write your Mojo AI code once, without needing separate implementations for NVIDIA GPUs, Google TPUs, or Intel CPUs, and the MLIR-based compiler then optimizes it for the target hardware. This capability could fundamentally alter the AI development landscape.

It means:

- No Vendor Lock-in: Firstly, developers are not constrained by a single hardware vendor’s ecosystem, nor are they tied to vendor-specific programming languages. Code written in Mojo AI can execute efficiently across a multitude of hardware types.

- Simplified Toolchains: Secondly, it significantly reduces the need for complex, hardware-specific toolchains, simplifying application development and deployment.

- Future-Proofing: Finally, as new AI hardware emerges, MLIR’s flexible design allows Mojo AI to adapt, generating efficient code for these novel architectures with greater ease.

Ultimately, MLIR helps Mojo AI consistently perform optimally, no matter the hardware. This significantly simplifies an AI developer’s workflow, allowing them to focus on the intelligence of their applications, rather than the intricacies of hardware optimization.
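The "write once, lower per target" idea can be illustrated with a toy example. The sketch below is not MLIR and uses no Modular APIs: the operation name `fma`, the two target names, and the helpers `lower` and `run` are all hypothetical. It lowers one high-level operation to different instruction lists for two imaginary targets and checks that both compute the same value.

```python
# Toy illustration only: this is NOT MLIR. All names are hypothetical.

def lower(op, target):
    """Lower a high-level ("fma", a, b, c) op, meaning a * b + c."""
    kind, a, b, c = op
    if kind != "fma":
        raise ValueError(f"unknown op: {kind}")
    if target == "has_fused_multiply_add":
        # Imaginary target with a single fused instruction.
        return [("FMA", a, b, c)]
    if target == "basic":
        # Imaginary target that needs two separate instructions.
        return [("MUL", "tmp", a, b), ("ADD", "out", "tmp", c)]
    raise ValueError(f"unknown target: {target}")


def run(instructions, env):
    """Tiny interpreter used to check that lowerings agree."""
    env = dict(env)  # copy so the caller's inputs are not modified
    for instr in instructions:
        if instr[0] == "FMA":
            _, a, b, c = instr
            env["out"] = env[a] * env[b] + env[c]
        elif instr[0] == "MUL":
            _, dst, a, b = instr
            env[dst] = env[a] * env[b]
        elif instr[0] == "ADD":
            _, dst, a, b = instr
            env[dst] = env[a] + env[b]
    return env["out"]


program = ("fma", "x", "y", "z")
inputs = {"x": 2, "y": 3, "z": 4}
for target in ("has_fused_multiply_add", "basic"):
    code = lower(program, target)
    print(target, code, "->", run(code, inputs))
```

The source program never changes; only the lowering step knows about the target. A real compiler framework does this across many levels and with far richer optimizations.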


Advanced Features Tailored for AI Excellence

Mojo AI isn’t just fast; it’s also replete with features designed to address the demanding requirements of modern AI development. These features empower developers to write AI applications that are more performant, robust, and scalable.

Efficient Memory Management in Mojo AI

In AI, especially with large datasets and complex models, efficient memory utilization is paramount. Inefficient memory usage can lead to performance degradation or even system crashes. To combat this, Mojo AI provides developers with fine-grained control over memory allocation and deallocation, much like lower-level languages such as C++ or Rust.

Mojo AI also incorporates an ownership and borrow checker, a concept popularized by Rust. This feature acts like an intelligent assistant that ensures correct and safe memory usage, significantly reducing the likelihood of hard-to-debug memory errors.
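To see the kind of bug that ownership and borrowing rules are meant to prevent, consider the Python sketch below. Python performs no such checks, so a function can silently mutate data the caller still relies on. The function and variable names are illustrative, and the example does not show how Mojo's checker would report the problem.

```python
def normalize(values):
    # Mutates the caller's list in place instead of working on a copy.
    total = sum(values)
    for i in range(len(values)):
        values[i] = values[i] / total
    return values


raw_scores = [2.0, 6.0]
probabilities = normalize(raw_scores)

# Both names refer to the same list, so the original scores are lost.
aliased = probabilities is raw_scores
print(aliased, raw_scores)

# Without compiler help, the fix relies on discipline: pass a copy.
raw_scores = [2.0, 6.0]
probabilities = normalize(list(raw_scores))
print(raw_scores, probabilities)
```

In a language with ownership checks, the function signature has to state whether it may mutate or take over its argument, so this kind of accidental sharing is caught before the program runs.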

Progressive Typing in Mojo AI: Flexibility Meets Performance

Python’s dynamic typing is highly flexible, as it doesn’t always require explicit type declarations. However, this flexibility can sometimes introduce runtime overhead and complicate error detection in large-scale projects. To offer the best of both worlds, Mojo AI introduces “progressive typing,” which allows developers to choose:

- Dynamic Typing: For rapid prototyping and flexibility, just like Python.

- Static Typing: For performance-critical sections and enhanced error checking. By explicitly declaring variable types, Mojo AI can perform more aggressive optimizations during compilation, leading to faster execution and fewer runtime errors.

In short, developers can optimize specific sections of their code for maximum speed while retaining the rapid development benefits of dynamic typing where appropriate.
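For contrast, here is a minimal sketch of how plain Python treats type declarations. CPython records annotations but neither enforces them nor uses them to optimize, which is the gap that compile-time static typing is meant to close. The function names are illustrative.

```python
def scale(x, factor):
    # Fully dynamic: works for any types that support "*".
    return x * factor


def scale_typed(x: float, factor: float) -> float:
    # The annotations are stored as metadata but not checked at run time.
    return x * factor


print(scale_typed.__annotations__)

# Passing a string where a float is declared still runs in CPython.
print(scale_typed("ab", 2))
```

A compiler that trusts declared types can reject the second call before the program runs and can generate specialized machine code for the float case.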

Built-In Concurrency and Parallelization

Modern AI workloads are inherently concurrent and parallel. For example, training deep learning models requires millions of parallelizable computations. To fully leverage multi-core CPUs and specialized accelerators, a language needs robust support for concurrency and parallelization.

Mojo AI provides built-in support for:

- Asynchronous Operations: Allows tasks to run in the background without blocking the main program’s execution.

- Concurrency: Efficiently manages multiple tasks that appear to execute simultaneously.

- Automatic Parallelization: Mojo AI can intelligently identify sections of your code that can be parallelized and optimize their execution across your hardware.

These features are crucial for high-throughput processing in AI tasks, from data preprocessing to model inference. As a result, they ensure that your AI applications can fully harness the power of today’s robust multi-core processors and accelerators.
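As a point of reference, this is what asynchronous, concurrent work looks like in plain Python with the standard `asyncio` module. It is a minimal sketch in which `asyncio.sleep` stands in for real I/O and the function names are illustrative; Mojo's own syntax is not shown.

```python
import asyncio


async def preprocess(item, delay=0.01):
    # asyncio.sleep stands in for I/O such as reading a file or a
    # network call; other tasks can run while this one waits.
    await asyncio.sleep(delay)
    return item * 2


async def preprocess_all(items):
    # gather runs the coroutines concurrently and returns results
    # in the same order as the inputs.
    return await asyncio.gather(*(preprocess(i) for i in items))


results = asyncio.run(preprocess_all([1, 2, 3]))
print(results)  # [2, 4, 6]
```

Note that this overlaps waiting, not computation. In standard CPython, CPU-bound work usually needs multiple processes to use several cores, which is one reason language-level parallelism is attractive for AI workloads.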

Language-Integrated Auto-Tuning

Optimizing AI models for diverse hardware is often a complex process that involves manually tweaking numerous parameters to find the best configuration. Mojo AI aims to simplify this with “language-integrated auto-tuning.”

This feature allows the language itself to adjust parameters for optimal performance on the underlying hardware. Instead of experimenting with various settings by hand, developers let Mojo AI search for the settings that perform best. This automation reduces development time and helps AI models perform well across diverse environments.
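The underlying idea can be sketched in a few lines of Python: try each candidate setting, measure it, and keep the fastest. This is a generic illustration of auto-tuning, not Mojo's mechanism, and the names `autotune`, `chunked_sum`, and `time_candidate` are hypothetical.

```python
import timeit


def chunked_sum(data, chunk_size):
    # The tunable parameter: how many elements to process per step.
    total = 0
    for start in range(0, len(data), chunk_size):
        total += sum(data[start:start + chunk_size])
    return total


def time_candidate(chunk_size, data):
    # Best-of-3 wall-clock time for one run with this chunk size.
    return min(timeit.repeat(lambda: chunked_sum(data, chunk_size),
                             number=1, repeat=3))


def autotune(candidates, cost):
    """Return (best_candidate, all_costs) for the lowest measured cost."""
    costs = {c: cost(c) for c in candidates}
    best = min(costs, key=costs.get)
    return best, costs


data = list(range(50_000))
best, costs = autotune([16, 256, 4096], lambda c: time_candidate(c, data))
print("best chunk size on this machine:", best)
```

The winning value differs from machine to machine, which is the point: the search runs on the target hardware rather than relying on a value chosen once by hand.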

Real-World Applications: Where Mojo AI Shines

Mojo AI’s unique blend of speed and Pythonic syntax makes it well-suited for high-performance AI tasks. Here are some key areas where Mojo AI can demonstrate its value:

- Image Recognition and Computer Vision: These tasks demand extremely rapid processing, including object detection in live video streams and analysis of complex medical images. Mojo AI’s focus on optimizing performance on GPUs and other accelerators makes it a strong fit for these visual AI tasks.

- Natural Language Processing (NLP): Similarly, understanding and generating human language requires substantial computational power, particularly with Large Language Models (LLMs). Mojo AI can significantly accelerate the training and inference phases for NLP models, leading to faster responses and more sophisticated language understanding.

- Speech Recognition: Instantly transcribing spoken words into text demands considerable processing power. Here, Mojo AI’s speed can reduce transcription latency, making real-time speech recognition more responsive.

- Reinforcement Learning: Training agents through trial and error often necessitates millions of simulations. Mojo AI can dramatically accelerate these simulations, allowing researchers to build and test novel reinforcement learning algorithms much more rapidly.

- Data Preprocessing: Before any AI model can be trained, data often needs meticulous cleaning, transformation, and augmentation. This initial step can be a significant bottleneck, but Mojo AI’s speed allows for significantly faster data handling, reducing the overall time to insight.

- End-to-End AI Workflow: Lastly, Mojo AI targets the entire AI workflow, from training deep neural networks to deploying them for real-time predictions (inference). The aim is for AI applications to be faster to develop and to perform better in deployment.

Key Applications of Mojo

In summary, Mojo AI provides a unified, high-performance language, empowering AI developers to achieve unprecedented results, unconstrained by performance limitations.

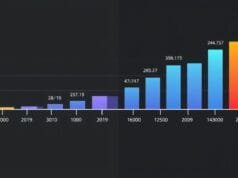

Mojo AI’s Rapid Growth and Impressive Numbers

Despite its relative youth, Mojo AI has garnered significant attention rapidly and is fostering a vibrant community. The numbers below underscore the growing interest and investment in this language of immense potential.

Modular, the company behind Mojo AI, has reported some impressive metrics:

| Metric | Value | Description |
|---|---|---|
| Active Community Members | 50,000+ | A thriving group of developers and enthusiasts actively engaging with Mojo AI. |
| Open-Source Code Lines (Ecosystem) | 750,000+ | Demonstrates significant development and contribution to Mojo AI’s surrounding tools and libraries. |
| Performance vs. Python (Unoptimized) | 12x Faster | Mojo AI is naturally much quicker than Python even without specific low-level tuning. |
| Performance vs. Python (Kernels) | 35,000x – 68,000x Faster | In critical, highly optimized AI kernel operations, Mojo AI achieves astonishing speedups over Python. |

These figures point to the potential impact of Mojo AI. A community of over 50,000 members, for example, is a strong indicator of developer enthusiasm and the perceived utility of the language. Moreover, the substantial open-source code within its ecosystem reflects a collaborative effort to build the necessary tools and libraries for broad adoption. Finally, the reported performance benchmarks, particularly for kernel-dense tasks, support Mojo AI’s promise of extreme speed for AI.

Navigating the Road Ahead for Mojo AI: Challenges and Perspectives

Mojo AI presents a compelling vision for the future of AI development. However, like any nascent technology, it faces its own set of challenges. Understanding these issues is crucial for developers considering its adoption and vital for Mojo AI’s long-term success.

Maturity and Ecosystem: The Journey of a New AI Language

Mojo AI is still in its early stages; it was first publicly previewed in May 2023. As a result, its tooling, documentation, and overall ecosystem are still maturing. Python, in contrast, has been developed for decades and boasts an unparalleled suite of libraries (think NumPy, SciPy, Pandas, scikit-learn). Mojo AI’s ecosystem is only just beginning to take shape.

For developers, this may translate to fewer off-the-shelf solutions, less extensive community support on forums, and less mature development tools. Building a robust ecosystem takes time, requiring sustained investment from Modular Inc. and contributions from a growing developer community. Early adopters will therefore be instrumental in shaping Mojo AI’s future.

The Learning Curve: Beyond Python’s Simplicity

Mojo AI’s Python-like syntax makes it approachable, especially for experienced Python developers. However, fully leveraging its high-performance capabilities often requires an understanding of lower-level systems concepts such as memory management, type systems, and direct hardware interaction. These are typically less prevalent in conventional Python development.

For a developer accustomed to Python’s abstraction layers, this might translate into a steeper learning curve. It’s a trade-off: gain exceptional performance, but potentially at the cost of investing more time in understanding hardware and system internals. However, this deeper understanding is what ultimately unlocks Mojo AI’s full potential, distinguishing it from purely high-level scripting languages.

Open-Source Status and Future Trajectory

Modular open-sourced Mojo AI’s standard library in March 2024, a positive step towards greater transparency and community involvement. However, the core compiler itself remains closed source, though Modular has stated plans to open-source it once it reaches a certain level of maturity. This closed-source core, coupled with the language’s strong ties to Modular’s AI platform (MAX), has raised questions.

For example, some developers express concerns about “vendor lock-in,” questioning whether Mojo AI might become overly intertwined with Modular’s ecosystem. Others perceive Mojo AI’s vision as “laser-focused solely on AI” and doubt its long-term general-purpose applicability beyond AI. Modular therefore needs to address these concerns and foster greater trust. Doing so will require a sustained commitment to open-sourcing critical components and a convincing demonstration of the language’s broad utility within the AI domain.

Enterprise Adoption and Integration

Securing enterprise adoption for a brand-new programming language is a formidable undertaking, requiring significant investment in training, infrastructure, and legacy code migration. Therefore, Mojo AI will need to consistently demonstrate its value proposition—namely, its superior performance, ease of use, and unified approach—to prove that such an investment yields substantial returns.

If Mojo AI remains too niche or presents significant integration hurdles with existing systems, its adoption rate might be sluggish. However, if it proves to be a clear and compelling answer to critical AI performance challenges, it could see rapid adoption as more organizations seek a competitive edge in the AI race.

Looking Ahead: The Promise for AI Development

In summary, Mojo AI represents a significant leap forward in AI programming. Its ability to optimize code for diverse hardware through MLIR is a major advantage, promising to streamline AI development and deployment like never before.

With its unified programming model and advanced features—including memory management, progressive typing, concurrency, and auto-tuning—Mojo AI emerges as a compelling solution for computationally intensive AI tasks. From accelerating data processing to enhancing model training and inference, Mojo AI promises to bring unprecedented levels of performance and efficiency to AI applications.

However, the journey ahead means navigating challenges related to its maturing ecosystem, addressing a potential learning curve, and managing its evolving open-source trajectory. Still, the core strengths of Mojo AI are undeniable. The robust community growth and remarkable performance benchmarks demonstrate that Mojo AI is more than just a fleeting trend; it’s a formidable contender poised to transform AI development. Ultimately, Mojo AI empowers developers to build faster, more efficient, and more expansive AI solutions, paving the way for the next generation of intelligent applications.

Mojo AI offers a clear and potent answer to the two-language problem, and it is poised to reshape the landscape for everyone building the future with AI.

What aspects of Mojo AI do you find most exciting, and what potential challenges do you foresee as it continues to evolve?