Optimizing your system starts with understanding Linux 6.17 performance benchmarks. This kernel release delivers significant advancements for the CPU, for several filesystems, and for GPU-accelerated computing via AMD ROCm. Kernel 6.17 offers more than minor changes: it redefines system responsiveness and data handling, which matters to enthusiasts and enterprise professionals alike. Here, we analyze kernel 6.17’s performance benefits across diverse hardware and filesystems, including OpenZFS. We also examine ROCm’s impact and provide tools for precise benchmarking.

The Heart of the System: Linux 6.17 Performance Benchmarks Unpacked

Linux kernel 6.17 is slated for release in late September 2025. It marks a significant step in kernel development, particularly for performance. Its primary aim is better performance, alongside reliability and broader hardware compatibility, so development has concentrated on refining core hardware-software interactions. As a result, early testing of Linux 6.17 points to measurable gains across diverse workloads and a better user experience.

CPU Enhancements: Smarter Scheduling and Enhanced Stability

Linux 6.17 brings notable CPU improvements, driven largely by AMD’s new Hardware Feedback Interface (HFI) driver. This update matters most for AMD Ryzen processors with heterogeneous designs that combine performance-oriented and efficiency-oriented cores. The HFI driver gives the kernel’s scheduler real-time feedback about core capabilities and workload types, which enables smarter task placement and better overall responsiveness.

Demanding applications can therefore be steered to high-performance cores, while background processes run on efficiency cores. This distribution makes the system more responsive, and the effect shows up in benchmark results. Users can also expect better energy efficiency, which is ideal for laptops, and consistently high performance for desktop workloads.
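The placement idea can be illustrated with a toy model, assuming hypothetical per-core `perf` and `eff` scores as stand-ins for the capability data HFI reports. This sketch is not the kernel scheduler and not the HFI driver’s interface. Demanding tasks pick first and take the highest-performance free core. Background tasks then take the most efficient core that remains.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Core:
    name: str
    perf: int  # hypothetical performance-capability score
    eff: int   # hypothetical efficiency-capability score


def place(tasks, cores):
    """Map task name -> core name; tasks are (name, demanding) pairs.

    Toy model: one task per core, demanding tasks choose first.
    """
    free = list(cores)
    placement = {}
    # sorted() is stable; key False (demanding) sorts before True (background).
    for name, demanding in sorted(tasks, key=lambda t: not t[1]):
        if not free:
            raise ValueError("more tasks than cores in this toy model")
        score = (lambda c: c.perf) if demanding else (lambda c: c.eff)
        best = max(free, key=score)
        free.remove(best)
        placement[name] = best.name
    return placement


if __name__ == "__main__":
    cores = [Core("P0", 100, 40), Core("P1", 95, 45),
             Core("E0", 60, 90), Core("E1", 55, 95)]
    tasks = [("compiler", True), ("indexer", False),
             ("game", True), ("sync", False)]
    print(place(tasks, cores))
```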

Intel users gain as well. Linux 6.17 consistently enables multi-core (SMP) support, which ensures that all available CPU cores can be used and removes a potential bottleneck. Updated Error Detection And Correction (EDAC) support also strengthens stability monitoring on newer Intel processors, which is vital for servers and workstations. ECC hardware corrects memory errors, and EDAC reports them, so failing memory can be identified and replaced before it causes crashes.
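On Linux, EDAC exposes error counters in sysfs. The following minimal sketch assumes the standard `/sys/devices/system/edac/mc/mcN/ce_count` and `ue_count` files are present. It collects the corrected and uncorrected error counts for each memory controller. Whether those files exist depends on the platform and on which EDAC driver is loaded.

```python
from pathlib import Path

EDAC_ROOT = Path("/sys/devices/system/edac/mc")


def edac_counts(root=EDAC_ROOT):
    """Return {controller: (corrected, uncorrected)} error counts.

    Returns an empty dict when no EDAC memory controllers are registered.
    """
    counts = {}
    for mc in sorted(root.glob("mc[0-9]*")):
        ce, ue = mc / "ce_count", mc / "ue_count"
        if ce.exists() and ue.exists():
            # int() tolerates the trailing newline sysfs files end with.
            counts[mc.name] = (int(ce.read_text()), int(ue.read_text()))
    return counts


if __name__ == "__main__":
    for name, (ce, ue) in edac_counts().items():
        print(f"{name}: corrected={ce} uncorrected={ue}")
```

A corrected count that keeps rising is an early warning sign. Any uncorrected error deserves immediate attention.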

Advancing Application Responsiveness: Proxy Execution

Linux 6.17 introduces experimental support for proxy execution, an early but promising scheduler development. It targets priority inversion. When a high-priority task blocks on a lock held by a lower-priority task, the scheduler can run the lock holder using the waiting task’s scheduling context. The lock is then released sooner, and the high-priority task is not left stalled behind unrelated medium-priority work.
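Priority inversion, and how running the lock owner on behalf of the blocked task helps, can be shown with a toy single-CPU simulation in unit time steps. This sketch is not the kernel’s implementation. The three tasks, their priorities, and their work amounts are hypothetical. L holds a mutex that H needs, and M is unrelated CPU-bound work.

```python
def simulate(proxy: bool) -> dict[str, int]:
    """Return each task's completion time in a fixed three-task scenario."""
    prio = {"H": 3, "M": 2, "L": 1}
    # L's remaining work is exactly its critical section; H needs 1 unit after the lock.
    remaining = {"H": 1, "M": 5, "L": 2}
    lock_owner = "L"  # L holds the mutex H is waiting for
    finished = {}
    t = 0
    while len(finished) < len(prio):
        live = [n for n in prio if n not in finished]
        if proxy:
            # Blocked tasks stay selectable; pick purely by priority...
            pick = max(live, key=prio.get)
            # ...and if the pick is blocked on the mutex, run the owner in its place.
            if pick == "H" and lock_owner is not None:
                pick = lock_owner
        else:
            # Classic behavior: a blocked task is simply not runnable.
            runnable = [n for n in live if not (n == "H" and lock_owner is not None)]
            pick = max(runnable, key=prio.get)
        remaining[pick] -= 1
        t += 1
        if remaining[pick] == 0:
            finished[pick] = t
            if pick == lock_owner:
                lock_owner = None  # critical section done: mutex released
    return finished


if __name__ == "__main__":
    print("without proxy:", simulate(False))
    print("with proxy:   ", simulate(True))
```

In this scenario, H completes at t=8 without proxy execution because M runs first. With proxy execution, L is run on H’s behalf and H completes at t=3.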

Proxy execution is still in its early stages, but it shows promise. It could further improve application responsiveness and reduce latency in complex, multi-threaded environments. The benefit should be greatest for applications with frequent context switching and high resource contention. It also hints at future kernel optimizations aimed at the most demanding workloads.

Long-Term Kernel Optimization: Continuous Improvement Seen in Linux Benchmark Results

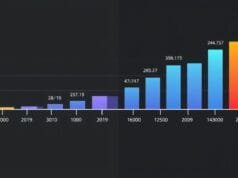

Kernel development shows a continuous optimization trend, with general improvements evident from Linux 5.15 LTS to 6.17. AMD EPYC processors in particular show a performance uplift of up to 37% over that span.

This gain is not the result of a single feature. It is the cumulative effect of many optimizations, including scheduler refinements, memory management improvements, better I/O handling, and enhanced hardware drivers. Each new kernel effectively becomes a more capable engine for the same hardware, so keeping your kernel up to date is worthwhile: over time, it unlocks more of your hardware’s potential.
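The following sketch shows why many small improvements add up to a large cumulative gain. Per-release speedups multiply rather than add. The per-release figures in the usage example are hypothetical. They are not measurements and do not reconstruct the 37% EPYC result.

```python
def cumulative_uplift(per_release_gains):
    """Combine per-release gains (0.03 == 3%) multiplicatively.

    Returns the total gain as a fraction of the starting performance.
    """
    factor = 1.0
    for gain in per_release_gains:
        factor *= 1.0 + gain
    return factor - 1.0


if __name__ == "__main__":
    # Hypothetical: ten releases, each 3% faster than the last.
    print(f"{cumulative_uplift([0.03] * 10):.1%}")
```

In this example, ten hypothetical 3% steps compound to roughly 34%, not 30%.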

Filesystem Frontiers: Storage Benchmarks on Linux 6.17

Storage performance is an unsung hero of a responsive system, because a fast CPU can only process data as quickly as storage delivers it. Linux 6.17 brings significant enhancements to key filesystems. These translate into faster application loads, quicker data transfers, and smoother overall behavior, especially on NVMe SSDs.

EXT4: The Enduring Workhorse’s Boost

EXT4 remains the default filesystem on many Linux distributions and is widely adopted and continuously evolving. In Linux 6.17 it gains noticeable performance, particularly for I/O-heavy tasks.

These improvements stem from block allocation enhancements. When a program writes data, efficient block allocation quickly assigns contiguous blocks, which minimizes fragmentation. This reduces head seeks on HDDs and extra NAND operations on SSDs, and on NVMe SSDs it allows more efficient use of parallel I/O. The result is higher throughput and lower latency, which particularly benefits tasks such as compiling large software projects or managing databases.
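Contiguous allocation reduces fragmentation, and a toy block allocator over a free-space bitmap can show why. This is a deliberately simplified model, not ext4’s multiblock allocator. The bitmap layout and request size are hypothetical. The sketch counts how many separate extents a 4-block file ends up in under two strategies.

```python
def count_extents(blocks):
    """Number of contiguous runs in a sorted list of block numbers."""
    if not blocks:
        return 0
    return 1 + sum(1 for a, b in zip(blocks, blocks[1:]) if b != a + 1)


def alloc_first_free(bitmap, n):
    """Take the first n free blocks wherever they are (fragmentation-prone)."""
    picked = [i for i, used in enumerate(bitmap) if not used][:n]
    if len(picked) < n:
        raise ValueError("not enough free blocks")
    for i in picked:
        bitmap[i] = True
    return picked


def alloc_contiguous(bitmap, n):
    """Take the first free run of n blocks; fall back to first-free if none exists."""
    run_start, run_len = 0, 0
    for i, used in enumerate(bitmap):
        if used:
            run_len = 0
            continue
        if run_len == 0:
            run_start = i
        run_len += 1
        if run_len == n:
            for j in range(run_start, run_start + n):
                bitmap[j] = True
            return list(range(run_start, run_start + n))
    return alloc_first_free(bitmap, n)


if __name__ == "__main__":
    # True = block in use. Small holes come first, then a large free region.
    disk = [True, False, True, False, False, True] + [False] * 8
    print("first-free extents:", count_extents(alloc_first_free(list(disk), 4)))
    print("contiguous extents:", count_extents(alloc_contiguous(list(disk), 4)))
```

On this bitmap, the first-free strategy scatters the file across 3 extents. The contiguous strategy places it in a single extent.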

Phoronix benchmarks confirm these gains. The site described EXT4 performance on Linux 6.17 as “extremely good,” especially for I/O-intensive workloads on NVMe SSDs, where it outperformed earlier kernels such as 6.15 and 6.16. These core filesystem improvements therefore have a tangible impact for many users.

Btrfs: Scaling for Large Files and Reduced Overhead

Btrfs is a copy-on-write (CoW) filesystem with advanced capabilities such as snapshots and checksums. Linux 6.17 brings significant Btrfs gains that focus on handling large files more efficiently, through reduced memory overhead and improved throughput for demanding operations.

A key innovation is experimental support for large data folios. A folio is the kernel’s structure for managing a physically contiguous group of one or more memory pages, so larger folios let the kernel handle bigger chunks of data as a single unit. For Btrfs, this means processing massive files, such as VM images or large video files, with fewer memory-management operations. The result is lower CPU usage and faster data operations. Users working with multi-gigabyte files benefit most, gaining performance and scalability for demanding storage workloads.
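The benefit of larger folios is easy to see from the arithmetic. The following sketch counts how many page-cache objects are needed to hold one fully cached file at several folio sizes. The 64 KiB and 2 MiB sizes are illustrative assumptions. In practice, the kernel chooses folio sizes dynamically, and it rarely caches a whole file as uniformly as this model assumes.

```python
KIB, MIB, GIB = 2**10, 2**20, 2**30


def folios_needed(file_bytes, folio_bytes):
    """Folios required to cache a whole file (ceiling division)."""
    return -(-file_bytes // folio_bytes)


if __name__ == "__main__":
    vm_image = 8 * GIB  # hypothetical 8 GiB VM image
    for size, label in [(4 * KIB, "4 KiB pages"), (64 * KIB, "64 KiB folios"),
                        (2 * MIB, "2 MiB folios")]:
        print(f"{label:>14}: {folios_needed(vm_image, size):>9,} objects")
```

For an 8 GiB file, the count drops from 2,097,152 single pages to 131,072 folios at 64 KiB and to 4,096 folios at 2 MiB. That means fewer objects for the kernel to look up, lock, and track.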

EROFS: Optimized for Read-Heavy Operations

EROFS (Enhanced Read-Only File System) is designed primarily for read-only and embedded use cases, and it received significant upgrades in Linux 6.17. It commonly serves Live CDs, Android devices, and containers, but its improvements benefit any read-heavy workload.

Key enhancements include metadata compression and much faster directory reads. Metadata compression reduces the space that filesystem metadata occupies, leaving more room for actual data. More importantly, faster directory reads accelerate tasks that list many files or search large directory hierarchies, which can translate into quicker boot times and snappier application launches. For such use cases, EROFS offers a compelling performance profile, especially where data integrity and consistent read performance are paramount.
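To check whether faster directory reads matter for your workload, you can time a full walk of a mounted image. The following minimal sketch uses `os.scandir`. The mount point is a placeholder. For cold-cache numbers, drop the page cache first (as root, `echo 3 > /proc/sys/vm/drop_caches`) and compare runs across kernels.

```python
import os
import time


def time_directory_walk(root):
    """Walk a directory tree iteratively; return (entries_seen, seconds)."""
    start = time.perf_counter()
    count = 0
    stack = [root]
    while stack:
        path = stack.pop()
        with os.scandir(path) as it:
            for entry in it:
                count += 1
                # Do not follow symlinks, so loops cannot inflate the count.
                if entry.is_dir(follow_symlinks=False):
                    stack.append(entry.path)
    return count, time.perf_counter() - start


if __name__ == "__main__":
    n, secs = time_directory_walk("/mnt/erofs-image")  # placeholder mount point
    print(f"{n} entries in {secs:.3f} s")
```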

OpenZFS on Linux 6.17: Configuration, Performance, and Best Practices

OpenZFS is a powerful filesystem known for robust data integrity, advanced snapshots, and scalability. However, its sophisticated architecture demands careful tuning on modern Linux systems, particularly with NVMe SSDs. Recent filesystem benchmarks compared OpenZFS with EXT4, Btrfs, F2FS, XFS, and Bcachefs and highlighted a nuance. EXT4 and XFS often delivered the best raw speed, whereas OpenZFS and Bcachefs sometimes produced mixed or weaker results without proper configuration.

The Criticality of OpenZFS Tuning for NVMe

OpenZFS’s default settings strongly influence storage performance, and they are not always well suited to contemporary NVMe drives, so targeted adjustments are often necessary. The ashift and recordsize parameters matter most.

- ashift (sector size): This vdev property sets the sector size ZFS assumes, expressed as a power of two (2^ashift bytes). Modern NVMe SSDs typically use 4 KB sectors. An incorrect ashift forces inefficient read-modify-write cycles and adds significant overhead. A common example is ashift=9 (512-byte sectors) on a drive that emulates 512-byte sectors but uses 4 KB physical sectors. For optimal NVMe performance, setting ashift=12 (4096 bytes) keeps ZFS writes aligned with the drive’s sectors, which minimizes unnecessary operations and maximizes throughput. Note that ashift is fixed once a vdev is created.
- recordsize: This dataset property sets the maximum size of ZFS data blocks. A recordsize that is too small for large files creates unnecessary metadata overhead. One that is too large for workloads making small, random updates inside big files forces ZFS to read and rewrite whole records. The default 128 KB suits many general-purpose servers, but adjusting this value can yield substantial gains for specific workloads such as databases or virtual disk images. Benchmarking with tailored recordsize values is highly recommended.
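Both parameters reduce to simple arithmetic, which the following sketch makes explicit. ashift is the base-2 logarithm of the sector size. The amplification estimate assumes an aligned, uncompressed write no larger than one record inside an existing file, so the whole record is rewritten. The pool name, device path, and dataset name are hypothetical. The commands are only built as argument lists, never executed. You can check a drive’s reported physical sector size in `/sys/block/<dev>/queue/physical_block_size`, but some drives misreport it.

```python
def ashift_for(sector_bytes: int) -> int:
    """ashift = log2(sector size): 512 -> 9, 4096 -> 12."""
    if sector_bytes <= 0 or sector_bytes & (sector_bytes - 1):
        raise ValueError("sector size must be a positive power of two")
    return sector_bytes.bit_length() - 1


def rmw_amplification(write_bytes: int, recordsize_bytes: int) -> float:
    """Approximate bytes rewritten per byte written (aligned, uncompressed,
    write no larger than one record inside an existing file)."""
    return max(recordsize_bytes / write_bytes, 1.0)


def zpool_create_cmd(pool, device, sector_bytes=4096):
    """Argument list for creating a single-device pool with an explicit ashift."""
    return ["zpool", "create", "-o", f"ashift={ashift_for(sector_bytes)}", pool, device]


def zfs_recordsize_cmd(dataset, size="16K"):
    """Argument list for setting a dataset's recordsize."""
    return ["zfs", "set", f"recordsize={size}", dataset]


if __name__ == "__main__":
    print(" ".join(zpool_create_cmd("tank", "/dev/nvme0n1")))  # hypothetical names
    print(" ".join(zfs_recordsize_cmd("tank/db")))
    # An 8 KiB database page update under the default 128 KiB recordsize:
    print(rmw_amplification(8 * 1024, 128 * 1024))
```

Under this model, an 8 KiB page update with a 128 KiB recordsize rewrites 16 times the data. With a 16 KiB recordsize, the factor falls to 2.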

Advanced Tunables and Recent Optimizations

OpenZFS offers many tunables beyond ashift and recordsize. The zfs_dirty_data_max module parameter, for example, is crucial. It caps how much “dirty” data (modified in RAM but not yet written to disk) ZFS accumulates. Buffering this data balances throughput against latency. If the limit is too low, writes are throttled prematurely. If it is too high, ZFS flushes large bursts that can cause latency spikes. Adjust it carefully based on system RAM and workload.
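On Linux, the current value can be read from `/sys/module/zfs/parameters/zfs_dirty_data_max`. The following minimal sketch reads it and derives a candidate value as a fraction of RAM. The fraction is your own assumption to test, not a recommendation. The sketch deliberately does not write the value, because changing it requires root and should be done only after benchmarking.

```python
from pathlib import Path

PARAM = Path("/sys/module/zfs/parameters/zfs_dirty_data_max")


def dirty_limit_for(ram_bytes: int, fraction: float) -> int:
    """Candidate zfs_dirty_data_max in bytes.

    OpenZFS documents a default of 10% of physical RAM,
    capped by zfs_dirty_data_max_max.
    """
    if not 0 < fraction < 1:
        raise ValueError("fraction must be between 0 and 1")
    return int(ram_bytes * fraction)


def current_dirty_limit(param: Path = PARAM):
    """Live value in bytes, or None if the zfs module is not loaded."""
    try:
        return int(param.read_text())
    except FileNotFoundError:
        return None


if __name__ == "__main__":
    print("current:", current_dirty_limit())
    print("candidate (64 GiB RAM, 25%):", dirty_limit_for(64 * 2**30, 0.25))
```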

OpenZFS developers continuously work on performance. Recent optimizations merged in early 2023 include uncached prefetch work that boosts sequential read speeds. By fetching data that is likely to be needed next, it reduces latency and raises throughput for suitable access patterns. These optimizations also cut CPU cycles during write operations, freeing CPU resources for other tasks and improving overall efficiency.

Essential Tools for ZFS Performance Monitoring

Identifying and resolving ZFS storage bottlenecks demands the right tools and consistent monitoring. For a quick, system-wide overview of I/O activity, the standard Linux iostat utility reports real-time CPU and per-device I/O statistics. Closer to ZFS, zpool iostat reports pool-level statistics, including read/write operations and bandwidth, plus latency with its -l option. This helps pinpoint pools or vdevs under heavy load.
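It can help to see what iostat-style numbers are made of. The following simplified sketch samples `/proc/diskstats` twice and derives per-device IOPS and throughput from the documented counters. These counters are reads and writes completed, plus sectors read and written, where a sector is always 512 bytes. It does not reproduce iostat’s utilization or latency columns.

```python
import time

SECTOR = 512  # /proc/diskstats always counts 512-byte sectors


def read_diskstats(text):
    """Map device -> (reads, sectors_read, writes, sectors_written)."""
    stats = {}
    for line in text.splitlines():
        f = line.split()
        if len(f) < 14:
            continue
        # f[0..2] = major, minor, name; f[3] reads, f[5] sectors read,
        # f[7] writes, f[9] sectors written.
        stats[f[2]] = (int(f[3]), int(f[5]), int(f[7]), int(f[9]))
    return stats


def rates(before, after, seconds):
    """Per-device IOPS and MB/s between two snapshots."""
    out = {}
    for dev, (r0, sr0, w0, sw0) in before.items():
        if dev not in after:
            continue
        r1, sr1, w1, sw1 = after[dev]
        out[dev] = {
            "read_iops": (r1 - r0) / seconds,
            "write_iops": (w1 - w0) / seconds,
            "read_MBps": (sr1 - sr0) * SECTOR / seconds / 1e6,
            "write_MBps": (sw1 - sw0) * SECTOR / seconds / 1e6,
        }
    return out


def sample(interval=1.0):
    """Take two snapshots `interval` seconds apart and return the rates."""
    with open("/proc/diskstats") as fh:
        a = read_diskstats(fh.read())
    time.sleep(interval)
    with open("/proc/diskstats") as fh:
        b = read_diskstats(fh.read())
    return rates(a, b, interval)


if __name__ == "__main__":
    for dev, r in sample().items():
        print(dev, {k: round(v, 2) for k, v in r.items()})
```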

For more dynamic and in-depth analysis, specialized tools help. ztop is an interactive, top-like utility that gives a real-time view of ZFS pool I/O, Adaptive Replacement Cache (ARC) statistics, and dataset activity, offering insight into ZFS behavior. ioztat adds iostat-style statistics for individual datasets and zvols, and zpool iostat -w prints latency histograms for a pool. Together they reveal granular I/O patterns.
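ARC statistics come from `/proc/spl/kstat/zfs/arcstats` on Linux. OpenZFS’s own arcstat utility reads the same counters. This minimal sketch parses that file, assuming its usual layout of a kstat header line, a `name type data` line, and one counter per line. It then computes the ARC hit ratio from the `hits` and `misses` counters.

```python
from pathlib import Path

ARCSTATS = Path("/proc/spl/kstat/zfs/arcstats")


def parse_kstat(text):
    """Return {counter_name: int_value} from kstat-formatted text."""
    values = {}
    for line in text.splitlines()[2:]:  # skip kstat header and column names
        fields = line.split()
        if len(fields) == 3:
            try:
                values[fields[0]] = int(fields[2])
            except ValueError:
                pass  # ignore any non-integer entries
    return values


def arc_hit_ratio(stats):
    """Fraction of ARC lookups served from cache."""
    total = stats["hits"] + stats["misses"]
    return stats["hits"] / total if total else 0.0


if __name__ == "__main__":
    stats = parse_kstat(ARCSTATS.read_text())
    print(f"ARC hit ratio: {arc_hit_ratio(stats):.1%}, size: {stats.get('size')} bytes")
```

Because these are cumulative counters, sampling them twice and comparing the difference gives the hit ratio for the interval you are benchmarking rather than since boot.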

Monitor these metrics regularly. Understanding what they mean helps you spot performance issues early and adjust your OpenZFS configuration to keep the system running at peak efficiency.

OpenZFS in the Cloud

Managed cloud services such as Amazon FSx for OpenZFS showcase the filesystem’s enterprise-grade features. Performance there depends heavily on deployment specifics and storage classes. Such offerings frequently rely on ZFS features like data compression (for example, Zstandard or LZ4) to improve effective throughput. Compression reduces the amount of data actually written and read, which boosts read-heavy workloads and saves storage costs. This enterprise adoption further validates OpenZFS’s robust design and confirms that proper configuration unlocks its full potential in high-performance scenarios.
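Compression raises effective throughput in a simple way, as the following sketch shows. If the device moves compressed bytes at a given physical rate, applications see that rate multiplied by the compression ratio. Python’s standard-library `zlib` stands in for LZ4 or Zstandard here, which are not in the standard library. The 500 MB/s physical figure is hypothetical. The model also ignores the CPU cost of compression and decompression.

```python
import zlib


def compression_ratio(data: bytes, level: int = 6) -> float:
    """Logical size / compressed size, using zlib as a stand-in codec."""
    return len(data) / len(zlib.compress(data, level))


def effective_throughput(physical_MBps: float, ratio: float) -> float:
    """Logical MB/s delivered when the device transfers compressed bytes."""
    return physical_MBps * ratio


if __name__ == "__main__":
    sample = b"timestamp=2025-09-28 level=INFO msg=request served\n" * 2000
    r = compression_ratio(sample)
    print(f"ratio {r:.1f}x -> {effective_throughput(500.0, r):.0f} MB/s logical")
```

Highly repetitive data, such as logs, benefits enormously. Already-compressed media gains almost nothing.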

Accelerating with ROCm: GPU Computing and Indirect CPU Impact

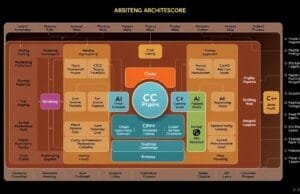

AMD’s ROCm platform (Radeon Open Compute) is rapidly emerging as a critical open-source software stack for high-performance GPU computing on Linux. Its main mission is to accelerate tasks on AMD GPUs, particularly in AI, machine learning, and scientific simulation.

ROCm’s core focus is GPU performance, but it inevitably involves the CPU, which orchestrates GPU tasks. That indirect role significantly affects overall system performance and how CPU performance should be tested.

The ROCm Software Stack

ROCm offers a comprehensive software stack of compilers, libraries, and tools that let developers harness the parallel processing power of AMD GPUs. Its open-source nature makes it a particularly attractive alternative to proprietary solutions such as NVIDIA’s CUDA, and it fosters innovation and transparency in development. The platform also integrates CPU and GPU work closely, supporting efficient data transfers and synchronized task execution. This is the hallmark of effective heterogeneous computing.

The Indirect CPU Impact of Heterogeneous Computing

ROCm advancements focus primarily on GPU acceleration, but the CPU still plays a pivotal role by orchestrating complex operations. In heterogeneous computing, the CPU acts as the host. It manages memory, launches GPU kernels, and moves data between system RAM and GPU VRAM. CPU bottlenecks, often in CPU-GPU communication or caused by inefficient scheduling, can therefore significantly slow ROCm applications, even when the GPU itself runs at peak efficiency.
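Amdahl’s law, a standard model that the source text does not itself invoke, shows why host-side work caps the benefit of a faster GPU. Treat CPU-side orchestration (kernel launches, transfers, synchronization) as the part of the runtime that does not get faster. The fractions and speedup factors below are hypothetical.

```python
def amdahl_speedup(accelerated_fraction: float, accel_factor: float) -> float:
    """Overall speedup when only `accelerated_fraction` of runtime gets
    `accel_factor` times faster and the rest (here, host-side work) does not."""
    if not 0.0 <= accelerated_fraction <= 1.0:
        raise ValueError("fraction must be within [0, 1]")
    return 1.0 / ((1.0 - accelerated_fraction) + accelerated_fraction / accel_factor)


if __name__ == "__main__":
    for host_share in (0.10, 0.01):  # hypothetical share of time spent on the CPU side
        s = amdahl_speedup(1.0 - host_share, 50.0)
        print(f"host share {host_share:.0%}: overall speedup {s:.1f}x with a 50x faster GPU")
```

In this model, 10% host-side time limits a 50x faster GPU to about 8.5x overall, and no GPU can push the result past 10x. Cutting host time to 1% raises the result to about 33.6x.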

ROCm benchmarking tools illuminate these interactions. The ROCm Validation Suite (RVS) includes modules that exercise GPU compute capabilities, measuring FLOPS, memory bandwidth, and interconnect performance. TransferBench evaluates data transfer speeds between GPUs and, critically, between the CPU and the GPU.

Because the CPU manages data movement, slow transfers often point to the host side. A CPU struggling with I/O, or high PCIe latency, can limit overall performance even with a powerful GPU. These ROCm-specific tests therefore reveal how effectively the CPU feeds GPU workloads on Linux 6.17.
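As a rough first check before running TransferBench, you can time host-to-GPU copies from Python. This minimal sketch assumes a ROCm build of PyTorch, which exposes AMD GPUs through the `torch.cuda` API. It returns `None` if PyTorch or a GPU is unavailable. The buffer size and repeat count are arbitrary, only one direction is measured, and the result is not comparable to TransferBench’s methodology.

```python
import time


def gbps(nbytes: float, seconds: float) -> float:
    """Bytes over seconds, expressed in GB/s (10**9 bytes per second)."""
    return nbytes / seconds / 1e9


def host_to_device_gbps(nbytes: int = 256 * 2**20, repeats: int = 10):
    """Time pinned host->GPU copies; None if no usable GPU build of PyTorch."""
    try:
        import torch
    except ImportError:
        return None
    if not torch.cuda.is_available():  # ROCm builds of PyTorch also use torch.cuda
        return None
    host = torch.empty(nbytes, dtype=torch.uint8).pin_memory()
    dev = torch.empty(nbytes, dtype=torch.uint8, device="cuda")
    dev.copy_(host, non_blocking=True)  # warm-up copy
    torch.cuda.synchronize()
    start = time.perf_counter()
    for _ in range(repeats):
        dev.copy_(host, non_blocking=True)
    torch.cuda.synchronize()  # wait for all queued copies before stopping the clock
    return gbps(nbytes * repeats, time.perf_counter() - start)


if __name__ == "__main__":
    print("host->device GB/s:", host_to_device_gbps())
```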

Significant Strides in ROCm Performance

Recent ROCm versions demonstrate substantial performance uplifts. According to AMD, ROCm 7 delivers up to 3.5x higher inference performance and up to 3x higher training throughput on AMD Instinct MI300X GPUs compared with ROCm 6. AMD attributes these gains to better GPU utilization, optimized data movement, and refined software libraries that make better use of the hardware architecture.

ROCm increasingly rivals NVIDIA’s CUDA as a viable option for AI and HPC workloads. AMD’s accelerators often offer more VRAM, which is critical for massive AI models, and the open ecosystem attracts developers seeking flexibility.

Ongoing development keeps ROCm competitive. For instance, ROCm’s OpenCL performance has been benchmarked on AMD Ryzen AI Max+ “Strix Halo” systems running Linux 6.17, where it competes strongly with Mesa’s Rusticl OpenCL driver. This reflects an active effort to optimize AMD’s entire Linux compute stack, from integrated graphics to dedicated accelerators, so users can make full use of their AMD hardware.

The Art of Measurement: Linux Benchmarking Tools and Methodologies

Accurately assessing Linux kernel performance across CPU, filesystems, and GPU compute requires a structured approach and the right tools, and even then raw numbers can mislead. Context, consistency, and a thorough understanding of each benchmark are essential for drawing meaningful, reliable conclusions.

System-wide and CPU Benchmarking

Evaluating overall system and CPU performance requires tools that generate diverse loads. Sysbench is a versatile suite for testing CPU, memory, file I/O, and database performance, and it is well suited to quick, targeted CPU assessments. Geekbench, a popular benchmark, measures single-core and multi-core CPU performance and provides useful comparative scores that often correlate with real-world application behavior. stress-ng rigorously stresses CPU, memory, I/O, and disk subsystems, which makes it invaluable for stability testing and for confirming robustness under extreme loads.
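The following sketch imitates the idea behind sysbench’s CPU test, which checks numbers for primality up to a limit (`--cpu-max-prime`, 10000 by default) and reports events per second. It is an illustrative Python workload, not sysbench’s code. Its absolute numbers are not comparable to sysbench’s, but it is useful for A/B comparisons on the same machine, for example across kernels.

```python
import time


def count_primes(limit: int) -> int:
    """Count primes <= limit by trial division (deliberately CPU-bound)."""
    count = 0
    for n in range(2, limit + 1):
        d = 2
        while d * d <= n:
            if n % d == 0:
                break
            d += 1
        else:  # loop finished without finding a divisor: n is prime
            count += 1
    return count


def events_per_second(limit: int = 10000, duration: float = 1.0) -> float:
    """Repeat the prime check for `duration` seconds; report completed events/s."""
    events = 0
    start = time.perf_counter()
    while time.perf_counter() - start < duration:
        count_primes(limit)
        events += 1
    return events / (time.perf_counter() - start)


if __name__ == "__main__":
    print(f"{events_per_second():.2f} events/s")
```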

For more comprehensive and automated analysis, the Phoronix Test Suite (PTS) is the most extensive benchmarking platform available for Linux. PTS automates the entire process, from test installation and execution to detailed result reporting. Its large catalog of tests covers CPU, GPU, storage, networking, and kernel performance, within a framework designed for reproducible results. That makes PTS well suited to in-depth performance analysis and to comparisons across hardware configurations.
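When comparing two kernels across many tests, a common convention is to summarize the per-test ratios with a geometric mean. The arithmetic mean would let one large ratio dominate. This minimal sketch assumes you already have per-test results for a baseline and a candidate configuration. The test names and values in the usage example are hypothetical.

```python
import statistics


def relative_score(baseline, candidate, lower_is_better=frozenset()):
    """Geometric mean of per-test candidate/baseline ratios.

    Values above 1.0 mean the candidate is faster overall. Tests named in
    `lower_is_better` (times, latencies) have their ratio inverted.
    """
    ratios = []
    for test, base in baseline.items():
        new = candidate[test]
        ratios.append(base / new if test in lower_is_better else new / base)
    return statistics.geometric_mean(ratios)


if __name__ == "__main__":
    old = {"compile_s": 120.0, "fio_randread_iops": 400000.0}  # hypothetical
    new = {"compile_s": 110.0, "fio_randread_iops": 440000.0}
    print(f"{relative_score(old, new, {'compile_s'}):.3f}x")
```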

Filesystem and Storage Benchmarking

For detailed storage performance insights, tools that simulate diverse I/O patterns are crucial. fio (Flexible I/O Tester) is the industry standard, offering exceptional flexibility and granular control. You can define custom read/write patterns, block sizes, queue depths, and I/O engines to measure IOPS, throughput, and latency precisely. IOzone is another comprehensive tool that generates and measures a wide variety of file operations, yielding detailed metrics for different access patterns.
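fio workloads are usually described in job files. The following sketch generates one from Python so parameters can be varied systematically between runs. It uses standard fio options (`ioengine`, `direct`, `time_based`, `runtime`, `rw`, `bs`, `iodepth`, `numjobs`, `size`, `filename`). The job name, file path, and default values are hypothetical choices. Point `filename` at a scratch file. Aiming a write workload at a raw device destroys its data.

```python
def fio_job(name, filename, rw="randread", bs="4k", iodepth=32,
            numjobs=4, size="4G", runtime=60):
    """Return the text of a fio job file for one workload."""
    lines = [
        "[global]",
        "ioengine=libaio",   # Linux native asynchronous I/O
        "direct=1",          # bypass the page cache (O_DIRECT)
        "time_based",        # run for `runtime` even if `size` is covered
        f"runtime={runtime}",
        "group_reporting",   # aggregate statistics across numjobs
        "",
        f"[{name}]",
        f"filename={filename}",
        f"rw={rw}",
        f"bs={bs}",
        f"iodepth={iodepth}",
        f"numjobs={numjobs}",
        f"size={size}",
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    with open("randread.fio", "w") as fh:
        fh.write(fio_job("nvme-randread", "/mnt/test/fio.dat"))  # hypothetical path
```

You can then run it with `fio randread.fio --output-format=json` and keep the JSON output alongside your other results.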

Other tools offer more targeted insights. Bonnie++ remains a useful legacy benchmark that measures basic filesystem and disk performance, including data transfer rates for various file sizes. For quick, simple checks, the dd command reports basic sequential read and write speeds and is often used for initial sanity tests or raw block device measurements.
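A quick sequential-write check is only meaningful if the data actually reaches storage. This sketch writes a file, calls `fsync` before stopping the clock, and then deletes the file. That is roughly what `dd ... conv=fdatasync` achieves. The sketch writes random bytes, because zeros would compress away on filesystems like ZFS with compression enabled. The path and sizes are placeholders.

```python
import os
import time


def seq_write_MBps(path, total_mib=256, block_kib=1024):
    """Sequentially write `total_mib` MiB, fsync, delete the file; return MB/s."""
    block = os.urandom(block_kib * 1024)  # incompressible payload
    blocks = (total_mib * 1024) // block_kib
    start = time.perf_counter()
    with open(path, "wb") as fh:
        for _ in range(blocks):
            fh.write(block)
        fh.flush()               # push Python's buffer to the kernel
        os.fsync(fh.fileno())    # wait until the kernel has written it to storage
    elapsed = time.perf_counter() - start
    os.remove(path)
    return blocks * len(block) / elapsed / 1e6


if __name__ == "__main__":
    print(f"{seq_write_MBps('/mnt/test/seqwrite.bin'):.0f} MB/s")  # placeholder path
```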

Best Practices for Effective Benchmarking

Meaningful and reproducible Linux benchmark results require adhering to best practices. Consider these:

- Consistent Testing Environment: Keep the test system isolated and consistent. Close unnecessary applications, disable background services, and run tests on a freshly booted system. Temperature can also significantly affect results, especially for CPU-intensive tasks, because a hot processor may throttle its clock speed.

- Multiple Iterations and Averaging: Avoid relying on a single benchmark run. Performance fluctuates with system events, caching, and scheduler decisions, so run each benchmark several times (for example, 3 to 5 runs). Report the mean or median together with the spread. The median is less sensitive to outliers than the mean.

- Realistic Workloads: Micro-benchmarks isolate specific characteristics, such as single-core integer performance. Application-level benchmarks, by contrast, mimic real-world workloads such as compiling software or rendering video, and they give a truer picture of the user experience. Balance both types for a comprehensive view.
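The iteration advice above can be captured in a small harness. It performs warm-up runs, then timed runs, and then reports the mean, the median, the standard deviation, and the coefficient of variation (CV). This is a minimal sketch. The function being timed is whatever workload you pass in, and what counts as an acceptable CV is a judgment call for your setup.

```python
import statistics
import time


def repeat_benchmark(fn, runs=5, warmup=1):
    """Time `fn` after warm-up; return summary statistics in seconds."""
    for _ in range(warmup):
        fn()  # warm caches, JITs, and CPU frequency before measuring
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        fn()
        samples.append(time.perf_counter() - start)
    mean = statistics.mean(samples)
    stdev = statistics.stdev(samples) if runs > 1 else 0.0
    return {
        "runs": runs,
        "mean": mean,
        "median": statistics.median(samples),  # robust to a single outlier run
        "stdev": stdev,
        "cv_percent": 100.0 * stdev / mean if mean else 0.0,  # noise indicator
    }


if __name__ == "__main__":
    print(repeat_benchmark(lambda: sorted(range(200000), reverse=True)))
```

A high CV is a signal to investigate background activity or thermal throttling before trusting the numbers.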

Advanced Benchmarking Principles

- Thorough Documentation: Document every aspect of your methodology. This covers hardware (CPU, RAM, storage), kernel version, software versions, kernel tunables, benchmark settings, and environmental conditions. Good records ensure reproducibility, aid accurate interpretation, and make your findings more credible.

- Scalability and Comparability: Your methodology should show how performance changes as load increases, and your results should be comparable with other systems for broader context. A single benchmark can mislead because it ignores complex interactions between components. A holistic view that combines several metrics and tests gives the most accurate picture of a system’s capabilities.

Conclusion

Linux kernel 6.17 marks a significant advancement, bringing noticeable performance enhancements across the board and faster, more reliable, more responsive computing. Key improvements include refined CPU scheduling, thanks to AMD’s new Hardware Feedback Interface (HFI) driver, and better Intel support. Filesystem efficiency also improves substantially for EXT4, Btrfs, and EROFS. Combined with the long-term gains seen across kernel versions, these results highlight Linux’s robust development process.

Deep Dive into Performance Technologies

OpenZFS provides exceptional data integrity and advanced features, but unlocking its full potential on modern NVMe storage requires careful tuning. Optimizing ashift and recordsize is crucial, because improper configuration can make even a powerful filesystem underperform significantly. Ongoing OpenZFS optimizations and robust monitoring tools show a clear commitment to maximizing its performance.

AMD’s ROCm platform is quickly becoming a strong force in Linux GPU computing. Its main focus is accelerating AI and machine learning, but ROCm’s progress also underscores the CPU’s vital role in managing heterogeneous workloads efficiently. AMD reports significant performance gains with ROCm 7, and the platform’s open ecosystem offers developers and researchers a powerful, competitive way to use AMD GPUs for demanding computational tasks.

Optimizing Linux system performance is a continuous effort. Users can unlock more of their hardware’s potential by adopting recent kernel releases such as Linux 6.17, configuring filesystems like OpenZFS carefully, and leveraging ROCm. Rigorous benchmarking confirms that these changes deliver peak performance and stability, and it points to a bright future for high-performance computing on Linux.