Imagine you’re a data explorer, venturing into vast, unknown areas of information. To understand and map these areas, you need strong tools. In Python’s data analysis world, Pandas and NumPy are your essential compass and machete. These two libraries form the core of modern data science workflows. Pandas and NumPy help you gather, clean, transform, and analyze data with ease. Ultimately, they help you uncover key insights.

This article covers the strengths of NumPy and Pandas, explains how the two libraries work together, and shows how to combine them for effective data analysis. You’ll learn not just what Pandas and NumPy do, but also why they matter and how to apply them in real situations. Get ready to turn raw data into useful knowledge with Pandas and NumPy!

Understanding NumPy: Essential for Python Data Science

NumPy, short for Numerical Python, is the fundamental library for scientific computing in Python. Think of it as the powerful engine under the hood of your data analysis vehicle. Its primary function is to work with large, multi-dimensional arrays and matrices with high speed and efficiency. If your data is mostly numerical, especially if it’s very big and complex, NumPy is your best choice. Indeed, a strong understanding of NumPy is foundational for mastering data analysis tasks.

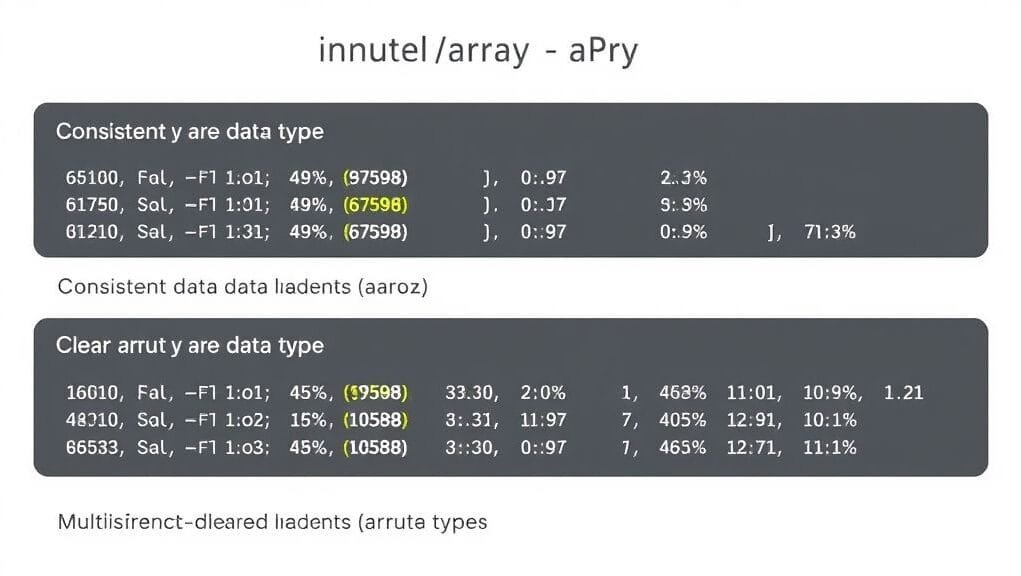

NumPy’s power comes from its core data structure, the `ndarray`, a multi-dimensional array. Unlike standard Python lists, all elements in a NumPy array share the same data type. This homogeneity lets NumPy store values in a compact block of memory, which makes it fast and saves space. Additionally, much of NumPy is written in C, so its calculations run much faster than equivalent pure-Python code.
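As a small illustration of that homogeneity, the sketch below builds arrays from ordinary Python lists. The variable names are made up for this example.

```python
import numpy as np

# A Python list can mix ints and floats; an ndarray stores a single dtype.
mixed_list = [1, 2.5, 3]
arr = np.array(mixed_list)

print(arr.dtype)            # float64: the ints are upcast so all elements match
print(arr.ndim, arr.shape)  # number of dimensions and size along each one

# A two-dimensional array built from nested lists, with an explicit dtype.
matrix = np.array([[1, 2, 3], [4, 5, 6]], dtype=np.int32)
print(matrix.shape)         # (2, 3)
print(matrix.itemsize)      # bytes per element: 4 for int32
print(matrix.nbytes)        # total bytes: 6 elements * 4 bytes
```

Because every element has the same size, the total memory is simply the element count times `itemsize`.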

NumPy Vectorization: Boosting Performance for Data Processing

One of NumPy’s most important features is its support for vectorized operations: applying a mathematical function to a whole array at once, with no explicit Python loop. Imagine finding the square root of every number in a list of a million numbers. A standard Python loop would be slow and awkward. NumPy can do this on the entire array in a single call that runs in compiled code. This ability is crucial for high-performance data manipulation.

Vectorization greatly speeds up computation, and the gain becomes more evident as datasets grow. It’s like having a team that works on a whole batch of data at once instead of one item at a time. For tasks involving linear algebra, Fourier transforms, or other heavy numerical work, NumPy is indispensable. It is the computational backbone for many [advanced machine learning and AI uses](https://www.simplilearn.com/tutorials/python-tutorial/python-libraries-for-data-science).
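The square-root scenario can be sketched as follows. This is a minimal comparison, not a benchmark; the array contents are arbitrary.

```python
import math
import numpy as np

values = np.arange(1_000_000, dtype=np.float64)

# Loop version: one Python-level function call per element.
roots_loop = [math.sqrt(v) for v in values]

# Vectorized version: a single call that processes the whole array.
roots_vec = np.sqrt(values)

# Both approaches produce the same numbers.
print(np.allclose(roots_loop, roots_vec))
```

Wrapping each version in `timeit` on your own machine is the most reliable way to see the size of the difference.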

NumPy’s Core Components for Scientific Computing

NumPy arrays are very flexible and well suited to numerical tasks such as generating numerical sequences and reshaping data for specific algorithms. They also support data analyses such as calculating averages, medians, standard deviations, and correlations across massive datasets. Many other scientific and data analysis libraries in Python, including Pandas, are built directly on top of NumPy. They often take NumPy arrays as input or convert their own data structures into NumPy arrays for internal calculations.

Therefore, understanding NumPy is not just about mastering a single library. It means understanding the numerical computation model that supports most of Python’s data science world and serves as the foundation for more advanced data structures and analysis tools.
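The tasks mentioned above (sequences, reshaping, summary statistics, correlation) might look like this in practice. The data is synthetic and chosen only to keep the results easy to check.

```python
import numpy as np

seq = np.arange(12)                  # integers 0 through 11
grid = seq.reshape(3, 4)             # same data viewed as 3 rows by 4 columns
evenly = np.linspace(0.0, 1.0, 5)    # 5 evenly spaced points from 0 to 1

# Summary statistics over the whole array or along one axis.
print(grid.mean(), np.median(grid), grid.std())
print(grid.mean(axis=0))             # one mean per column

# Pearson correlation between two perfectly linearly related arrays.
x = np.array([1.0, 2.0, 3.0, 4.0])
y = 2 * x + 1
print(np.corrcoef(x, y)[0, 1])
```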

Pandas: Efficient Data Handling for Analysis

If NumPy is the powerful engine, then Pandas is the easy-to-use control panel and comfy cabin of your data analysis vehicle. Pandas is a high-level Python library made for working with and analyzing structured, tabular data. While NumPy excels with purely numerical data, Pandas offers tools to handle the mixed types, labels, and gaps found in real datasets. Because Pandas builds on NumPy, the two work well together.

Pandas introduces two main data structures: the Series and the DataFrame. A Series is like a single column of data: a one-dimensional, labeled array. A DataFrame is a two-dimensional, table-like structure, much like a spreadsheet or a SQL table. With labeled rows and columns, DataFrames let you organize and manipulate your data intuitively. This structure makes Pandas a natural fit for real data sources such as CSV files, Excel spreadsheets, or database tables, while NumPy’s computational efficiency does much of the work underneath.
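A minimal sketch of both structures, using invented names and values:

```python
import pandas as pd

# A Series: one-dimensional data with a label for each value.
ages = pd.Series([34, 27, 45], index=["alice", "bob", "carol"], name="age")
print(ages["bob"])

# A DataFrame: a table with labeled rows and labeled columns.
df = pd.DataFrame(
    {"age": [34, 27, 45], "city": ["Oslo", "Lima", "Pune"]},
    index=["alice", "bob", "carol"],
)
print(df.loc["carol", "city"])   # look up a cell by row label and column label
print(type(df["age"]))           # each column of a DataFrame is a Series
```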

Pandas DataFrames: The Core for Structured Data Analysis

DataFrames are key to Pandas. Each column can hold a different data type: one column might contain text, another numbers, and yet another dates. This flexibility matters because real-world datasets are rarely exclusively numerical. Pandas also offers a rich set of functions for working with DataFrames. For instance, you can read data from various file formats, check its structure, and inspect its contents.

Consider a dataset of customer information. It might include names (text), ages (numbers), and purchase dates (datetime objects). A Pandas DataFrame can store and manage all these types together, and its labeled axes (row and column names) make accessing and interpreting your data straightforward.
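The customer example could be sketched like this. The CSV content is invented, and it is read from an in-memory string so the snippet runs without any file on disk; with a real file you would pass its path to `pd.read_csv` instead.

```python
import io
import pandas as pd

# Hypothetical customer data standing in for a CSV file.
csv_text = """name,age,purchase_date
Ana,31,2024-01-15
Ben,45,2024-02-03
Chloe,28,2024-02-20
"""

customers = pd.read_csv(io.StringIO(csv_text), parse_dates=["purchase_date"])

print(customers.dtypes)   # one dtype per column: text, integer, datetime
print(customers.head())   # first rows, for a quick look at the contents

# Select names of customers older than 30 using column labels.
over_30 = customers.loc[customers["age"] > 30, "name"].tolist()
print(over_30)
```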

Simplifying Data Wrangling with Pandas Libraries

One of Pandas’ greatest strengths is its comprehensive suite of functions for data wrangling. Data wrangling (also called data cleaning or data prep) transforms raw, untidy data into a clean, usable format for analysis or machine learning. Pandas offers powerful, intuitive methods for:

- Handling Missing Values: Datasets often have gaps. Pandas helps you find these missing values (shown as `NaN`, Not a Number, which comes from NumPy). You can then fill them with a value (such as the column average) or remove rows or columns with a significant number of missing entries.

- Data Cleaning: Finding and fixing errors, inconsistencies, or anomalies.

- Transformation: Changing data types, applying functions to columns, or creating new features.

- Filtering and Selection: Quickly selecting specific rows or columns based on rules or names.

- Sorting and Aggregating: Arranging data by values or grouping data to find totals like sums, averages, or counts.

- Merging and Joining: Combining multiple datasets based on common keys, much like SQL joins.

- Reshaping: Pivoting or unpivoting data to change its layout.

These capabilities make Pandas an invaluable tool for any data professional. It simplifies tasks that would be laborious and time-consuming in basic Python or with raw NumPy arrays alone, and it does so while relying on NumPy’s computational power underneath.
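A few of the operations in the list above can be sketched together. The tables and column names here are invented for illustration, and the snippet covers only missing values, filtering, sorting, merging, and aggregating.

```python
import numpy as np
import pandas as pd

orders = pd.DataFrame(
    {
        "order_id": [1, 2, 3, 4],
        "customer_id": [10, 11, 10, 12],
        "amount": [20.0, np.nan, 35.0, 50.0],
    }
)
customers = pd.DataFrame(
    {"customer_id": [10, 11, 12], "region": ["north", "south", "north"]}
)

# Missing values: count them, then fill with the column mean.
n_missing = orders["amount"].isna().sum()
orders["amount"] = orders["amount"].fillna(orders["amount"].mean())

# Filtering and sorting: keep larger orders, biggest first.
large = orders[orders["amount"] >= 35.0].sort_values("amount", ascending=False)

# Merging on a shared key (like a SQL left join), then aggregating per group.
merged = orders.merge(customers, on="customer_id", how="left")
totals = merged.groupby("region")["amount"].sum()
print(totals)
```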

The Indispensable Partnership: Pandas and NumPy

While NumPy and Pandas have different primary objectives, their true power emerges when you use them together. They are not rivals but complementary tools that form a robust, integrated data analysis system in Python. Pandas is built directly on top of NumPy: for the common numeric data types, DataFrames and Series store their data in NumPy arrays. So when you perform operations in Pandas, you are often using NumPy’s high-speed numerical capabilities without realizing it.

Think of it this way: Pandas gives you the structured, labeled data environment and the intuitive methods to manipulate it. NumPy provides the raw, fast computing power that executes these manipulations, especially on numerical data inside your DataFrames. This division of labor is fundamental to high-performance data science in Python, and it is the primary way Pandas and NumPy get results.
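You can see this relationship directly. The sketch below uses a made-up DataFrame with plain `float64` columns; columns using Pandas extension types may behave differently.

```python
import numpy as np
import pandas as pd

df = pd.DataFrame(
    {"height_cm": [170.0, 182.5, 165.0], "weight_kg": [65.0, 80.0, 58.5]}
)

# Ask a column for its data as a NumPy array.
heights = df["height_cm"].to_numpy()
print(type(heights), heights.dtype)

# NumPy functions accept a Series directly and return a Series,
# so the row labels are preserved.
log_weights = np.log(df["weight_kg"])
print(type(log_weights))
```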

The Symbiotic Relationship of Pandas and NumPy

You might wonder when to use which. Generally, you’ll start with Pandas for structured data manipulation, cleaning, and exploration. Pandas lets you load different file types, check data, handle missing values, filter rows, and group data with ease. For high-speed numerical computation, NumPy often takes the lead, especially on homogeneous blocks of data within your DataFrame.

For example, suppose you apply a mathematical function to a column of numbers in a Pandas DataFrame. The work is typically carried out on the column’s underlying NumPy array, so it runs at NumPy’s speed. Alternatively, you can extract a numerical column as a NumPy array for a specialized scientific computation and then add the results back into your DataFrame. This interchange makes data workflows using Pandas and NumPy both robust and adaptable.
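The round trip described above (extract a column, compute with NumPy, put the result back) might look like this. The standardization step is just one example of a numerical computation, and the column names are hypothetical.

```python
import numpy as np
import pandas as pd

df = pd.DataFrame(
    {"sensor": ["a", "b", "c", "d"], "reading": [2.0, 4.0, 6.0, 8.0]}
)

# 1. Extract the numerical column as a plain ndarray.
readings = df["reading"].to_numpy()

# 2. Do the math in NumPy: here, standardize to zero mean and unit variance.
zscores = (readings - readings.mean()) / readings.std()

# 3. Add the result back as a new, labeled column.
df["reading_z"] = zscores
print(df)
```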

Optimizing Performance and Memory in Pandas and NumPy Workflows

Understanding the interplay between both libraries is key for efficient data analysis, especially with very large datasets. For data that is purely numerical and homogeneous in type, NumPy generally offers better performance and lower memory use, because its arrays store items of one type compactly. Pandas DataFrames are more flexible with different column types, and that flexibility brings some additional memory overhead.

However, which library performs “better” depends heavily on the specific operation and the size of your data. Here’s a general guideline to consider for Pandas and NumPy:

| Characteristic | NumPy | Pandas |
|---|---|---|
| Primary Use | Numerical computing, scientific tasks | Structured data manipulation, analysis |
| Data Structure | Homogeneous `ndarrays` | Heterogeneous `DataFrames`, `Series` |
| Memory Efficiency | Generally higher (compact storage) | Generally lower (more overhead) |
| Best Performance | Smaller numerical datasets (e.g., <50,000 rows, depending on operation) | Larger structured datasets (e.g., >=500,000 rows, depending on operation) |
| Ease of Use | Lower level, requires more manual operations | Higher level, intuitive methods |

It’s important to remember that these are general points. For very large datasets, Pandas often works better than manual Python loops over raw NumPy arrays, because its methods rely on optimized C-based implementations. Conversely, for simple, direct numerical tasks on small, homogeneous arrays, NumPy might be a bit faster. The key is to use the strengths of each: use Pandas for overall data organization and routine cleaning tasks, and let NumPy handle the intensive numerical computations underneath, or turn to it directly when you need its array-focused features.
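To check the memory side of this trade-off on your own data, you can compare sizes directly. This is a rough sketch with synthetic data; the exact overhead depends on your Pandas version and the column types involved.

```python
import numpy as np
import pandas as pd

n = 100_000

# A homogeneous float64 array: exactly 8 bytes per element.
arr = np.arange(n, dtype=np.float64)
print(arr.nbytes)

# The same numbers in a DataFrame, next to a text column.
df = pd.DataFrame({"value": arr, "label": ["row"] * n})

# deep=True also counts the memory held by the text values.
usage = df.memory_usage(deep=True)
print(usage)
print(usage.sum())
```

The numeric column costs the same as the raw array; the extra memory comes from the index and the text column.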

Real-World Applications of Pandas and NumPy

The combined power of Pandas and NumPy makes them indispensable for a wide range of data analysis tasks. They help you turn raw data into actionable insights, prepare it for visualization, and feed it into machine learning models. Let’s explore some key applications where these libraries excel.

Exploratory Data Analysis with Pandas and NumPy

Exploratory Data Analysis (EDA) is the key first step in any data project. It means summarizing the main characteristics of a dataset, often with charts and graphs. The goal is to understand patterns, find anomalies, and formulate hypotheses. Both Pandas and NumPy play pivotal roles here.

With Pandas, you can effortlessly:

- Calculate basic stats like counts, averages, standard deviations, minimums, maximums, and quartiles (including the median) for all numerical columns with a single `.describe()` call. Modes are available separately through `.mode()`.

- Count unique values in a column (`.value_counts()`) to understand how categories are spread.

- Filter data to explore specific subsets that might show interesting trends.

- Find relationships between different columns using correlation methods.

NumPy, meanwhile, provides the underlying mathematical functions that Pandas uses for these statistical summaries. If you need more complex or specialized statistical calculations directly on arrays, NumPy’s many statistical functions are available. Together, Pandas and NumPy let you answer specific questions quickly by examining patterns and summaries, which sets up your more in-depth analysis.
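The EDA steps listed above can be sketched on a tiny, invented dataset:

```python
import pandas as pd

df = pd.DataFrame(
    {
        "species": ["cat", "dog", "cat", "bird", "dog", "cat"],
        "weight_kg": [4.0, 20.0, 5.0, 0.5, 25.0, 4.5],
        "age_years": [3, 5, 4, 1, 7, 2],
    }
)

# Summary statistics for the numerical columns only.
summary = df.describe()
print(summary)

# How often each category appears.
counts = df["species"].value_counts()
print(counts)

# A subset worth a closer look.
heavy = df[df["weight_kg"] > 4.5]

# Pairwise correlation between numerical columns.
corr = df[["weight_kg", "age_years"]].corr()
print(corr)
```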

Data Preparation using Pandas and NumPy

Data cleaning and preprocessing are often the longest but most important steps in data analysis. Messy data, after all, leads to inaccurate conclusions. Fortunately, Pandas and NumPy offer robust tools to make this process easier.

- Handling Missing Values: As described earlier, Pandas helps you find gaps (shown as `NaN`), fill them with a sensible value such as the column average, or remove rows or columns with too many missing entries.

- Outlier Detection and Transformation: You might use mathematical methods (using NumPy) to find extreme values (outliers) that could skew your analysis. Pandas then lets you remove or transform them.

- Type Conversion: Pandas makes it easier to transform data into the appropriate format, such as turning a column of text into numbers or dates.

This careful prep makes sure your data is clean, consistent, and ready for advanced models or visualization. It’s like preparing a fancy meal: even the best chef needs good, ready ingredients.
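Here is a minimal sketch of type conversion followed by outlier removal. The data is invented, and the 1.5 × IQR rule is just one common convention for flagging outliers, chosen here for illustration.

```python
import numpy as np
import pandas as pd

# Raw data where every column arrived as text.
raw = pd.DataFrame(
    {
        "price": ["10.5", "11.0", "9.8", "250.0", "10.2"],
        "sold_on": ["2024-03-01", "2024-03-02", "2024-03-03",
                    "2024-03-04", "2024-03-05"],
    }
)

# Type conversion: text to numbers and text to dates.
raw["price"] = pd.to_numeric(raw["price"])
raw["sold_on"] = pd.to_datetime(raw["sold_on"])

# Outlier detection with NumPy: flag values far outside the interquartile range.
q1, q3 = np.percentile(raw["price"], [25, 75])
iqr = q3 - q1
is_outlier = (raw["price"] < q1 - 1.5 * iqr) | (raw["price"] > q3 + 1.5 * iqr)

# Pandas boolean indexing drops the flagged rows.
cleaned = raw[~is_outlier]
print(cleaned)
```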

Advanced Data Applications for Pandas and NumPy

The usefulness of Pandas and NumPy goes far beyond basic cleaning and summarization. Indeed, their applications are vast.

- Statistical Analysis: Finding measures of central tendency (average, median), spread (standard deviation, variance), and correlations is simple. NumPy provides the core functions, and Pandas makes these calculations readily accessible on structured data.

- Time Series Analysis: Pandas is exceptionally proficient with time-series data. It offers dedicated functions for dates and times, and it helps with changing data frequency (like turning daily data into weekly averages), computing rolling stats, and analyzing temporal patterns. This makes it very useful for financial data, sensor readings, or any dataset where time is a critical factor.

- Integration with Other Libraries: Both Pandas and NumPy are foundational to the Python data science ecosystem. They work with popular charting tools like Matplotlib and Seaborn, so you can build visualizations directly from DataFrames. They also feed machine learning libraries: Scikit-learn accepts NumPy arrays and DataFrames as input, and TensorFlow and PyTorch can convert NumPy arrays into their own tensor types. Your cleaned Pandas DataFrames or raw NumPy arrays are a natural starting point for building predictive models.
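The time-series operations mentioned in the list above (changing frequency and rolling statistics) can be sketched as follows, using two weeks of synthetic daily readings:

```python
import numpy as np
import pandas as pd

# Fourteen daily readings starting on Monday, 1 January 2024.
days = pd.date_range("2024-01-01", periods=14, freq="D")
daily = pd.Series(np.arange(14, dtype=float), index=days, name="reading")

# Change frequency: daily values to weekly averages.
weekly_mean = daily.resample("W").mean()
print(weekly_mean)

# Rolling statistic: mean over a sliding 3-day window.
# The first two entries are NaN because the window is not yet full.
rolling_3d = daily.rolling(window=3).mean()
print(rolling_3d.head())
```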

Mastering Your Workflow: Best Practices for Pandas and NumPy Efficiency

To truly harness the power of Pandas and NumPy, it’s not enough to know what they do. You must also understand how to use them effectively. Sound practices will keep your data analysis fast, trustworthy, and scalable, and they apply across all Pandas and NumPy projects.

Strategic Tool Selection: When to Use Pandas vs. NumPy

The first best practice is to choose the right tool for the job.

- For high-level data manipulation, cleaning, and grouping of structured data, lean on Pandas. Its DataFrame structure and extensive suite of tools are designed for these tasks. Replicating them with raw NumPy arrays would be far more laborious and error-prone.

- For direct numerical computations, especially on large, homogeneous arrays, NumPy is your champion. If you need matrix multiplications, Fourier transforms, or element-wise mathematical operations on a whole array, turn to NumPy. Its vectorized functions provide the best performance.

- Finally, use their seamless integration. Often, you’ll start with Pandas for loading and initial exploration. Then, you might extract a specific column or part of your DataFrame as a NumPy array (e.g., `df["columnname"].to_numpy()`, or the older `df["columnname"].values`) for a specialized numerical algorithm that needs a raw array. Afterward, you can add the results back into your DataFrame. This fluid workflow is a sign of proficient Pandas and NumPy use.

Maximizing Efficiency in Pandas and NumPy Projects

Efficiency goes beyond just speed. It also involves writing clear, maintainable code.

- Embrace Vectorization (NumPy’s Strength): Avoid explicit Python loops when a vectorized NumPy or Pandas operation can do the same thing. This is perhaps the most important rule for speed when working with Pandas and NumPy. Instead of iterating through a DataFrame to multiply values, use `df["column"] * 2`.

- Handle Missing Data Thoughtfully: Don’t remove all rows with `NaN` values indiscriminately. Evaluate the impact first. Sometimes filling with an average, a median, or a more careful imputation method is better. Pandas provides excellent tools for this, often using NumPy functions.

- Optimize Memory Usage: For very large datasets, pay attention to data types. Pandas allows you to downcast numerical columns (e.g., from `float64` to `float32`, or from `int64` to `int16`) if the values fit. This can greatly reduce memory use. Also consider the `category` data type for text columns with few unique values.

- Chain Operations: Pandas allows you to chain multiple operations together in a single expression. This makes your code shorter and often easier to read. For example: `df.dropna().groupby("category").mean(numeric_only=True)`.

- Profile Before Optimizing: If you find performance bottlenecks, profile your code. Use Python’s built-in `timeit` module or other tools to pinpoint the exact sources of slowdown. This will tell you whether a particular NumPy or Pandas operation needs optimization.
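Several of these practices can be sketched together. The DataFrame below is synthetic, its column names are invented, and the timing figures will differ from machine to machine, so no specific speedup is claimed.

```python
import timeit
import numpy as np
import pandas as pd

n = 10_000
df = pd.DataFrame(
    {
        "category": np.tile(["a", "b", "c", "d"], n // 4),
        "value": np.linspace(0.0, 1.0, n),
        "count": np.arange(n) % 100,
    }
)
before = df.memory_usage(deep=True).sum()

# Optimize memory: smaller numeric types and a category column.
df["value"] = df["value"].astype("float32")
df["count"] = pd.to_numeric(df["count"], downcast="integer")  # smallest int type that fits
df["category"] = df["category"].astype("category")
after = df.memory_usage(deep=True).sum()
print(before, after)

# Profile: compare a Python-level loop with the vectorized equivalent.
loop_time = timeit.timeit(lambda: [v * 2 for v in df["value"]], number=5)
vec_time = timeit.timeit(lambda: df["value"] * 2, number=5)
print(loop_time, vec_time)

# Chain operations: drop gaps, group, and average in one expression.
means = df.dropna().groupby("category", observed=True)["value"].mean()
print(means)
```

Note that `float32` trades precision for memory, so downcasting floats is only appropriate when the reduced precision is acceptable for your analysis.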

By using these best practices, you won’t just do data analysis. Instead, you’ll do efficient and effective data analysis. In turn, this will help you make robust solutions that can address real-world problems with Pandas and NumPy.

Conclusion: Mastering Data Science with Pandas and NumPy

In the vast landscape of data science, Pandas and NumPy stand as foundational pillars. Each offers distinct yet complementary strengths. Specifically, NumPy provides the raw computing power. It excels at high-speed numerical computations on homogeneous arrays. It is truly a workhorse for scientific computing. Pandas, on the other hand, offers a user-friendly and highly flexible framework for structured data manipulation. It makes the complex task of data cleaning, transformation, and analysis remarkably accessible. Together, Pandas and NumPy form a formidable partnership.

Pandas uses NumPy’s speed for its underlying operations, while NumPy’s capabilities become more accessible and organized through Pandas’ DataFrames. This synergy lets you quickly process, clean, and analyze diverse datasets, which streamlines every step of the data science workflow and helps you reach meaningful insights. Mastering Pandas and NumPy is not just about acquiring tools. It’s about gaining a fundamental fluency in the language of data.

What is the hardest data analysis problem you’ve faced where Pandas and NumPy were very important? Share your experiences and thoughts below!