Have you ever thought about the quiet hero behind so many modern advances? It powers the visuals on your screen, rapid progress in AI, and scientific simulations that push the limits of what we know. That unsung hero is the Graphics Processing Unit, or GPU. It started as a humble component tasked solely with drawing pixels. Today it is a vital, general-purpose parallel processor.

Imagine a world where your computer’s main brain, the Central Processing Unit (CPU), had to handle every visual detail and every heavy math problem on its own. It would be slow, cumbersome, and deeply frustrating. Fortunately, the GPU stepped in and took on more and more of this demanding work. The evolution of the GPU is a story of specialization that, paradoxically, unlocked incredible versatility and transformed modern computing.

The Dawn of Digital Graphics: Early Visions and Specialized Circuits

The journey of the GPU goes back further than many people think. It began with the earliest attempts to display data on screens. Those efforts laid the groundwork for the digital displays we use today and, gradually, for more engaging and detailed visuals.

From Whirlwind to Arcade Screens (1950s-1970s)

The first ideas for graphics processing emerged in the 1950s. MIT’s Whirlwind computer, originally conceived as a flight simulator, was among the first machines to show real-time output on a cathode-ray tube display. This early work proved that computers could generate and display images. The images were rudimentary by today’s standards, but they were a significant breakthrough.

As technology advanced, specialized graphics components began to emerge in the 1970s, often inside arcade machines, where they powered early video games with simple yet engaging visuals. RCA’s “Pixie” video chip, launched in 1976, is a good example. It offered basic video output and helped bring graphics to early home systems.

The PC Arrives: 2D Text and Basic Images (1980s)

The arrival of personal computers in the 1980s was a significant leap forward because it brought graphics to a much wider audience. IBM’s Monochrome Display Adapter (MDA) and Color Graphics Adapter (CGA), both launched in 1981, were pivotal. These adapters delivered text and basic 2D images to homes and offices, with a primary focus on business applications and simple software.

However, these early graphics cards had notable limitations: low screen resolution and a restricted color palette. Demanding visual tasks remained out of reach. The cards offered a glimpse of digital interaction, but they were not designed for the vibrant, dynamic visuals we expect today, and computing remained largely confined to 2D. Yet the desire for better graphics kept growing, and it drove the next phase in the evolution of the GPU.

The 3D Revolution: Gaming Ignites Innovation

Gaming was the catalyst for the modern GPU. The growing video game industry of the 1990s demanded more than simple 2D images; players wanted immersive, three-dimensional worlds. That demand accelerated graphics technology development and made the decade a pivotal phase in the GPU’s history.

The Rise of Dedicated Graphics (1990s)

Before dedicated 3D graphics cards, the CPU struggled to render complex 3D scenes. It was not designed for the enormous number of calculations needed to transform geometry, apply textures, and light objects in real time, many times per second. As a result, early 3D games often looked rudimentary, ran slowly, or both. Specialized hardware became necessary.

This is where companies like 3dfx Interactive stepped in, changing the landscape with the Voodoo Graphics card in 1996. The Voodoo card was a breakthrough for consumers because it made high-performance 3D graphics accessible to the mainstream. Games ran far more smoothly and looked significantly better, offering an immersive experience previously unseen on home computers. Its impact was immediate and profound.

The Voodoo card quickly established 3dfx as a dominant early player in the young 3D graphics market. Gamers eagerly upgraded their computers to experience the next level of graphical fidelity. Intense competition among manufacturers pushed the boundaries of what was technologically feasible and rapidly improved chip design and rendering techniques. In the process, it laid the architectural foundations for today’s GPUs.

Defining the GPU: NVIDIA’s Vision and Architectural Leaps

The late 1990s and early 2000s saw the graphics market consolidate and mature. This period also produced a clear idea of what a true “Graphics Processing Unit” (GPU) was. The era was not only about faster performance; it was about integrating more functions onto a single chip, which made the GPU a more powerful and autonomous component.

Coining the Term (Late 1990s)

The term “GPU” gained widespread recognition through NVIDIA in 1999, with the launch of the GeForce 256. NVIDIA defined a GPU as a single chip with integrated engines for transform, lighting, triangle setup/clipping, and rendering, a definition that signaled a pivotal shift. Previously, the CPU performed many of these tasks, which often created performance bottlenecks. By consolidating them onto a single chip, the GeForce 256 handled a greater portion of the graphics pipeline on its own and significantly reduced the CPU’s workload. This enabled far more complex and fluid 3D graphics. The chip could also process over 10 million polygons per second, an unprecedented feat at the time.

The Era of Programmable Shaders (Early 2000s)

Initially, GPUs relied on “fixed-function hardware”: specific parts of the chip were dedicated to single, predefined tasks, such as calculating how light interacted with an object or determining the color of each pixel. This approach was effective for basic tasks but offered limited flexibility, and developers wanted to create novel and distinctive visual effects.

A pivotal change arrived in the early 2000s with the advent of programmable shaders, which profoundly altered graphics rendering and gave artists and developers unprecedented creative control. Microsoft’s DirectX 8.0 (2000) and subsequent OpenGL updates provided the software interfaces for these capabilities. They allowed developers to write small, specialized programs known as “shaders” that executed directly on the GPU and dictated how it processed vertices and pixels.

NVIDIA’s GeForce 3, launched in 2001, was a significant early consumer GPU with programmable vertex and pixel shaders. A custom program could now run for each individual pixel, where previously a fixed rule had determined its color. As a result, developers could craft far more intricate lighting, shadow, and texture effects, which considerably enhanced the realism of video games and other graphical applications.
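To make the fixed-function versus programmable distinction concrete, here is a minimal Python sketch. It only imitates the idea on the CPU; real shaders are written in shading languages and run on the GPU. The names `render`, `fixed_function_shade`, and `checker_shader` are hypothetical and chosen purely for illustration.

```python
# Conceptual sketch only: imitates per-pixel shading on the CPU.
# All function names are hypothetical, not part of any graphics API.

def fixed_function_shade(x, y, base_color):
    """Fixed-function style: every pixel gets the same built-in rule."""
    return base_color


def checker_shader(x, y, base_color):
    """Programmable style: a small custom program decides each pixel's color."""
    r, g, b = base_color
    if (x // 2 + y // 2) % 2 == 0:
        return (r, g, b)
    return (r // 2, g // 2, b // 2)  # darken alternate 2x2 tiles


def render(width, height, shader, base_color=(200, 100, 50)):
    """Run the given shader once per pixel and return rows of RGB tuples."""
    return [[shader(x, y, base_color) for x in range(width)]
            for y in range(height)]


if __name__ == "__main__":
    print(render(4, 4, fixed_function_shade)[0])  # one flat-colored row
    print(render(4, 4, checker_shader)[0])        # a row with alternating tiles
```

The renderer itself never changes; only the function passed in does. That is the flexibility programmable shaders gave developers.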

Unifying the Pipeline: A Game-Changer (Mid-2000s)

The evolution of shaders culminated in another significant architectural advance: the unified shader architecture. ATI’s Xenos chip in the Xbox 360 used the approach in 2005, and NVIDIA’s GeForce 8 series, built on the G80 chip, brought it to PC graphics cards in 2006. The design eliminated the distinction between different types of shader units. Previously, GPUs had separate hardware units: vertex shaders processed geometry, while pixel shaders determined surface appearance.

With a unified architecture, any shader unit on the GPU could be dynamically allocated to any type of work, whether vertex, geometry, or pixel processing. This greatly improved the GPU’s efficiency and flexibility. Vertex units no longer sat idle while pixel units were saturated; the GPU could shift its processing power to wherever it was needed and keep its hardware far better utilized. For developers, this meant more freedom to optimize graphics code and to create more sophisticated visual effects.
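The efficiency argument can be illustrated with a toy model in Python. This is a rough sketch under strong assumptions, not a description of real hardware: each unit finishes one work item per cycle, scheduling is perfect, and the unit counts and workloads are made-up numbers.

```python
import math

# Toy model: one work item per unit per cycle, perfect scheduling.
# Unit counts and workloads are illustrative assumptions only.

def split_pipeline_cycles(vertex_work, pixel_work, vertex_units, pixel_units):
    """Dedicated units: each pool can only process its own kind of work."""
    return max(math.ceil(vertex_work / vertex_units),
               math.ceil(pixel_work / pixel_units))


def unified_pipeline_cycles(vertex_work, pixel_work, total_units):
    """Unified units: any unit can take any work item."""
    return math.ceil((vertex_work + pixel_work) / total_units)


if __name__ == "__main__":
    # Same 32 units in both designs: 8 vertex + 24 pixel, or 32 unified.
    for name, v, p in [("pixel-heavy", 100, 900), ("geometry-heavy", 600, 400)]:
        split = split_pipeline_cycles(v, p, vertex_units=8, pixel_units=24)
        unified = unified_pipeline_cycles(v, p, total_units=32)
        print(f"{name}: split={split} cycles, unified={unified} cycles")
```

In this model the split design is only efficient when the workload happens to match the fixed ratio of units, while the unified design takes the same time regardless of the mix.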

Beyond Pixels: The General-Purpose GPU Takes Center Stage

While the GPU was perfecting digital worlds, a subtle yet profound transformation was brewing. Researchers began to recognize a crucial characteristic of the GPU’s design: its immense parallel processing capability. This realization would propel the GPU far beyond its original purpose and usher in the era of general-purpose computing on graphics processing units (GPGPU).

Discovering Parallel Power (Mid-2000s)

GPUs inherently perform many calculations concurrently, which was true of both fixed-function and later programmable architectures. Consider the task of rendering millions of pixels on a screen. A CPU, like a single highly skilled artist, works through them largely one after another, albeit very quickly. A GPU operates like a large team of artists, each painting a small section at the same time, which speeds up the whole job considerably. This parallel architecture, with hundreds and later thousands of simple cores, caught the attention of scientists and engineers.

These researchers recognized that the GPU’s capacity for concurrent computation extended far beyond graphics. It was ideal for any problem that could be decomposed into many small, independent tasks. The same architecture could accelerate scientific computations, data analysis, and numerous other computationally intensive problems that had previously bottlenecked CPU-based systems.
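The phrase “many small, independent tasks” can be shown with a short Python sketch. It uses CPU threads purely to illustrate independence, so it will not show GPU-like speedups; the image data and the `brighten_*` helper names are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor

# Illustration of decomposing a job into independent tasks.
# Python threads stand in for parallel workers; this is not GPU code.

def brighten_row(row, amount=40):
    """One independent task: brighten a single row of grayscale pixels."""
    return [min(255, p + amount) for p in row]


def brighten_sequential(image):
    """Process rows one after another."""
    return [brighten_row(row) for row in image]


def brighten_parallel(image, workers=4):
    """Process rows concurrently; no row depends on any other row."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() returns results in the original row order.
        return list(pool.map(brighten_row, image))


# A small made-up 8x6 grayscale image.
image = [[(x * y) % 256 for x in range(8)] for y in range(6)]

if __name__ == "__main__":
    print(brighten_parallel(image) == brighten_sequential(image))
```

Because each row can be computed without looking at any other, the work can be spread across as many workers as are available, which is the property GPUs exploit at a much larger scale.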

The CUDA Breakthrough and OpenCL (2006-2009)

The potential for GPUs to perform diverse tasks was clear, but a significant hurdle remained: programming them for non-graphics applications was exceedingly difficult. Existing graphics APIs such as DirectX and OpenGL were designed for rendering, not for scientific simulations. Then, in 2006, NVIDIA introduced CUDA (Compute Unified Device Architecture).

CUDA provided a comprehensive development environment that let developers write parallel code for NVIDIA GPUs in familiar languages such as C, C++, and Fortran. This was a critical juncture. It opened GPGPU computing to developers well beyond graphics experts, who could now harness GPUs for a multitude of non-graphics applications, and it catalyzed a wave of innovation.
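For readers who have not seen the CUDA programming model, the following Python sketch emulates its core idea: the same small function (a “kernel”) runs once per thread, and each thread works out which element it owns from its block and thread indices. This is plain Python that runs serially, not the CUDA API; `launch` and `vector_add_kernel` are hypothetical names used only for illustration.

```python
# Serial emulation of a CUDA-style kernel launch. Not real CUDA code:
# `launch` is a hypothetical stand-in that loops over every thread index.

def launch(kernel, grid_dim, block_dim, *args):
    """Call the kernel once for every (block, thread) pair."""
    for block_idx in range(grid_dim):
        for thread_idx in range(block_dim):
            kernel(block_idx, block_dim, thread_idx, *args)


def vector_add_kernel(block_idx, block_dim, thread_idx, a, b, out):
    """Each 'thread' adds exactly one pair of elements."""
    # Global index, mirroring the common CUDA idiom
    # blockIdx.x * blockDim.x + threadIdx.x
    i = block_idx * block_dim + thread_idx
    if i < len(out):  # guard: the grid may contain more threads than elements
        out[i] = a[i] + b[i]


n = 10
a = list(range(n))
b = [10 * v for v in range(n)]
out = [0] * n

block_dim = 4
grid_dim = (n + block_dim - 1) // block_dim  # enough blocks to cover n items
launch(vector_add_kernel, grid_dim, block_dim, a, b, out)

if __name__ == "__main__":
    print(out)
```

On a real GPU the iterations of the two loops in `launch` would run concurrently across many cores rather than one after another.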

Following CUDA’s success, the Khronos Group published the OpenCL (Open Computing Language) 1.0 specification in late 2008, and the first implementations followed in 2009. OpenCL provided a more vendor-agnostic framework designed to run across diverse hardware, including CPUs and GPUs from different makers such as AMD and Intel. The standard offered developers a more open and unified approach to general-purpose parallel programming and accelerated the adoption of GPGPU technology across a wide range of computing systems.

Reshaping Industries: The GPU’s Impact Across Modern Computing

The GPGPU revolution transformed the landscape of computing. GPUs are no longer confined to gaming or media applications; they have become indispensable accelerators in some of the most challenging and transformative fields in the modern world. Their parallel processing strength has unlocked possibilities once deemed impossible or prohibitively expensive to compute.

Powering the AI Revolution

GPGPU computing has reshaped AI and machine learning more dramatically than any other field, and GPUs are widely recognized as the primary engine driving AI advancement. Training deep neural networks requires an immense volume of parallel mathematical operations, chiefly matrix multiplications, which is exactly what GPUs do best.

Without GPUs, breakthroughs in image recognition, natural language processing, and real-time inference would have been significantly hindered, perhaps even stalled. Recognizing this, companies like NVIDIA have focused on optimizing GPUs for AI workloads and have integrated specialized hardware such as Tensor Cores. These dedicated units significantly accelerate the mixed-precision arithmetic commonly used in deep learning, which solidifies the GPU’s pivotal role in the AI revolution and shapes the direction of future GPU designs.
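Mixed-precision arithmetic is easier to appreciate with a small experiment. The Python sketch below is a simplified illustration, not a model of how Tensor Cores are implemented: it rounds inputs to 16-bit floats, then compares keeping the running sum in 16 bits against keeping it in 32 bits. The helper names are hypothetical; the rounding relies on the standard library’s `struct` formats `e` (half precision) and `f` (single precision).

```python
import struct

# Simplified illustration of mixed precision: low-precision inputs,
# higher-precision accumulation. Helper names are hypothetical.

def to_fp16(x):
    """Round a Python float to the nearest half-precision (16-bit) value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def to_fp32(x):
    """Round a Python float to the nearest single-precision (32-bit) value."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


def dot_fp16_accumulate(a, b):
    """Dot product with both the products and the running sum kept in FP16."""
    total = 0.0
    for x, y in zip(a, b):
        product = to_fp16(to_fp16(x) * to_fp16(y))
        total = to_fp16(total + product)
    return total


def dot_mixed_precision(a, b):
    """Dot product with FP16 inputs but an FP32 running sum."""
    total = 0.0
    for x, y in zip(a, b):
        total = to_fp32(total + to_fp16(x) * to_fp16(y))
    return total


ones = [1.0] * 4096

if __name__ == "__main__":
    print("FP16 accumulator:", dot_fp16_accumulate(ones, ones))
    print("FP32 accumulator:", dot_mixed_precision(ones, ones))
```

The 16-bit running sum stops growing once it can no longer represent the next integer, while the 32-bit accumulator reaches the exact answer. Keeping inputs small and accumulators wide is the basic trade-off behind mixed-precision training.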

Accelerating Scientific Discovery

GPUs have also transformed scientific research and high-performance computing (HPC). Scientists can now run complex simulations far faster than with CPUs alone, which is crucial for fields such as physics, astrophysics, biology, and climate science.

For example, GPUs enable the modeling of complex molecular dynamics, support more precise climate modeling, and power simulations of the cosmos. By rapidly processing massive datasets and executing intricate computations, scientists can test hypotheses more quickly and uncover new insights. This accelerates the pace of discovery across many scientific disciplines.

Unlocking Data Insights

In our data-intensive world, the rapid processing and analysis of vast amounts of information is paramount. GPUs offer a powerful answer: their parallel architecture significantly accelerates the manipulation and visualization of large datasets, which is invaluable for data professionals.

GPUs speed up data analysis by accelerating tasks such as database queries, complex statistical analyses, and interactive data visualizations. Businesses and researchers can therefore derive actionable insights from their data more rapidly, which supports quicker decision-making and a deeper understanding of complex trends.

Driving Digital Economies

GPUs have also fostered the growth of new digital economies beyond science and traditional business. Cryptocurrency mining emerged as a prominent application because GPUs excel at the highly parallel hash computations that mining requires, and they were widely adopted for mining various digital currencies. This demand caused significant market fluctuations and periods of supply shortage, and it highlighted the GPU’s unforeseen influence in financial technology.

Furthermore, GPUs are increasingly deployed in cloud computing environments. Cloud providers offer high-performance GPU services that give users access to powerful computing capabilities without significant capital investment in hardware. This reduces up-front costs for businesses and lets them scale processing power as needed, making advanced computing more accessible than ever before. For instance, a small startup can rent GPU capacity for AI training instead of purchasing expensive hardware.

The Titans of the GPU Market: Key Players and Growth Trends

The GPU market, once a battleground for numerous contenders, has largely consolidated around a few dominant players. These companies not only drive innovation but also shape the trends and supply of graphics and compute power globally. The market has grown substantially, particularly in recent years, which underscores the GPU’s increasing importance across many sectors.

Dominant Forces: NVIDIA, AMD, and Intel

In the discrete GPU market, two firms stand as clear leaders: NVIDIA and AMD. NVIDIA was instrumental in shaping and advancing the GPU. AMD significantly strengthened its position by acquiring ATI Technologies in 2006, which brought another major graphics company into its portfolio. The two firms have consistently pushed the boundaries of GPU technology, often amid intense rivalry.

Intel has also entered the discrete GPU market, though it currently holds a much smaller share. Its entry could alter the competitive landscape and give consumers and enterprises more choice. For now, however, NVIDIA and AMD hold most of the market.

A Soaring Market: Current and Future Projections

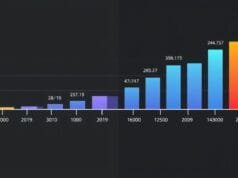

Market data reveals a clear trend: the GPU industry is growing rapidly, driven by rising demand for high-performance computing. Consider some recent projections:

| Metric | 2023 Value | 2024 Value | Projected 2030 Value | Projected 2033 Value | Projected 2034 Value |
|---|---|---|---|---|---|
| Global GPU Market Size | USD 52.1 billion | USD 66.4 billion | USD 237.50 billion | USD 404.9 billion | N/A |
| Global GPU Market CAGR (2024-2030 / 2024-2033) | N/A | N/A | 22.58% | 22.24% | N/A |
| Data Center GPU Market Size | N/A | N/A | N/A | N/A | USD 192.68 billion |
| Data Center GPU Market CAGR (2025-2034) | N/A | N/A | N/A | N/A | 27.52% |

These figures are staggering. The global GPU market was valued at USD 66.4 billion in 2024. It is projected to surpass USD 200 billion by 2030, and some estimates suggest it will approach USD 405 billion by 2033. A sustained Compound Annual Growth Rate (CAGR) above 20% underscores the GPU’s critical importance in the global economy.
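For readers who want to check how a CAGR relates to start and end values, here is a minimal Python sketch of the standard formula, CAGR = (end / start)^(1 / years) - 1. The function names are hypothetical. Applied to the 2024 and 2033 figures quoted above, it gives a rate of roughly 22% per year. The 2030 projection appears to come from a separate forecast, so it need not reproduce exactly from the same 2024 base.

```python
# Standard compound annual growth rate arithmetic.
# Function names are hypothetical; inputs are the figures quoted above.

def cagr(start_value, end_value, years):
    """Compound annual growth rate between two values, as a fraction."""
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value, rate, years):
    """Value after compounding `rate` annually for `years` years."""
    return start_value * (1 + rate) ** years


if __name__ == "__main__":
    rate = cagr(66.4, 404.9, years=9)  # USD billions, 2024 to 2033
    print(f"Implied CAGR 2024-2033: {rate:.2%}")
    print(f"2024 value grown at 22.24% for 9 years: "
          f"{project(66.4, 0.2224, 9):.1f} billion USD")
```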

In Q2 2025, NVIDIA commanded a 94% share of the add-in board (AIB) GPU market. This dominance stems from NVIDIA’s strategic investments in AI, machine learning, and data center infrastructure, and from the broad availability of and robust software support for its GPUs (e.g., CUDA). AMD held about 6% and Intel less than 1%. While gaming made up approximately 37% of the GPU market in 2023, servers and data center accelerators are the fastest-growing segment, driven primarily by the immense computational demands of generative AI training. This shift points to a significant redirection in the primary applications of GPUs.

The Future Unfolds: What’s Next for the GPU?

Looking ahead, the GPU’s trajectory promises even more remarkable advances. Innovation is accelerating and shows no sign of slowing, driven by robust demand from emerging technologies and expanding applications. The GPU’s future impact may prove even more profound than its storied past.

We anticipate continued rapid growth in computing power, which will enable more complex simulations and real-time processing. Improved ray tracing will further blur the line between virtual and real worlds and create more immersive digital experiences. Greater integration of AI functionality is also expected, including more specialized hardware for AI workloads built directly into the GPU die. This will accelerate machine learning and make AI more practical to use.

The Future of GPU Evolution

Novel architectural designs are also on the horizon. Multi-die GPUs, in which multiple smaller chips work together as a single unit, could significantly boost performance and efficiency. Heterogeneous computing, which uses different types of processors (such as CPUs and GPUs) together to optimize task execution, is likely to become more prevalent. Most importantly, energy efficiency will remain a paramount objective. As GPUs grow more powerful, controlling their power consumption becomes crucial for both environmental sustainability and data center operating costs.

Continued demand for AI, machine learning, high-performance computing, and cloud services will sustain the rapid expansion of the GPU market and foster greater diversification within it. The GPU’s evolution from a dedicated pixel pusher to a versatile parallel processor is a testament to human ingenuity and adaptability. It shows how a specialized instrument can mature into a vital catalyst for progress across numerous fields, and it is actively shaping our digital world.

A Legacy of Innovation and a Future Unwritten

The story of the GPU is about more than specifications and market share. It shows how human ingenuity consistently pushes the boundaries of computing. From rendering simple 2D text to powering intricate AI networks, the GPU has continually redefined what is digitally possible. It has transformed entertainment, advanced scientific discovery, and now spearheads the AI revolution.

This remarkable journey illustrates how specialized hardware can unlock new frontiers in computing. The GPU’s evolution from a single-purpose graphics chip to a versatile parallel processor is a profound success story that demonstrates its adaptability and its pivotal role in today’s digital transformation. Looking ahead, its ongoing development promises even greater strides and applications we can only begin to imagine.

What future applications do you envision for GPUs that we haven’t even conceived of yet? Please share your thoughts below! Perhaps you have an innovative idea for how these powerful processors could address novel challenges or create new forms of entertainment.