Imagine a future where medical research closely mimics human biology and artificial intelligence learns with the efficiency of the human brain. This is not science fiction: advances in Brain-on-a-Chip technology and neuromorphic computing are already moving us in that direction. These fields promise more than incremental improvements. They represent a fundamental rethinking of both how we study biology and how we design computers.

At their core, both technologies try to replicate the brain’s intricate functionality and remarkable efficiency. As the two disciplines converge, they could transform drug discovery, disease modeling, and computer architecture. Let’s look at how these brain-inspired technologies may reshape our technological landscape.

Understanding Brain-on-a-Chip Technology

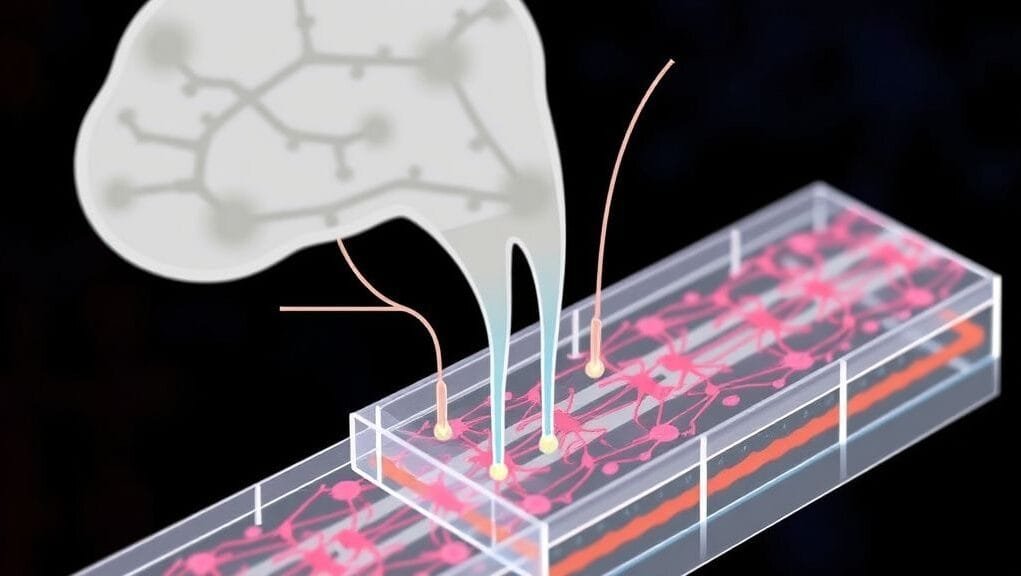

Brain-on-a-Chip technology uses microfluidic platforms engineered to recreate the physiological and pathophysiological responses of human brain tissue in vitro. These devices let scientists culture patient-derived neurons representing specific brain regions, offering an unprecedented window into how diseases develop and progress. They also provide a controlled environment for rigorously testing how well pharmacological treatments work.

Because these platforms use human-derived cells, they make models more reliable and yield insights that are more relevant for drug screening and disease monitoring than conventional animal models. In essence, you get a more accurate picture of how a drug might interact with human biology.

Revolutionizing Biomedical Research with Brain Chips

Brain-on-a-Chip technology is having a significant impact on biomedical research, opening new avenues for understanding and treating complex conditions. These small platforms are becoming essential laboratory tools because they offer a degree of precision and control that was previously unattainable, which makes them especially valuable for studying difficult neurological conditions.

Here’s how these chips are making a difference:

Key Applications in Biomedical Research

- Disease Modeling: Brain-on-a-Chip technology is instrumental in studying neurodegenerative diseases such as Alzheimer’s and Parkinson’s, because researchers can observe complex tissue structures and cell-cell interactions in a controlled setting. Researchers also use these chips to investigate aggressive conditions such as brain cancer, gaining insight into tumor growth and responses to therapy.

- Blood-Brain Barrier (BBB) Modeling: Advanced models can now reconstitute BBB-like structures, which is crucial for understanding how drugs cross this highly selective barrier to reach the brain. By using cells derived from induced pluripotent stem cells (iPSCs), scientists can also build patient-specific BBB models, paving the way for truly personalized medicine.

- Drug Discovery: These platforms offer a more physiologically relevant environment than traditional 2D cell cultures or even some 3D organoid models. As a result, Brain-on-a-Chip technology can substantially improve drug screening, which could lead to more effective, personalized medicines with fewer off-target effects.
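Drug transport across a BBB model is commonly summarized as an apparent permeability coefficient, P_app = (dQ/dt) / (A · C0), where dQ/dt is the rate at which the drug appears on the brain side, A is the barrier area, and C0 is the starting drug concentration on the blood side. The sketch below assumes a static, transwell-style readout with made-up sampling data; the function name and every number are illustrative, and chips with continuous flow may need a modified calculation.

```python
def apparent_permeability(times_s, receiver_nmol, area_cm2, donor_nmol_per_ml):
    """Hypothetical helper: P_app (cm/s) = (dQ/dt) / (A * C0).

    dQ/dt is estimated as the least-squares slope of the cumulative drug
    amount in the receiver (brain-side) compartment versus time.
    """
    n = len(times_s)
    mean_t = sum(times_s) / n
    mean_q = sum(receiver_nmol) / n
    cov = sum((t - mean_t) * (q - mean_q) for t, q in zip(times_s, receiver_nmol))
    var = sum((t - mean_t) ** 2 for t in times_s)
    flux_nmol_per_s = cov / var
    # 1 mL = 1 cm^3, so (nmol/s) / (cm^2 * nmol/cm^3) leaves cm/s.
    return flux_nmol_per_s / (area_cm2 * donor_nmol_per_ml)


# Hypothetical data: samples every 10 minutes, 1 cm^2 barrier, 10 uM (10 nmol/mL) dose.
times = [0, 600, 1200, 1800]
amounts = [0.0, 0.006, 0.012, 0.018]
papp = apparent_permeability(times, amounts, area_cm2=1.0, donor_nmol_per_ml=10.0)
print(f"P_app = {papp:.1e} cm/s")
```

With these invented numbers the script prints `P_app = 1.0e-06 cm/s`. Comparing such values across drugs, or across patient-specific chips, is one way a BBB model can rank how readily candidates reach the brain.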

Advanced Fabrication Techniques

These devices are built with advanced fabrication techniques such as photolithography, micromachining, and 3D printing, which create sophisticated microenvironments that overcome the limitations of traditional models. Working at this scale gives precise control over the cellular environment, including fluid flow and key biochemical cues, both of which are essential for mimicking in vivo conditions.
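To make “precise control over fluid flow” concrete: in a wide, shallow microchannel, the wall shear stress felt by cultured cells is approximately τ = 6μQ / (w·h²), and the low Reynolds number keeps the flow laminar and predictable. The sketch assumes a 1 mm × 100 µm channel, a perfusion rate of 10 µL/min, and a water-like medium (viscosity 1 mPa·s, density 1000 kg/m³). All of these values are illustrative assumptions, and the shear formula is the parallel-plate approximation, which holds only when the channel is much wider than it is tall.

```python
def ul_per_min_to_m3_per_s(flow_ul_per_min):
    # 1 uL = 1e-9 m^3; divide by 60 to convert per-minute to per-second.
    return flow_ul_per_min * 1e-9 / 60.0


def wall_shear_stress_pa(flow_m3_s, width_m, height_m, viscosity_pa_s=1e-3):
    # Parallel-plate approximation, valid for width >> height.
    return 6.0 * viscosity_pa_s * flow_m3_s / (width_m * height_m ** 2)


def reynolds_number(flow_m3_s, width_m, height_m, viscosity_pa_s=1e-3, density_kg_m3=1000.0):
    mean_velocity = flow_m3_s / (width_m * height_m)
    # Hydraulic diameter of a rectangular channel.
    hydraulic_diameter = 2.0 * width_m * height_m / (width_m + height_m)
    return density_kg_m3 * mean_velocity * hydraulic_diameter / viscosity_pa_s


q = ul_per_min_to_m3_per_s(10.0)
tau = wall_shear_stress_pa(q, width_m=1e-3, height_m=100e-6)
re = reynolds_number(q, width_m=1e-3, height_m=100e-6)
print(f"shear stress = {tau:.2f} Pa, Reynolds number = {re:.2f}")
```

Under these assumptions the script reports about 0.1 Pa (1 dyn/cm²) of shear and a Reynolds number of about 0.3, far below the roughly 2000 at which pipe flow typically turns turbulent. Because τ scales linearly with Q, the pump rate becomes a direct dial for the mechanical stress the cells experience.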

Figure: An infographic illustrating the applications of Brain-on-a-Chip technology in drug discovery, disease modeling, and personalized medicine.

The Dawn of Neuromorphic Computing

Whereas Brain-on-a-Chip technology focuses on emulating biology, neuromorphic computing tackles computational challenges by mimicking the brain’s remarkable efficiency. It represents a paradigm shift in computer design: the aim is to emulate neural structures and brain functions in order to make AI systems more efficient and adaptable. Traditional von Neumann architectures separate processing and memory; neuromorphic chips integrate the two.

This integration mirrors the relationship between biological neurons and synapses, and it eases the “von Neumann bottleneck,” the limit imposed by constantly shuttling data between a separate processor and memory. The result can be substantial gains in both energy efficiency and processing speed, a radical departure from conventional computer architecture.
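A toy count shows why colocating memory and compute matters. The sketch assumes a single fully connected layer whose weights do not fit in any cache, so a von Neumann machine must stream every weight from separate memory on every inference, while an idealized in-memory design keeps weights where they are used, much like synapses on a neuron. Real processors cache and reuse data, so treat this as an upper-bound illustration rather than a benchmark; the function names are hypothetical.

```python
def words_moved_von_neumann(n_in, n_out, inferences):
    # Each inference fetches the full n_in x n_out weight matrix,
    # reads n_in inputs, and writes n_out outputs across the memory bus.
    return (n_in * n_out + n_in + n_out) * inferences


def words_moved_in_memory(n_in, n_out, inferences):
    # Weights stay next to the compute, so only inputs and outputs
    # cross the memory boundary.
    return (n_in + n_out) * inferences


vn = words_moved_von_neumann(1000, 1000, inferences=100)
im = words_moved_in_memory(1000, 1000, inferences=100)
print(vn, im, vn // im)
```

For a 1000 × 1000 layer run 100 times, the von Neumann model moves 100,200,000 words versus 200,000 for the in-memory model, about 500 times more traffic. Since moving data typically costs far more energy than arithmetic on it, this is where much of the neuromorphic efficiency argument comes from.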

How Neuromorphic Chips Mimic the Brain’s Efficiency

Consider this: the human brain runs on roughly 20 watts, yet it handles tasks such as perception and learning that still challenge supercomputers drawing megawatts. This stark contrast highlights the potential of neuromorphic design. Engineered for ultra-low power consumption, neuromorphic systems offer a more sustainable answer to the ever-growing energy demands of traditional AI.

- Exceptional Energy Efficiency: Neuromorphic systems prioritize ultra-low power consumption. Intel’s Loihi 2 processors, for instance, achieve 15 trillion operations per second per watt (TOPS/W) on AI workloads, well above many conventional neural processing units (NPUs), which often fall below 10 TOPS/W. Some neuromorphic chips are reported to be up to 500 times more energy-efficient than GPUs on specific tasks. IBM’s TrueNorth is similarly frugal, operating at 1/10,000th the power density of a conventional von Neumann processor. Much of this efficiency comes from event-driven, asynchronous processing, in which only active components consume power.
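The efficiency figures above translate directly into energy per operation: 1 TOPS/W means one trillion (10^12) operations per second per watt, which is the same as 10^12 operations per joule. The sketch converts the quoted 15 TOPS/W and 10 TOPS/W figures to femtojoules per operation, then counts synaptic updates in a hypothetical network to show how event-driven processing avoids work. The network size, the 2% firing rate, and the function names are illustrative assumptions, not measurements of any chip.

```python
def energy_per_op_fj(tops_per_watt):
    # 1 TOPS/W = 1e12 operations per joule; convert joules to femtojoules (1e-15 J).
    joules_per_op = 1.0 / (tops_per_watt * 1e12)
    return joules_per_op / 1e-15


def synaptic_updates(num_neurons, fan_out, timesteps, firing_rate):
    # Clock-driven baseline: every neuron updates all of its targets at every step.
    clock_driven = num_neurons * fan_out * timesteps
    # Event-driven: targets are updated only when a neuron actually spikes.
    spikes = round(num_neurons * firing_rate * timesteps)
    event_driven = spikes * fan_out
    return clock_driven, event_driven


print(f"15 TOPS/W -> {energy_per_op_fj(15):.1f} fJ/op")
print(f"10 TOPS/W -> {energy_per_op_fj(10):.1f} fJ/op")
dense, sparse = synaptic_updates(1000, 100, 1000, firing_rate=0.02)
print(dense, sparse, dense // sparse)
```

At 15 TOPS/W each operation costs about 66.7 fJ, versus 100 fJ at 10 TOPS/W. The larger lever is sparsity: with only 2% of neurons spiking per step, the event-driven count is 50 times smaller than the clock-driven one, because silent neurons trigger no work at all.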

Key Architectural Features and Benefits

- Parallel Processing and Adaptability: By emulating the brain’s inherent parallelism, neuromorphic chips can handle many tasks concurrently and adapt dynamically to changing inputs. This supports real-time learning and rapid decision-making, a major advantage in dynamic environments.

- Spiking Neural Networks (SNNs): SNNs are a core component of neuromorphic computing. Like biological neurons, they process information through discrete “spikes,” or pulses, which makes them especially efficient at processing temporal and spatial data. Learning rules such as Spike-Timing-Dependent Plasticity (STDP) let these chips learn and adapt on their own, without explicit programming.

- Overcoming Traditional AI Limitations: Traditional AI models demand ever more computing power and energy. By rethinking computer architecture, neuromorphic computing offers a sustainable alternative and a path toward more environmentally friendly, scalable AI.
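The spiking and STDP ideas above can be sketched with two textbook models. A leaky integrate-and-fire (LIF) neuron lets its membrane potential leak toward rest while integrating input, then emits a spike and resets when the potential crosses a threshold. A pair-based STDP rule strengthens a synapse when the presynaptic spike precedes the postsynaptic one and weakens it otherwise. This is a minimal forward-Euler sketch in dimensionless units; the parameter values and function names are illustrative assumptions and do not describe how Loihi, TrueNorth, or any other chip implements these models.

```python
import math


def simulate_lif(input_current, dt=1.0, tau_m=20.0, v_rest=0.0,
                 v_thresh=1.0, v_reset=0.0, r_m=1.0):
    """Leaky integrate-and-fire: tau_m * dv/dt = -(v - v_rest) + r_m * I.

    Integrates with forward Euler and returns the spike times (step * dt).
    """
    v = v_rest
    spikes = []
    for step, current in enumerate(input_current):
        v += (-(v - v_rest) + r_m * current) * dt / tau_m
        if v >= v_thresh:       # threshold crossing emits a discrete spike
            spikes.append(step * dt)
            v = v_reset         # membrane potential resets after firing
    return spikes


def stdp_delta_w(t_pre, t_post, a_plus=0.01, a_minus=0.012,
                 tau_plus=20.0, tau_minus=20.0):
    """Pair-based STDP weight change for one pre/post spike pair."""
    delay = t_post - t_pre
    if delay > 0:   # pre fired before post: potentiate
        return a_plus * math.exp(-delay / tau_plus)
    if delay < 0:   # post fired before pre: depress
        return -a_minus * math.exp(delay / tau_minus)
    return 0.0


spike_times = simulate_lif([1.5] * 100)   # constant supra-threshold drive
print(spike_times)
print(stdp_delta_w(t_pre=10.0, t_post=15.0))  # positive change
print(stdp_delta_w(t_pre=15.0, t_post=10.0))  # negative change
```

With a constant input of 1.5 the neuron fires at regular intervals (steps 21, 43, 65, and 87), while an input of 0.5 never reaches threshold and produces no spikes. Note that information lives in *when* spikes occur, and the STDP rule uses exactly that timing to adjust weights locally.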

Leading the Charge: Examples of Neuromorphic Innovation

Several groundbreaking projects and companies are leading neuromorphic computing development, and their work shows the diverse approaches and impressive capabilities emerging in the field. Here are some key players and their contributions to brain-inspired computing.

Key Neuromorphic Platforms

| Neuromorphic Chip/Platform | Key Features | Performance Highlights |
|---|---|---|
| Intel Loihi series | Research chips supporting on-chip learning and spiking neural networks. | Loihi 2 reaches 15 TOPS/W on AI workloads. Hala Point, Intel’s largest system, combines 1,152 Loihi 2 processors with 1.15 billion artificial neurons and 128 billion artificial synapses. It performs 20 quadrillion operations per second (20 petaops) and, at full neuron capacity, runs 20 times faster than a human brain. |
| IBM TrueNorth | Uses one million neurons to produce brain-like behavior. | Operates at 1/10,000th the power density of a conventional von Neumann processor. |
| BrainScaleS | European research platform (Human Brain Project). | Emulates complex neuronal dynamics faster than biological real time: BrainScaleS-2 runs 864 times faster, and legacy systems up to 10,000 times faster. |
| SpiNNaker | European research platform (Human Brain Project). | Over a million cores; runs numerical models in real time. Designed for large-scale brain simulations and robotics. |
| IISc “brain on a chip” | Molecular film technology for storing and processing data. | Stores and processes data in 16,500 states within a molecular film, a significant advance in brain-inspired analog computing and an alternative hardware approach to brain-like computation. |
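The Hala Point and BrainScaleS figures in the table can be sanity-checked with simple arithmetic. The sketch divides Hala Point’s totals to get average neurons per chip and synapses per neuron, and converts an acceleration factor into wall-clock time. These are averages derived only from the numbers quoted in the table, and the helper names are hypothetical.

```python
def hala_point_averages(total_neurons, total_synapses, num_chips):
    # Average load per chip and average connectivity per neuron.
    neurons_per_chip = total_neurons / num_chips
    synapses_per_neuron = total_synapses / total_neurons
    return neurons_per_chip, synapses_per_neuron


def wall_clock_seconds(biological_seconds, speedup):
    # An accelerated system emulates biological time `speedup` times faster.
    return biological_seconds / speedup


per_chip, per_neuron = hala_point_averages(1.15e9, 128e9, 1152)
print(f"~{per_chip:,.0f} neurons per Loihi 2 chip, ~{per_neuron:.0f} synapses per neuron")

one_day = 24 * 60 * 60  # 86,400 s of biological time
print(wall_clock_seconds(one_day, 864))     # BrainScaleS-2
print(wall_clock_seconds(one_day, 10_000))  # legacy BrainScaleS, upper bound
```

On these figures each chip hosts close to one million neurons on average (about 998,264), each neuron has roughly 111 synapses, and a full day of biological activity would take about 100 seconds on BrainScaleS-2 and under 9 seconds at the legacy 10,000× rate.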

Real-World Applications of Neuromorphic Systems

Beyond the lab, neuromorphic computing could transform many industries. Because it processes information efficiently and adaptively, it is well suited to situations that need real-time intelligence at the edge. Researchers are also exploring how Brain-on-a-Chip technology might be integrated with these systems.

Diverse Use Cases

- Robotics: Neuromorphic chips enable advanced sensory processing and autonomous decision-making, letting robots interact more intelligently with their environment. Imagine robots that learn and adapt on the fly, performing complex tasks in unpredictable settings.

- IoT Devices: For Internet of Things (IoT) devices, neuromorphic computing enables on-device data processing. This reduces latency and minimizes the need to send large volumes of data to the cloud, improving both privacy and responsiveness.

- Edge Computing: In edge computing, where resources are often limited, neuromorphic processors can handle data efficiently on site. This is crucial for applications that need immediate insights without relying on centralized data centers.

- Autonomous Vehicles: Real-time navigation and perception are critical for autonomous vehicles. Neuromorphic systems can process sensor data quickly and with little energy, improving safety and responsiveness on the road.

- Healthcare: Neuromorphic computing offers solutions ranging from wearable health monitors to sophisticated prosthetics and neural interfaces, supporting personalized health management and restorative technologies. These systems could enable new levels of data analysis and control.

- Aerospace and Defense: Their resilience and efficiency make neuromorphic systems well suited to demanding aerospace and defense applications, where robust, adaptive computing is paramount.

Navigating the Hurdles: Challenges and Future Outlook

Despite the immense promise of Brain-on-a-Chip technology and neuromorphic computing, significant hurdles remain, and both technologies are still nascent. Overcoming these challenges will require sustained interdisciplinary effort and significant investment. The path forward is exciting, but complex.

Key Challenges Ahead

- Biological Complexity: Replicating the brain’s intricate neural networks, with their structural connections and dynamic cell interactions, is difficult within Brain-on-a-Chip devices. The human brain is often called the most complex known object in the universe, which makes its full emulation extraordinarily hard.

- Neuromorphic Hardware Limitations: Neuromorphic processors such as Intel’s Loihi still suffer from high processing latency in some applications. Scalability issues also persist because of the unusual training and replication requirements of these novel systems, so building them efficiently at scale remains a major engineering task.

Broader Implications and Development Hurdles

- Software and Programming: Developing software and algorithms for Spiking Neural Networks (SNNs) is inherently complex, and established tools, frameworks, and benchmarks are scarce compared with the mature ecosystem for conventional deep learning. This gap must be closed before the technology can be widely adopted.

- Interdisciplinary Nature: Neuromorphic computing is highly interdisciplinary, demanding close collaboration among neuroscientists, computer scientists, electrical engineers, and physicists. This adds complexity but also fuels its innovative potential.

- Ethical Concerns: As neuromorphic systems advance, especially in areas such as brain-computer interfaces, critical ethical questions arise about privacy, security, and consciousness. Society will need to grapple with these questions as the technology evolves.

For now, traditional GPUs still dominate AI thanks to their scalability and mature ecosystem. Neuromorphic processors offer a different approach, one that is energy-efficient and highly adaptive. Realizing the full potential of neuromorphic chips will require a deeper understanding of how the brain computes, but ongoing research and major investments by companies such as Intel and IBM are steadily laying the groundwork. Fueled by advances in Brain-on-a-Chip technology, brain-inspired computing may well become an integral and transformative part of our technological landscape.

What excites you most about brain-inspired computing, and what impact do you think it will have on society? Share your thoughts in the comments below!